The Future of AI-generated Imagery: A Step Forward

“With Midjourney 6, it’s now possible to rely entirely on AI images for my websites.”

Those words — spoken to me by a large independent online publisher — should strike fear into the hearts of anyone in the photography industry.

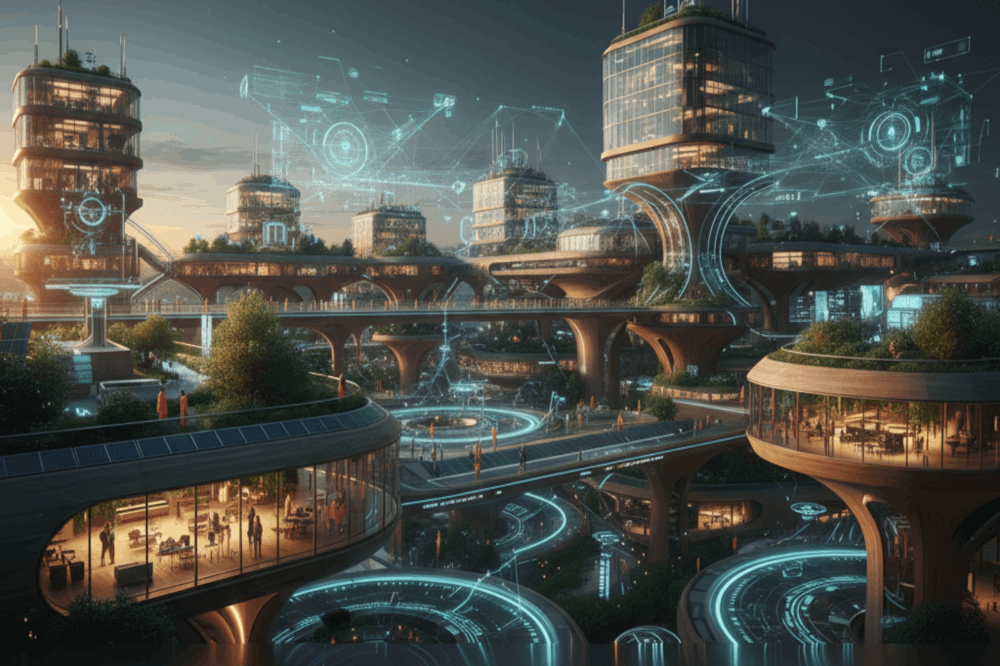

I agree with the publisher. Using Midjourney’s new Version 6 doesn’t initially feel revolutionary. But once you dig deeper, you begin to realize it’s a massive step forward for AI-generated imagery.

For a specific kind of photo, Midjourney’s output will indeed eliminate the need for human photographers. Here’s why — and how photographers must adapt.

AI image generators like DALL-E and Midjourney have become remarkably powerful in a remarkably short time.

I still vividly remember attending a conference for my industry trade group, the Digital Media Licensing Association, in 2019. DMLA brought in experts from big AI-focused companies like IBM and Microsoft to talk about the then-hot AI topic: automated tagging of objects in images.

The experts did indeed address that topic. But during lunch on the rooftop sundeck of the Marriott in Marina del Rey, four of the AI panelists chose to sit together. I tagged along and listened in.

Chatting casually among themselves, these leading AI researchers and practitioners agreed on one thing: image tagging is cool, but give the tech a couple of years, and it will be generating new images based on a couple of sentences of text.

At the time, this seemed ludicrous. AI image generation was in its early, early infancy. It would be another two years before OpenAI released the first version of DALL-E. And even when that happened, the initial output of AI image generators wasn’t exactly impressive.

As a photographer, it was easy to look at AI imagery examples like this one from 2021 and go “Nah, that’s not going to replace a human any time soon.” Of course, the experts turned out to be completely right.

In just a few short years, AI image generators surged ahead and began producing images that rivaled real photographs in their detail, realism, and visual power. Here’s the same (bizarre) armchair avocado prompt from the previous image, only rendered by DALL-E 3 today.

We went from grainy, low-res, clipart-like images to beautiful, photorealistic renders in just under three years. It’s mind-blowing.

For certain types of images, the progress is even more remarkable. While early AI image generators were decent at creating realistic, close-up images of faces, they initially struggled to create more complex images of people that looked natural and convincing.

A simple look at the progression of people-centered images from Midjourney V1 (released in 2022) to Midjourney V5.1 (the most up-to-date version as of late 2023) illustrates how far these images have come, and how quickly.

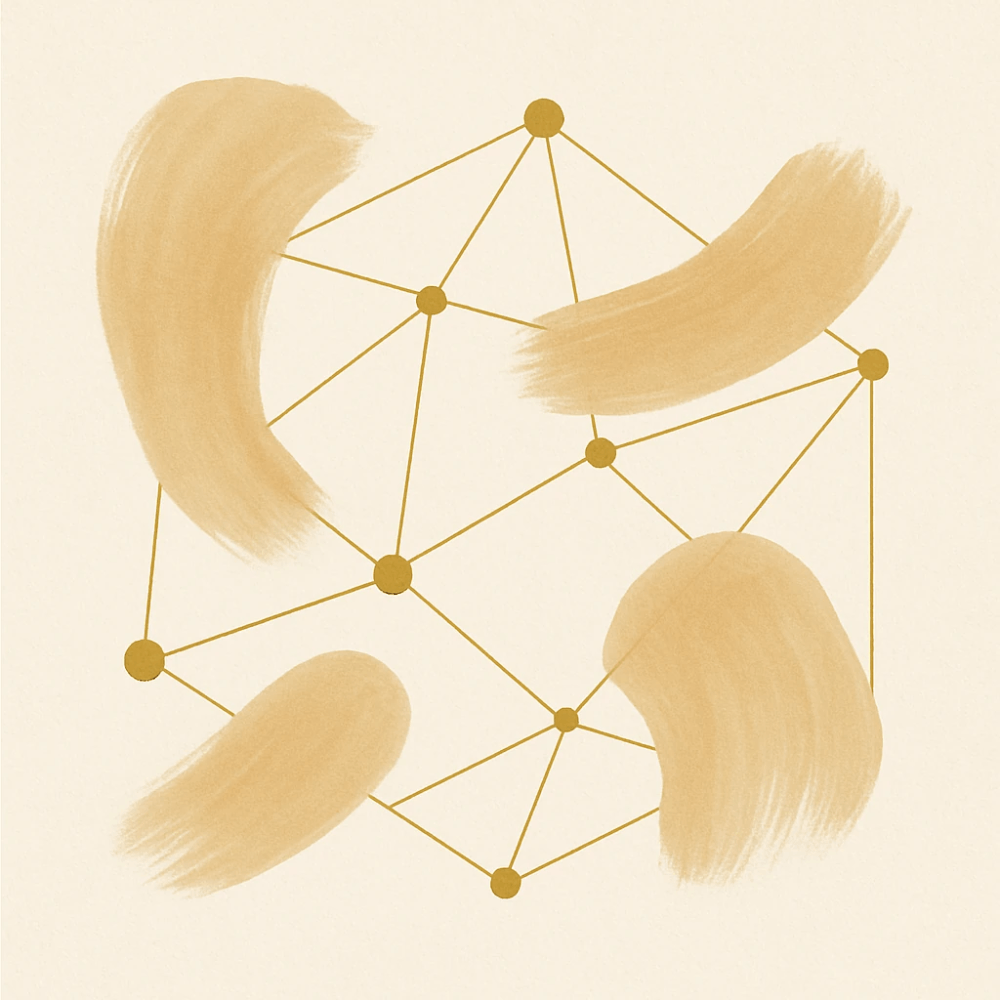

Despite all this rapid progress, Midjourney V5.1 images still weren’t perfect, or even necessarily very usable. They gave a semblance of reality but often lacked crucial details.

Version 5.1, for example, struggled to render hands realistically. Famously, AI-generated images often showed people with six fingers, four fingers, or no fingers at all.

For all its power, Version 5.1 also created images that still had a somewhat cartoony look.

This image, which I created with 5.1, shows the wrong number of fingers on the person’s hand, with a weird bonus fingernail floating just below the clenched fist. The hand itself also looks too smooth — more like a drawing of a hand than the skin of an actual person.

These images, in other words, look good enough to make us think — for a split second — that they might be real.

But after a second, it’s obvious that there’s something off about them. If anything, they look worse than a simple illustration or clipart image would.

Out of the Uncanny Valley

Getting close to realistic, yet falling short, is creepier than sticking with abstraction. A semi-realistic hand with floating bits of finger looks way skeevier than a cartoonish hand with the right number of digits.