The Evolution of Artificial Intelligence in Healthcare

Artificial intelligence (AI) refers to the simulation of human intelligence by machines, specifically computer systems. These machines can mimic cognitive functions associated with the human brain, such as learning, memory, problem-solving and decision-making. The field of AI is generally traced to a 1956 workshop at Dartmouth College; John McCarthy, an American computer scientist and cognitive scientist, coined the term ‘artificial intelligence’ in the proposal for that workshop. Since then, many subsets of AI have been introduced with the aim of giving computers the ability to perform tasks once regarded as strictly human.

Most of the AI we hear about today, such as self-driving cars, manufacturing robots and disease mapping, relies heavily on machine learning, deep learning and natural language processing.

Natural language processing is a branch of AI that bridges the gap between human communication and computer understanding. It allows computers to extract meaning from human language and make decisions based on that information. Virtual assistants such as Siri and Amazon Alexa are prime examples of natural language processing.

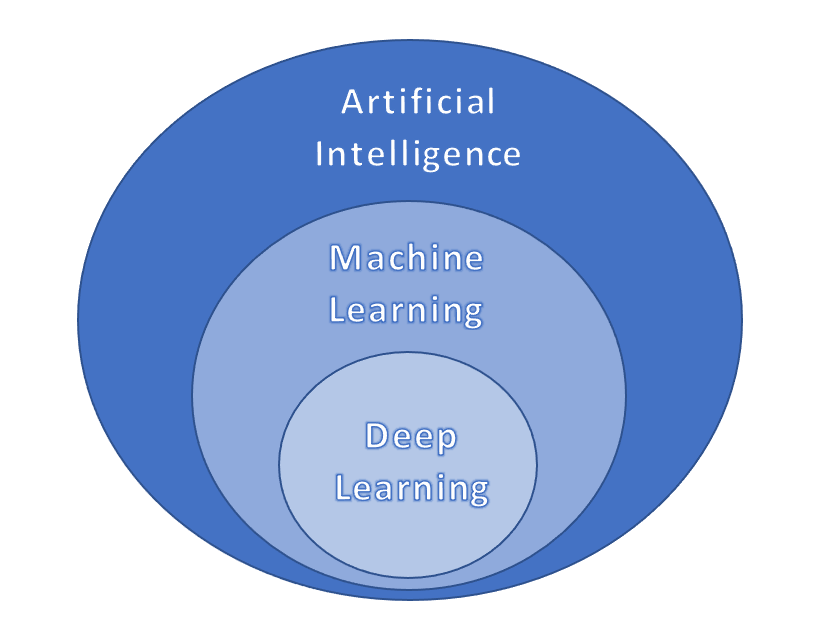

Machine learning and deep learning are more advanced subsets of AI, with deep learning itself being a subset of machine learning. Machine learning is a relatively old subset of AI that enables a computer to learn from experience, loosely as a human does. It uses data and algorithms to help a computer learn a specific task without being explicitly programmed for it. In general, the more good-quality data you provide, the better the machine gets at the task.

Despite this incredible technological development, researchers found that machine learning models struggled with basic problems that young children solve with ease. The problem wasn’t the concept of machine learning per se; it had more to do with the relatively simple neural networks used in machine learning at the time. It should come as little surprise that a network of thousands of neurons didn’t have the same capabilities as the 86-billion-neuron human brain. So, researchers came up with a further advancement known as ‘deep learning’.

Deep learning is a specialised extension of machine learning that enables systems to group data and make predictions with greater accuracy and less human intervention. A deep learning model is a neural network of three or more layers. These networks attempt to simulate the cognitive abilities of the human brain more closely, allowing them to digest and learn from larger amounts of data. While we are still a long way from mimicking the human brain, deep learning is a move in the right direction. Take, for example, a self-driving car. The car must be able to decide its next move, recognise pedestrians, bikes, road signs and bends in the road, and act accordingly. Even when it meets a situation it has never encountered before, it can still make decisions because its ‘deep’ neural network has learned general patterns from the data it was trained on.

Schematic by Benjamin Musrie with permission
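To make the idea of ‘layers’ more concrete, here is a minimal sketch of a forward pass through a tiny feedforward network: three inputs, two hidden layers and one output. The weights and the input values are hypothetical numbers chosen only for illustration. In a real deep learning system the weights would be learned from data, and the network would be vastly larger.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: pass positive values, zero out negatives."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked weights; training would normally learn these.
LAYERS = [
    # hidden layer 1: 3 inputs -> 4 neurons
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.0], relu),
    # hidden layer 2: 4 inputs -> 2 neurons
    ([[0.5, -0.3, 0.8, 0.2], [-0.6, 0.4, 0.1, 0.9]], [0.05, -0.05], relu),
    # output layer: 2 inputs -> 1 neuron, squashed to a score between 0 and 1
    ([[1.2, -0.7]], [0.0], sigmoid),
]


def forward(features):
    """Feed the input through every layer in turn and return the final score."""
    activations = features
    for weights, biases, activation in LAYERS:
        activations = dense(activations, weights, biases, activation)
    return activations[0]


score = forward([1.0, 0.5, -0.3])
print(f"output score: {score:.3f}")
```

Training would adjust these weights, typically by backpropagation and gradient descent, so that the outputs match labelled examples. Stacking more of these layers is what makes a network ‘deep’.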

So how can we apply this type of technology today? One field of interest is the healthcare sector. A new healthcare revolution is upon us. The relationship between doctor and patient, methods of treatment and diagnosis, and the management and organisation of health systems are quickly changing. Technological advancements such as AI, robotics and digital health are the main drivers of this change, fuelled by the increasing availability of healthcare data and the rapid progress of analytics techniques (Jiang et al., 2017).

In 1950, it took approximately 50 years for the amount of medical knowledge to double. By 2010, that timeframe had shrunk to just 3.5 years. By 2020, it was projected to take only 73 days (Densen, 2011).

Organising, comparing and studying this data could answer questions about disease that researchers have spent their careers pursuing. It is not feasible for humans to process this colossal amount of data manually, which is why we have turned to AI. AI has demonstrated the ability to handle and process this type of data at a speed and scale far beyond human capacity.
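The doubling times quoted above (Densen, 2011) are easier to compare once they are converted into yearly growth. If something doubles every T days, it grows by a factor of 2^(365/T) in a year. Here is a minimal Python sketch that assumes steady exponential growth and 365-day years:

```python
def annual_growth_factor(doubling_time_days: float) -> float:
    """How many times the total grows in one year, given its doubling time in days."""
    return 2 ** (365 / doubling_time_days)


# Doubling times quoted in the text, converted to days.
doubling_times = {
    "1950": 50 * 365,
    "2010": 3.5 * 365,
    "2020 (projected)": 73,
}

for year, days in doubling_times.items():
    factor = annual_growth_factor(days)
    print(f"{year}: x{factor:.3f} per year ({(factor - 1) * 100:.1f}% growth)")
```

A 73-day doubling time means five doublings a year, which is 2^5 = 32-fold growth. By contrast, a 50-year doubling time corresponds to only about 1.4% growth per year.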

Popular AI techniques used in healthcare include machine learning, deep learning and natural language processing. These techniques are used primarily in diagnosis, management, treatment and prognosis, and their main benefits are in efficiency and precision. AI, however, has rarely been implemented in clinical practice. It is more prevalent in research labs and tech firms, so there is no need to worry about robot doctors…yet.

In diagnostics, AI has allowed health professionals to give earlier and more accurate diagnoses for many kinds of diseases (Sajda, 2006). For example, gene expression profiling, a very important diagnostic tool, can be analysed with machine learning, where an algorithm flags abnormal expression patterns (Molla, Waddell, Page & Shavlik, 2004). A relatively new application is classifying cancer microarray data for cancer diagnosis (Tham et al., 2017). Machine learning can also help predict the survival rates of cancer patients, such as those with colon cancer (Ahmed, 2005).

AI is now being applied to important biomedical tests such as electroencephalography (EEG), electromyography (EMG) and electrocardiography (ECG). EEG helps in diagnosing and predicting epileptic seizures, and predicting seizures is vital to minimise harm to patients. In recent years, AI, particularly deep learning, has been recognised as a key element of accurate and reliable seizure prediction systems (Bou Assi et al., 2017; Fergus et al., 2015). One of the best-performing deep learning models is a supervised deep convolutional autoencoder (SDCAE). Using a public dataset collected from the Children’s Hospital Boston (CHB) and the Massachusetts Institute of Technology (MIT), this model obtained 98.79 ± 0.53% accuracy, 98.72 ± 0.77% sensitivity, 98.86 ± 0.53% specificity and 98.86 ± 0.53% precision. These results make it one of the most effective seizure prediction systems reported (Abdelhameed & Bayoumi, 2021).
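The four figures reported for the SDCAE model are standard classification metrics. Each one is calculated from a confusion matrix, which counts a model's correct and incorrect predictions. Here is a minimal Python sketch of how they are computed. The counts below are invented for illustration and are not taken from Abdelhameed & Bayoumi (2021).

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # true positives: seizures correctly flagged
    fp: int  # false positives: seizure-free periods wrongly flagged
    tn: int  # true negatives: seizure-free periods correctly left alone
    fn: int  # false negatives: seizures the model missed


def metrics(cm: ConfusionMatrix) -> dict:
    """Accuracy, sensitivity, specificity and precision from raw counts."""
    total = cm.tp + cm.fp + cm.tn + cm.fn
    return {
        "accuracy": (cm.tp + cm.tn) / total,      # share of all calls that were right
        "sensitivity": cm.tp / (cm.tp + cm.fn),   # share of real seizures caught
        "specificity": cm.tn / (cm.tn + cm.fp),   # share of seizure-free periods passed
        "precision": cm.tp / (cm.tp + cm.fp),     # share of alarms that were real
    }


# Hypothetical counts for illustration only, not from the study.
example = ConfusionMatrix(tp=90, fp=20, tn=80, fn=10)
for name, value in metrics(example).items():
    print(f"{name}: {value:.2%}")
```

Sensitivity and specificity pull in different directions. A model that flags everything as a seizure scores perfect sensitivity but zero specificity, which is why studies report several metrics together.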

AI also has the potential to provide insights that lead to more streamlined treatment options. Immunotherapy, which uses the body’s own immune system to fight stubborn tumours, is one of the most promising avenues for treating cancer. However, immunotherapy works better in some cancers than in others, and it is effective for some patients but fails in others.

Machine and deep learning algorithms, with their ability to organise and extract key information from clinical datasets, have shown promise in further developing cancer immunotherapy.

“Recently, the most exciting development has been checkpoint inhibitors,” explained Long Le, MD, PhD, Director of Computational Pathology and Technology Development at the MGH Center for Integrated Diagnostics. Checkpoint proteins act as brakes on the immune response, stopping T cells from attacking tumour cells. Blocking these proteins with an immune checkpoint inhibitor releases the brakes and allows T cells to kill tumour cells. The main advantages of checkpoint inhibitors include longer survival, greater potency against cancer cells and lower toxicity than standard chemotherapy agents.

However, he stresses the need for a larger patient population: “We definitely need more patient data. The therapies are relatively new, so not a lot of patients have been put on these drugs. So, whether we need to integrate data within one institution or across multiple institutions is going to be a key factor in terms of augmenting the patient population to drive the modelling process.”

More recent applications of AI include the examination of radiographs and histology slides. In 2018, researchers at Seoul National University Hospital and College of Medicine developed an automated system, Deep Learning-based Automatic Detection (DLAD), that can classify chest radiographs and detect malignant pulmonary nodules. The algorithm’s performance was compared with that of physicians reading the same images, and it outperformed 17 of the 18 doctors (Nam et al., 2018).

Another algorithm comes from researchers at Google AI Healthcare, who in 2018 created the Lymph Node Assistant (LYNA). LYNA is a deep learning algorithm for identifying cancer cells in lymph node biopsies. It was able to identify suspicious regions in the biopsy samples that were indistinguishable to the human eye. LYNA was tested on two different datasets and correctly classified a sample as cancerous or non-cancerous 99% of the time. Pathologists working alongside LYNA were more accurate than either pathologists or the algorithm alone, and significantly faster than unassisted pathologists. Google suggests that such algorithms could be used alongside physicians to streamline the review process.

All these findings show the potential strength of algorithms in healthcare. So, what is holding them back from widespread clinical use?

Image by Jenny Liu with permission

For one, there is the irreplaceable doctor-patient relationship. Emotional intelligence is the area where humans will always outperform AI. The empathy and compassion shown by doctors are uniquely human. At present, it is unlikely that you would trust a robot or algorithm with a life-altering decision. If we don’t trust machines to take blood samples, we will certainly want a doctor holding our hand when we receive a life-changing diagnosis. However, handing time-consuming, monotonous tasks to AI would free doctors to focus on other work, such as understanding the day-to-day patterns and needs of their patients.

American cardiologist Eric J. Topol recognises how AI may revolutionise the doctor-patient relationship and even the way doctors are selected: “The greatest opportunity offered by AI is not reducing errors or workloads, or even curing cancer: it is the opportunity to restore the precious and time-honoured connection and trust — the human touch — between patients and doctors. Not only would we have more time to come together, enabling far deeper communication and compassion, but also we would be able to revamp how we select and train doctors.”

The introduction of AI will also have implications for the healthcare workforce. According to a report from the World Economic Forum, machines with AI could displace 85 million jobs by 2025. While this number may sound alarming, the same report projects that 97 million new jobs will be created by 2025 as a result of AI. In healthcare specifically, AI has so far been difficult to integrate into clinical workflows and electronic health record systems, which has limited its impact on jobs. Once those issues are resolved, the healthcare jobs most likely to be automated are those that deal with digital information and involve little direct patient contact, such as radiology and pathology.

But AI’s penetration into radiology and pathology is likely to be slow, because these jobs require more than reading and interpreting images. Radiologists consult with other physicians on diagnosis and treatment, perform image-guided interventions such as cancer biopsies and vascular stenting, and discuss test results with patients, among many other activities. Deep learning algorithms, by contrast, are generally trained for a single narrow task, whereas these specialties require many different tasks to be performed with high accuracy. Building a machine that could do all of this with very high precision would be difficult with current technology.

There are also huge ethical challenges that must be addressed before the widespread adoption of AI.

Firstly, transparency is already a big issue. Many AI algorithms, particularly deep learning algorithms, are difficult or even impossible to explain. If a patient is told they have a life-threatening diagnosis or need emergency surgery, they will undoubtedly want to know why. Yet to protect investment and intellectual property, and to limit cybersecurity risks, developers may not make their data and algorithms public, which makes such decisions even harder to explain. For this reason, governmental or third-party auditing may be needed.

Furthermore, mistakes will undoubtedly happen when AI is used in patient diagnosis and treatment, and it may be difficult to establish accountability when they do. There may also be instances where patients receive medical information from an AI system that they would prefer to hear from an empathetic clinician.

AI is also limited by the data it is trained on, which carries a risk of bias. Several real-world examples have shown algorithms’ tendency to amplify biases, which can lead to injustice based on ethnic origin, skin colour and gender (Short, 2018; Cossins, 2018; Fefegha, 2018; Obermeyer, Powers, Vogeli & Mullainathan, 2019). Biased algorithms may lead to misdiagnosis or suggest incorrect treatments, and so jeopardise patient safety.

A well-publicised real-life example of problems caused by training data is IBM Watson for Oncology. Watson for Oncology is a complex system designed to support medical and clinical research and to offer advice on cancer treatment. It came under scrutiny for reportedly giving “unsafe and incorrect” recommendations for cancer treatment (Ross & Swetlitz, 2018). The problem reportedly stemmed from its training. The software was trained on a small number of hypothetical cancer cases rather than real patient data, and on treatment recommendations from a few specialists rather than on ‘guidelines and evidence’. According to STAT, internal documents from former IBM Watson Health Deputy Chief Health Officer Andrew Norden, MD, include assessments of Watson saying it produced “often inaccurate” recommendations that pose “serious questions about the process for building content and the underlying technology.” The documents also showed that several IBM employees told Dr. Norden the product was “very limited.”
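One common way to check for the group-level bias described earlier is to compare a model's error rates across patient groups. Here is a minimal Python sketch that computes the false-negative rate (the share of true cases the model missed) for each group. The records and group names are hypothetical, and this is a generic audit check, not the method used in the studies cited above.

```python
from collections import defaultdict

# Each record: (patient group, true label, model prediction); 1 = disease present.
# Hypothetical data for illustration only.
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 1), ("group_b", 0, 0),
]


def false_negative_rate_by_group(rows):
    """For each group, the share of truly positive cases the model missed."""
    missed = defaultdict(int)
    positives = defaultdict(int)
    for group, truth, prediction in rows:
        if truth == 1:
            positives[group] += 1
            if prediction == 0:
                missed[group] += 1
    return {group: missed[group] / positives[group] for group in positives}


rates = false_negative_rate_by_group(records)
for group, rate in sorted(rates.items()):
    print(f"{group}: missed {rate:.0%} of true cases")
```

In this toy data, the model misses twice as many real cases in one group as in the other. A gap like this should prompt a closer look at how each group is represented in the training data.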

It is important that governments and regulatory bodies monitor key issues and act accordingly. Healthcare and patient data has always been, and will continue to be, highly confidential and sensitive. It is essential that we maintain patient confidentiality and stay in control of such powerful information. The need for regulation has been highlighted by prominent technology figures such as Elon Musk, who has stated, “I’m increasingly inclined to think there should be some regulatory oversight, maybe at the national and international level just to make sure that we don’t do something very foolish.”

AI is an extremely powerful tool, and we believe it has enormous potential in the healthcare industry of the future. While early efforts in diagnostics and treatment have proven challenging, we expect that AI will soon master these domains. The greatest challenge will be adopting these algorithms in daily clinical practice. Legislation and guidelines will also be needed for how these algorithms are developed, integrated and used, to ensure safe and reliable practice. It appears that AI will not simply replace human workers: projections suggest that while machines will displace some jobs, they will also create new ones. That said, physicians will likely have to learn to work alongside AI. With machines handling tasks such as image analysis, clinicians will be free to focus on work that requires uniquely human skills like empathy, compassion and persuasion. Ultimately, the healthcare industry of the future will depend on physicians themselves, as it seems likely that those who adopt AI will replace those who don’t.