Understanding Machine Learning and Artificial Intelligence: An Introductory Guide

Machine learning and artificial intelligence (AI) are exciting fields that are transforming many industries. Here is an introduction to some of the key concepts, algorithms, applications and ethical considerations around machine learning and AI:

What is Machine Learning?

Machine learning is a subfield of artificial intelligence that enables computers to learn and improve from experience without being explicitly programmed. The goal of machine learning is to develop algorithms that can receive input data and use statistical analysis to predict an output value within an acceptable range.

Some of the most common types of problems solved using machine learning include:

- Classification: Assigning each input to one of a set of predefined categories (classes). Common algorithms include logistic regression, decision trees, random forests and support vector machines (SVM).

- Regression: Predicting a numeric value given an input. Common algorithms include linear regression, decision trees and neural networks.

- Clustering: Finding similarities between data points and grouping them based on patterns. Algorithms like k-means are used for clustering.

- Anomaly detection: Identifying abnormal or outlier data points that do not conform to expected patterns.

Machine learning algorithms build models by examining many examples (often thousands or millions) and detecting patterns in them. In general, models become more accurate as they are trained on more, and more representative, data.

How Machine Learning Works

The machine learning process works as follows:

- Define the problem: Identify the task you want the machine learning model to perform. Common tasks include classification, regression, clustering, recommendation, etc.

- Prepare the data: Collect quality data relevant to the problem. Clean the data by handling missing values, converting data types, etc.

- Choose a model: Select the type of algorithm to use based on the problem. Common algorithms include linear regression, random forests, support vector machines, neural networks, etc.

- Train the model: Feed the data into the selected model to train it. The model learns patterns from the data.

- Evaluate the model: Test the performance of the trained model on new unseen data to evaluate accuracy, precision, recall, etc.

- Improve the model: Use techniques like hyperparameter tuning to improve model performance.

- Deploy the model: Integrate the model into applications and systems to automate tasks and generate predictions.

- Maintain the model: Monitor the model in production and re-train it as needed on new data to keep it current.
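The prepare, train and evaluate steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions: the toy dataset is hypothetical, the helper names (`train_test_split`, `train_nearest_centroid`, `predict`, `accuracy`) are made up for this example, and a nearest-centroid classifier stands in for whatever model a real problem calls for. Deployment and maintenance are not shown.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Hypothetical helper: shuffle a copy of the data and hold out a fraction as a test set."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_fraction)
    return rows[n_test:], rows[:n_test]   # (train, test)

def train_nearest_centroid(train):
    """'Training' here just computes the mean feature vector (centroid) of each class."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(model, features):
    """Predict the class whose centroid is closest (squared Euclidean distance)."""
    return min(model, key=lambda label: sum((c - x) ** 2 for c, x in zip(model[label], features)))

def accuracy(model, test):
    """Evaluate on held-out data: fraction of correct predictions."""
    return sum(predict(model, f) == y for f, y in test) / len(test)

# Hypothetical toy data: two well-separated groups of 2-D points.
data = (
    [([1.0 + 0.1 * i, 1.0], "a") for i in range(8)]
    + [([5.0 + 0.1 * i, 5.0], "b") for i in range(8)]
)

train, test = train_test_split(data)        # prepare the data
model = train_nearest_centroid(train)       # train the chosen model
print(f"test accuracy: {accuracy(model, test):.2f}")   # evaluate on unseen data
```

Swapping in a different model only changes the train and predict functions; the split-then-evaluate structure stays the same, which is what keeps the test data unseen during training.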

This general workflow is followed to apply machine learning to a wide range of real-world problems. The most important steps that require both domain expertise and machine learning knowledge are choosing the right data, algorithm and performance metrics for a given problem.

Types of Machine Learning Algorithms

There are three main categories of machine learning algorithms:

Supervised Learning

In supervised learning, the algorithms are trained using labeled examples. This means input data is accompanied by the desired output label or value. Some common supervised learning algorithms include:

- Linear regression - Predicts a numeric value. Used for regression problems.

- Logistic regression - Predicts a categorical value. Used for classification.

- Decision trees - Predict a value by following a tree of if/then rules. Handle both categorical and numerical data.

- Random forest - An ensemble of decision trees whose combined predictions reduce the overfitting of a single tree.

- Naive Bayes - Calculates class probabilities using Bayes' theorem, assuming features are conditionally independent given the class. Used for classification.

- Support vector machines (SVM) - Find the optimal boundary between classes. Effective for high-dimensional data.

- Neural networks - Model complex non-linear relationships. Typically require large datasets.

Supervised learning is ideal for problems where labeled data is available to train models to predict future observations. Applications include spam filtering, fraud detection, image classification, speech recognition, and more.

Unsupervised Learning

In unsupervised learning, algorithms are provided with unlabeled input data. Their goal is to discover hidden patterns and groupings within the data. Some common unsupervised learning algorithms are:

- Clustering algorithms like k-means - Group data into clusters based on similarity.

- Association rule learning - Discover relationships between variables.

- Principal component analysis - Reduces data dimensionality while retaining most information.

- Neural networks like self-organizing maps - Can perform clustering.

Unsupervised learning is helpful for transactional and behavioral data analysis to segment customers, identify anomalies, perform market research and more.

Reinforcement Learning

In reinforcement learning, algorithms learn to maximize reward through trial-and-error interactions with their environment. Important reinforcement learning algorithms include:

- Q-learning - Learns an action-value function to identify optimal actions.

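- Temporal difference learning - Updates value estimates using the difference between successive predictions (bootstrapping from the estimated value of the next state) instead of waiting for the final outcome.

- Deep Q networks - Use deep neural networks to approximate the Q-learning action-value function, allowing Q-learning to scale to very large state spaces.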

Reinforcement learning has been applied to robotics, game AI, logistics, and some components of self-driving systems. The algorithms learn optimal behavior through ongoing feedback (rewards and penalties) from their environment.
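To make the Q-learning entry concrete, here is a minimal tabular sketch. The environment is a hypothetical five-state corridor with a reward only at the right end, and the learning rate `alpha`, discount `gamma` and exploration rate `epsilon` are illustrative values, not recommendations.

```python
import random

N_STATES = 5          # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)    # move left or move right

def step(state, action):
    """Toy environment: reward 1.0 for reaching the rightmost state, else 0."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, occasionally explore.
            action = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Q-learning update toward the target r + gamma * max_a' Q(s', a').
            target = reward if done else reward + gamma * max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)]
print(policy)   # learned action in each non-terminal state
```

Because the update uses the maximum over next actions rather than the action actually taken, Q-learning is off-policy: it learns the greedy policy while behaving epsilon-greedily. In this corridor the learned policy should be "move right" in every state.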

Bias-Variance Tradeoff in Machine Learning

An important concept in machine learning is the bias-variance tradeoff. Bias refers to error due to oversimplifying the model. Variance refers to error due to excessive model sensitivity.

- High bias, low variance models - Tend to underfit training data. Have high error on training and test data. Cannot capture complexity in the data.

- High variance, low bias models - Tend to overfit training data. Have low training error but high test error. Adapt too closely to noise in training data.

- Low bias, low variance models - Generalize well to new data. Have low error on both training and test data. Balance model complexity with generalizability.

Tuning model hyperparameters helps control the balance between bias and variance. For example, increasing model complexity can reduce bias but increase variance. Regularization methods, such as L1/L2 weight penalties or dropout in neural networks, help reduce variance.

Evaluating Machine Learning Models

To measure performance, machine learning models are evaluated on an unseen test dataset after training. Common evaluation metrics include:

- Accuracy - Fraction of correct predictions. Used for classification.

- Precision - Fraction of positive predictions that are correct.

- Recall - Fraction of actual positives correctly predicted.

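- F1 score - Harmonic mean of precision and recall, which balances the two.

- Mean absolute error (MAE) - Average absolute difference between predicted and actual values. Used for regression.

- Root mean squared error (RMSE) - Square root of the average squared error; penalizes larger errors more heavily than MAE. Used for regression.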

- Area under ROC curve (AUC) - Model's ability to distinguish classes. Used for classification.

Based on the problem, metrics are chosen to optimize the models for application-specific needs like high recall for fraud detection or low RMSE for demand forecasting.
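The metric definitions above can be made concrete in a few lines of plain Python. This is a sketch assuming binary labels with `1` as the positive class; the example labels and values are made up. Libraries such as scikit-learn provide tested implementations of these metrics.

```python
import math

def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)   # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)   # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)   # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

def regression_metrics(y_true, y_pred):
    """MAE and RMSE; RMSE weights large errors more heavily because errors are squared."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse

# accuracy 0.5, precision 2/3, recall 0.5, F1 4/7 (about 0.571)
print(classification_metrics([1, 1, 1, 1, 0, 0], [1, 1, 0, 0, 1, 0]))
# MAE 1.5, RMSE sqrt(4.5) (about 2.12): the single error of 4 pulls RMSE above MAE
print(regression_metrics([3.0, 5.0, 2.0, 8.0], [2.0, 5.0, 3.0, 4.0]))
```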

Examples of Machine Learning Applications

Here are some examples of machine learning applications across different industries:

Computer Vision

- Image classification - Label images based on objects contained in them. Used by social media apps to detect inappropriate content.

- Object detection - Locate instances of objects like stop signs in images or videos. Enables self-driving cars to detect obstacles.

- Image segmentation - Assign pixel-wise labels to images to isolate objects or regions of interest. Used in medical imaging analysis.

- Face recognition - Match faces in images or videos to identity of persons. Used for surveillance and security systems.

Powerful computer vision models like convolutional neural networks are trained on large labeled datasets of images. Data augmentation techniques like flipping, cropping and adding noise to existing images help create more training data.

Natural Language Processing

- Sentiment analysis - Automatically detect positive, negative or neutral sentiment in text data like social media, surveys and reviews. Used by companies to monitor brand and product perception.

- Language translation - Translate text from one language to another. Used by companies to provide content and services to global users.

- Speech recognition - Transcribe and convert human speech into text. Powers voice assistants like Alexa, Siri and Google Assistant.

- Text generation - Generate coherent paragraphs of text based on a prompt. Used to automatically generate news stories or long-form content.

NLP models use word embeddings to represent words numerically as vectors. Recurrent neural networks such as LSTMs, as well as attention mechanisms, enable learning from sequential data like text.

Recommender Systems

- Product recommendations - Suggest products like retail items, movies or jobs to users based on their interests. Used heavily by Amazon, Netflix, LinkedIn.

- Similar content suggestions - Recommend similar articles, videos or posts based on current item. Used by YouTube, Facebook, Twitter.

Collaborative filtering analyzes patterns across user behaviors and items to model preferences. Content-based filtering relies on attributes of items themselves. Hybrid recommender systems combine both.
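A minimal sketch of item-based collaborative filtering on implicit feedback (purchases rather than ratings). The purchase data and function names are hypothetical, and the similarity between two items is taken to be the cosine similarity of their binary "who bought it" vectors, which is one common choice among several.

```python
import math
from collections import defaultdict

# Hypothetical purchase histories: user -> set of items bought.
purchases = {
    "u1": {"tent", "sleeping_bag", "stove"},
    "u2": {"tent", "sleeping_bag"},
    "u3": {"tent", "stove"},
    "u4": {"novel", "bookmark"},
    "u5": {"novel", "bookmark", "reading_lamp"},
}

def users_by_item(purchases):
    """Invert the data: item -> set of users who bought it."""
    index = defaultdict(set)
    for user, items in purchases.items():
        for item in items:
            index[item].add(user)
    return index

def cosine(a, b):
    """Cosine similarity of two binary user vectors represented as sets of user ids."""
    return len(a & b) / math.sqrt(len(a) * len(b))

def recommend(user, purchases, top_n=1):
    index = users_by_item(purchases)
    owned = purchases[user]
    scores = {}
    for candidate, buyers in index.items():
        if candidate in owned:
            continue
        # Score = total similarity between the candidate and everything the user already has.
        scores[candidate] = sum(cosine(buyers, index[item]) for item in owned)
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("u2", purchases))   # ['stove']: bought by other tent and sleeping-bag buyers
```

No item attributes are used anywhere; the recommendation comes purely from overlapping user behavior, which is what distinguishes collaborative filtering from content-based filtering.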

Fraud Detection

- Credit card fraud - Detect anomalous transactions to identify stolen cards or data. Saves costs for banks.

- Identity theft - Detect use of stolen consumer information to open fraudulent accounts.

- Insurance fraud - Identify suspicious claims like staged accidents to prevent bad payouts.

Supervised classifiers trained on historical examples of legitimate and fraudulent transactions can score new transactions and flag likely fraud. Features are engineered from transaction metadata like time, location, accounts, etc.

Predictive Maintenance

- Factory equipment diagnostics - Continuously monitor sensors on industrial machines to detect early warning signs of failure. Enables proactive maintenance.

- Infrastructure monitoring - Analyze large amounts of sensor data from roads, bridges, energy grids etc. to identify maintenance needs.

Time series models like ARIMA, as well as regression models, are trained to detect anomalies in sensor data and predict the deterioration of failure-prone components.
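An ARIMA model is beyond a short example, so this sketch swaps in a much simpler technique, a rolling z-score, to illustrate flagging sensor readings that deviate sharply from recent history. The vibration readings, the window of 5 readings and the threshold of 3 standard deviations are illustrative assumptions.

```python
import statistics

def rolling_zscore_anomalies(readings, window=5, threshold=3.0):
    """Flag readings more than `threshold` standard deviations away from the mean
    of the previous `window` readings. Returns (index, value, z) tuples."""
    anomalies = []
    for i in range(window, len(readings)):
        history = readings[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            continue  # flat history: z-score is undefined
        z = (readings[i] - mean) / stdev
        if abs(z) > threshold:
            anomalies.append((i, readings[i], round(z, 1)))
    return anomalies

# Hypothetical vibration sensor readings with one spike.
vibration = [0.50, 0.52, 0.49, 0.51, 0.50, 0.52, 0.48, 0.51, 1.40, 0.50, 0.49]
print(rolling_zscore_anomalies(vibration))   # flags only index 8 (the 1.40 spike)
```

One known weakness of this simple approach: once a spike enters the window, it inflates the standard deviation and can mask further anomalies for the next few readings.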

Customer Churn Prediction

- Telecom churn - Predict customers likely to cancel phone or internet service. Enables targeted retention campaigns.

- Subscription churn - Forecast which users are likely to cancel online subscriptions like music or SaaS apps.

Features related to usage, engagement, support tickets, payments etc. are used to train classifiers on past customer behavior data to identify likely churners.

These examples demonstrate the wide variety of domains where machine learning is applied today. The key steps are identifying the business problem and relevant data sources, then matching the appropriate ML techniques.

Overview of Artificial Intelligence (AI)

Artificial intelligence is the broader field of studying how to achieve intelligence through computation. AI research aims to develop systems that exhibit characteristics associated with human intelligence like reasoning, learning, problem-solving, perception, creativity and more.

Some key components of AI include:

- Machine learning - Algorithms that can improve with data and experience. Subset of AI.

- Deep learning - Machine learning using neural networks with many layers, loosely inspired by the brain.

- Natural language processing (NLP) - Processing and generating human language.

- Robotics - Creating intelligent autonomous machines.

- Computer vision - Algorithms to process and analyze visual data.

- Expert systems - Encode human domain knowledge as explicit logical rules and apply them to reach decisions.

- Planning - Sequential decision making using models to optimize outcomes.

- Speech recognition - Systems that can process human speech.

Artificial general intelligence (AGI) is the hypothetical ability of an AI system to understand and reason across multiple cognitive domains like a human. No technology has achieved true AGI yet. Current systems exhibit narrow or weak AI - intelligence limited to specific domains only.

AI applications are becoming increasingly common in our lives through virtual assistants, smartphone apps, recommendation engines, computer vision, autonomous vehicles and more. The goal is for machines to assist humans by automating repetitive work and augmenting human capabilities.

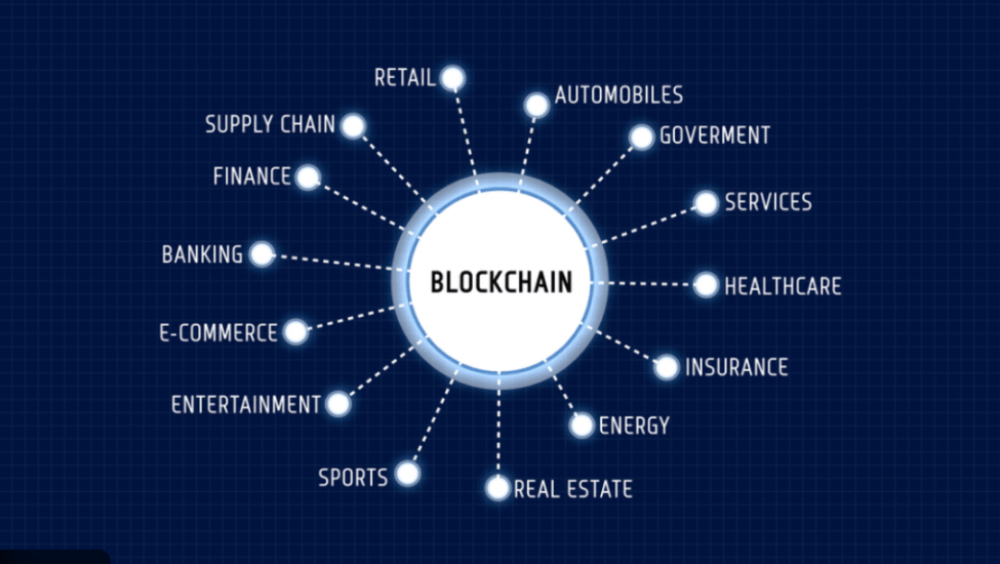

Machine Learning and AI Use Cases By Industry

Here are some examples of innovative ways machine learning and AI are being leveraged across various industries:

Healthcare

- Identify disease risk factors from patient data to improve preventive care

- Automate analysis of medical scans and tests for faster diagnosis

- Develop treatment plans and recommend medications tailored to patients

- Enable elderly monitoring solutions using computer vision and wearables

- Speed up new drug development through computational drug discovery

Finance

- Detect patterns of fraud and money laundering in large transaction datasets

- Build robo-advisors that provide automated investment advice and portfolio management

- Analyze news and data sources to generate trading signals that aim to outperform the market

- Personalize financial product recommendations for banking customers

- Automate routine processes like loan underwriting, accounting and compliance audits

Transportation

- Pilot self-driving vehicles using computer vision, localization and mapping

- Optimize traffic management and plan efficient transportation routes

- Predict vehicle maintenance needs from engine data to minimize downtime

- Improve supply chain and logistics operations using predictive analytics

- Provide personalized transit recommendations for ridesharing and public transport

Agriculture

- Monitor crop, soil and climate conditions using drones and sensors to boost yields

- Leverage spectral imaging to automatically detect plant diseases and infestations

- Track livestock biometrics and behavior using wearables for health monitoring

- Run autonomous farm equipment like tractors and harvesters

- Match crops to ideal growing conditions using predictive analytics

Retail

- Recommend products to customers based on purchase history, searches and reviews

- Dynamically manage product inventory and pricing using demand forecasting

- Provide virtual shopping experiences using augmented reality

- Optimize staff scheduling and store layouts based on sales patterns

- Reduce customer churn through targeted retention campaigns

Media & Entertainment

- Automatically tag and index large media libraries using vision and language models

- Provide personalized recommendations for movies, music, books, etc.

- Moderate user-generated content like images or comments to reduce toxicity

- Synthesize new media content like text articles, images and videos

- Engage audiences through interactive AI-powered characters

This shows the tremendous potential for leveraging AI across diverse verticals. The key is carefully evaluating use cases where AI can augment human capabilities and drive business value.

Machine Learning Models in Depth

Here is an overview of some of the most popular machine learning models used today:

Linear Regression

Used for predicting continuous target variables. Fits a linear equation between the features and target:

$$y = b_0 + b_1 x_1 + b_2 x_2 + \dots$$

Fits the coefficients by minimizing the sum of squared residuals between actual and predicted values (ordinary least squares). Easy to implement and interpret, but assumes a linear relationship between features and target, which often does not hold.
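For a single feature, ordinary least squares has a closed-form solution: the slope is the covariance of x and y divided by the variance of x, and the intercept makes the line pass through the means. A minimal sketch with made-up data (with multiple features, one would instead solve the normal equations or use a library):

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = b0 + b1 * x (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))   # co-variation of x and y
    sxx = sum((x - mean_x) ** 2 for x in xs)                         # variation of x
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x    # the fitted line passes through (mean_x, mean_y)
    return b0, b1

# Hypothetical, roughly linear data.
xs = [1, 2, 3, 4, 5]
ys = [3.1, 4.9, 7.2, 8.8, 11.0]
b0, b1 = fit_simple_linear_regression(xs, ys)
print(f"y = {b0:.2f} + {b1:.2f} * x")   # y = 1.09 + 1.97 * x
```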

Logistic Regression

Used for binary classification problems. Models the probability of the target class using the logistic sigmoid function:

$$p = \frac{1}{1 + e^{-(b_0 + b_1 x_1 + b_2 x_2 + \dots)}}$$

Can handle multiple numeric features, and categorical features once they are encoded (e.g., one-hot encoding). Fast to train but assumes a linear decision boundary.
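A minimal sketch of fitting logistic regression with one feature by batch gradient descent on the mean log loss. Gradient descent is just one way to fit the coefficients (libraries typically use other optimizers), and the data, learning rate and epoch count here are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.1, epochs=2000):
    """Batch gradient descent on the mean log loss for p = sigmoid(b0 + b1 * x)."""
    b0 = b1 = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad0 = grad1 = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(b0 + b1 * x) - y   # gradient of log loss w.r.t. the linear term
            grad0 += error
            grad1 += error * x
        b0 -= lr * grad0 / n
        b1 -= lr * grad1 / n
    return b0, b1

# Hypothetical data: hours studied -> passed (1) or failed (0).
hours = [0.5, 1.0, 1.5, 2.0, 3.0, 3.5, 4.0, 5.0]
passed = [0, 0, 0, 1, 0, 1, 1, 1]
b0, b1 = train_logistic_regression(hours, passed)
print(f"P(pass | 1.0 hours) = {sigmoid(b0 + b1 * 1.0):.2f}")
print(f"P(pass | 4.5 hours) = {sigmoid(b0 + b1 * 4.5):.2f}")
```

The decision boundary is the single point where `b0 + b1 * x = 0`; with more features it becomes a hyperplane, which is the linear-boundary assumption mentioned above.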

Decision Trees

Build a tree-structured model that divides the feature space into partitions using simple decision rules. Can capture non-linear relationships.

Prone to overfitting on training data. Ensemble methods like random forest improve results by combining multiple trees.
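The text does not name a splitting criterion; Gini impurity (used by CART-style trees) is one common choice, with entropy as a frequent alternative. This sketch shows how a tree might choose a threshold on one numeric feature, using hypothetical age and purchase data.

```python
def gini(labels):
    """Gini impurity: 1 - sum_k p_k^2 (0 means the node is pure)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(xs, ys):
    """Try a threshold between each pair of adjacent sorted values and return the
    (threshold, weighted impurity) pair with the lowest weighted Gini impurity."""
    pairs = sorted(zip(xs, ys))
    best = (None, gini(ys))          # no split at all
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates equal feature values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if impurity < best[1]:
            best = (threshold, impurity)
    return best

ages = [22, 25, 30, 35, 40, 52, 60]
bought = ["no", "no", "no", "yes", "yes", "yes", "yes"]
print(best_split(ages, bought))   # (32.5, 0.0): "age <= 32.5" separates the classes perfectly
```

A full tree applies this search recursively to each child node; without limits on depth or leaf size it keeps splitting until leaves are pure, which is exactly how it overfits.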

Support Vector Machines (SVM)

Find optimal hyperplane in feature space that separates classes with maximum margin. Use kernel functions to transform data for non-linear cases.

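Powerful for complex data, but kernel SVMs don't scale well to large datasets. Because the boundary depends only on the support vectors, points far from it have no influence; a soft margin (controlled by the regularization parameter C) limits the effect of outliers near or across the boundary.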

Naive Bayes

Applies Bayes' theorem to model the probability of each class, assuming that features are conditionally independent given the class. Simple but surprisingly effective for many problems, such as text classification.

Performs online updates efficiently as data comes in. However, the independence assumption is often not valid in real data.
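A minimal sketch of a multinomial Naive Bayes text classifier with Laplace (add-one) smoothing. Training only increments counts, which is why online updates are cheap. The class name and the tiny spam/ham corpus are hypothetical.

```python
import math
from collections import Counter, defaultdict

class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes with add-one smoothing; `update` can be called at any time."""

    def __init__(self):
        self.class_counts = Counter()             # documents seen per class
        self.word_counts = defaultdict(Counter)   # word frequencies per class
        self.vocab = set()

    def update(self, text, label):
        words = text.lower().split()
        self.class_counts[label] += 1
        self.word_counts[label].update(words)
        self.vocab.update(words)

    def predict(self, text):
        words = text.lower().split()
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # log P(class) + sum of log P(word | class): words treated as independent given the class
            score = math.log(n_docs / total_docs)
            total_words = sum(self.word_counts[label].values())
            for w in words:
                count = self.word_counts[label][w]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

clf = NaiveBayesTextClassifier()
for text, label in [("win a free prize now", "spam"),
                    ("free money click now", "spam"),
                    ("meeting agenda for monday", "ham"),
                    ("lunch on monday", "ham")]:
    clf.update(text, label)
print(clf.predict("free prize"))       # spam
print(clf.predict("monday meeting"))   # ham
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, and the add-one smoothing keeps an unseen word from zeroing out a class.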

K-Nearest Neighbors

Non-parametric algorithm where new data points are classified based on similarity with k closest neighbors in training data.

Simple to implement but requires storing the entire training dataset, and prediction can be slow on large datasets. Performance depends heavily on the distance metric and the choice of k.
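A minimal k-nearest-neighbors classifier using Euclidean distance and majority voting. The training points are hypothetical; note that there is no training step beyond keeping the data, and every prediction scans the whole training set.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among the k nearest training points
    (Euclidean distance). `train` is a list of (features, label) pairs."""
    neighbors = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical 2-D training data for two classes.
train = [((1.0, 1.0), "red"), ((1.5, 2.0), "red"), ((2.0, 1.5), "red"),
         ((6.0, 6.0), "blue"), ((6.5, 7.0), "blue"), ((7.0, 6.5), "blue")]
print(knn_predict(train, (2.0, 2.0)))   # red
print(knn_predict(train, (6.0, 7.0)))   # blue
```

Choosing an odd k avoids voting ties in two-class problems; features on very different scales should usually be normalized first, since the distance metric treats all dimensions equally.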

Clustering Algorithms

Group similar data points together without any labels. K-means is simple and popular. Hierarchical and density-based methods like DBSCAN also exist.

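Useful for exploratory analysis, but the resulting clusters have no built-in labels and must be interpreted by a person. For k-means, the number of clusters must be chosen in advance, and the choice strongly affects the results.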
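A minimal sketch of k-means (Lloyd's algorithm). For a deterministic example it assumes a simple farthest-point initialization; many libraries use k-means++ instead. The points are hypothetical.

```python
import math

def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point farthest from
    all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids

def kmeans(points, k, max_iterations=100):
    """Lloyd's algorithm: assign each point to its nearest centroid, then move each
    centroid to the mean of its assigned points, until the centroids stop changing."""
    centroids = farthest_point_init(points, k)
    for _ in range(max_iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        new_centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break   # converged: centroids no longer move
        centroids = new_centroids
    return centroids, clusters

points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (8.0, 8.0), (8.2, 7.9), (7.8, 8.1)]
centroids, clusters = kmeans(points, k=2)
print([tuple(round(v, 2) for v in c) for c in centroids])   # [(1.03, 0.97), (8.0, 8.0)]
```

Here `k=2` is supplied by hand, which illustrates the point above: the algorithm will return exactly as many clusters as requested, whether or not that number suits the data.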

Neural Networks

Model complex non-linear relationships using interconnected neurons organized in layers. Typically require large amounts of training data.

Powerful capabilities but act as black-boxes. Many hyperparameters to tune. Deep learning methods are popular today.

This covers some of the most useful algorithms for supervised learning (regression, classification) and unsupervised learning (clustering) tasks.

Deep Learning Models

Deep learning uses neural networks with multiple hidden layers to learn hierarchical feature representations directly from the data. Some important deep learning models include:

Convolutional Neural Networks (CNNs)

- Used for computer vision tasks like image classification and object detection

- Apply convolutional filters to extract spatial features from images

- Add pooling layers to reduce dimensions while retaining salient features
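The convolution and pooling steps above can be sketched in plain Python. Strictly speaking, what deep learning libraries call convolution is usually cross-correlation (the kernel is not flipped), and that is what this sketch computes. The image and the vertical-edge kernel are hypothetical; in a real CNN the kernel weights are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation): slide the kernel over the
    image without padding and take a weighted sum at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size block."""
    return [[max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]

# A 5x5 image with a vertical edge: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-made vertical-edge detector (learned, not hand-made, in a real CNN).
kernel = [[-1, 1],
          [-1, 1]]
features = conv2d(image, kernel)
print(features)             # [[0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0], [0, 2, 0, 0]]
print(max_pool(features))   # [[2, 0], [2, 0]]
```

The feature map responds only where the edge is, and pooling halves each dimension while keeping that strongest response.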

Recurrent Neural Networks (RNNs)

- Useful for sequential data like text, time series and audio

- Have recurrent connections that process current input conditioned on past context

- LSTM and GRU cells overcome vanishing gradient problems in plain RNNs

Transformers

- Modern alternative to RNNs that replaces recurrence with self-attention mechanisms

- Allow highly parallelized processing of sequential data

- Form the backbone of large pretrained language models like BERT and GPT-3
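The core operation behind Transformers is scaled dot-product attention, softmax(QKᵀ / √d_k)V. This sketch computes it for plain Python lists. As a simplifying assumption, the queries, keys and values are given directly; in a real Transformer they are learned linear projections of the token embeddings, computed in multiple heads. The numbers are hypothetical.

```python
import math

def softmax(xs):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(xs)                              # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)            # how much this query attends to each position
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Hypothetical 2-D keys and values for three positions in a sequence.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, -4.0]]                      # aligns with the first key, opposes the second
print(scaled_dot_product_attention(queries, keys, values))
```

The output is dominated by the first value vector because the query matches the first key best. Every query row is computed independently of the others, which is what allows the parallel processing mentioned above.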

Autoencoders

- Perform unsupervised learning by reconstructing input

- Useful for dimensionality reduction, visualization and denoising

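- Variational autoencoders learn a probabilistic latent space regularized toward a prior distribution, which makes the encoding smoother and lets the decoder generate new samples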

Generative Adversarial Networks (GANs)

- Composed of generator and discriminator neural networks

- The generator tries to create realistic synthetic data to fool the discriminator, while the discriminator learns to tell real data from synthetic data

- Used for image and video generation, image-to-image translation

Deep nets enable end-to-end feature learning from raw data and scale well with big datasets and compute power. However, they can become black-box models sensitive to minor input changes.

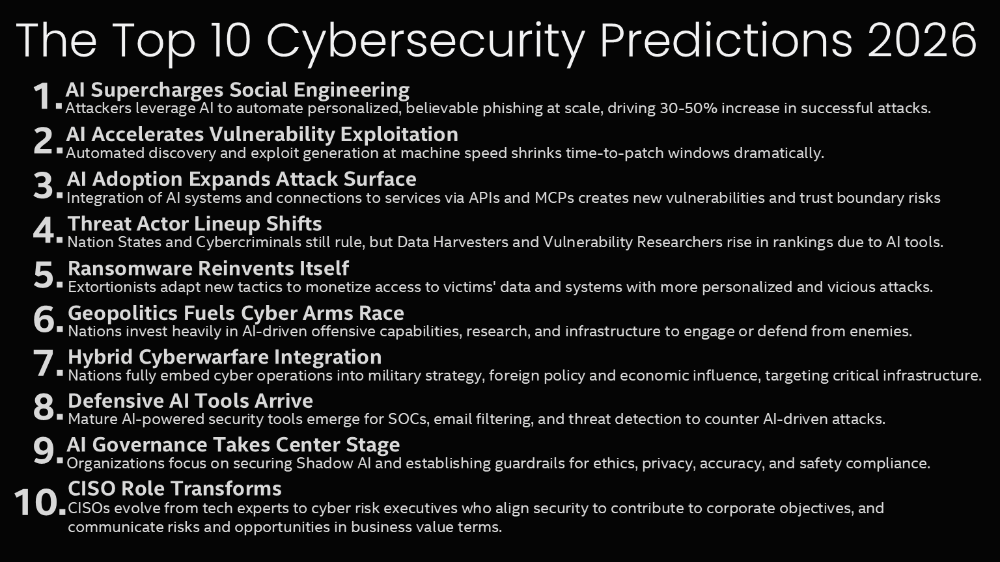

Ethical Considerations in AI

As AI systems become more capable, we must address potential ethical risks:

- Bias - Historical discrimination in data can lead to unfair models. Continual monitoring for skewed model behavior is needed.

- Explainability - Complex models like deep nets are black boxes. Techniques to understand model logic and decisions can improve transparency.

- Data Privacy - Growing reliance on user data raises concerns around consent, sharing and security vulnerabilities.

- Job Loss - Automation may disrupt certain jobs and industries. Policy changes must account for effects on income inequality.

- Fairness - Models should not unfairly discriminate based on race, gender or membership in other disadvantaged groups when making high-impact decisions.

- Safety - Autonomous systems like self-driving cars and robots interacting with humans pose physical risks if not thoroughly tested.

- Control - Greater reliance on AI requires ensuring alignment with human values and oversight against unintended harms.

To build trust in AI, companies and institutions must make ethical considerations a top priority.