The Great AI Scare
The real risks of AI are not what you think. Sci-fi occultism has spread to the

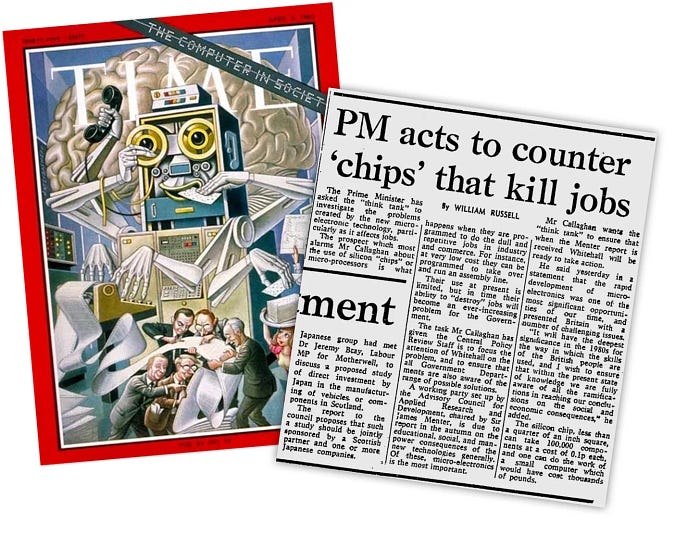

Press from the 1970s about computers automating jobs. Courtesy of pessimistarchive.org

Pocket telephones, like most human inventions, have had a rocky history. They were first intended for military use, and by November 1992, ten million Americans had bought their first cell phone. But a few months later, a man called David Reynard appeared on Larry King Live, a popular talk show at the time, and the history of the cell phone took a new direction.

Reynard was grieving his wife, who had died of cancer. In an emotional appearance, he claimed that her brain tumour had been caused by her mobile phone. The broadcast unleashed widespread public fear about cell phones that lasted for decades. In this way, Mr Reynard's story set back cell phone adoption by months, if not years.

We’re confident now that mobile phones do not cause brain cancer. But in recent weeks, a new wave of fear has swept through the media, this time related to artificial intelligence.

Future generations may remember it as The Great AI Scare. Led by a small cohort of Silicon Valley tycoons and insiders, the discussion is being framed as an existential, pivotal moment not just for humanity, but for life on earth.

The hypothesised risks range from the modest and sensible to the outlandish and bizarre. However, in this article, I will show that they are all bogus.

I will discuss the three main concerns surrounding AI (a sci-fi apocalypse, mass unemployment, and mind-controlling propaganda) and show that they are unfounded. Just as with mobile phones in the 1990s, or automobiles in the 1920s, fantastical disasters are unlikely to come true.

If you’re interested in AI and the financial markets, consider joining us at Sharestep, an AI tool that transforms the stock market into a marketplace of ideas.

Bogus risk I: sci-fi apocalypse

The trouble with proving that outlandish predictions are nonsense is that claims about the future cannot be definitively disproved, however strange they seem. For example, if you tell me we will soon be living in underwater cities, I suspect that you're wrong, but I can't prove it.

In such cases, it is usually better to question the person making the prediction than the prediction itself. Ad hominem: question the person, not the argument. Is the person credible, competent and sound of mind? Does he have flaws that might affect his judgement? Why does he claim to have knowledge about the future?

Ad hominem is sometimes the best way. Courtesy of Sheepforcomics

Let’s talk about sci-fi occultism. Sci-fi occultism, broadly, is the use of scientific rhetoric and language to argue non-scientific, non-falsifiable theories and hypotheses, which are often sensational and outlandish. As a technique, it gives the sci-fi fantasist carte blanche in the claims he can make about the future.

The high priests of sci-fi occultism include Nick Bostrom, a philosopher at Oxford University, and Ray Kurzweil, a self-described futurist who, to be polite, has made a career out of padding his résumé of achievements.

Anyone familiar with the high priests of sci-fi occultism will have found themselves intuiting something primordial. It's a feeling we often have as children, but that we experience less as adults. It's that feeling that something is not right with another child — that their mind is not well-aligned.

When Bostrom or Kurzweil talk, that same feeling arises — that sense that the man who speaks is off-road, on a different lane. Or as grandmothers say: in his own world.

But one person is worth mentioning above all others now: the man of the moment amongst preachers of techno end-times.

Enter Eliezer Yudkowsky, an elite Silicon Valley influencer.

He describes himself as an autodidact (a middle-class euphemism for not completing one's education), and has a colourful history with techno-futurism. He has worked closely with Ray Kurzweil and Nick Bostrom, and is on speaking terms with most of the Silicon Valley tycoons. In the last ten years, he has done more than anyone else to spread tales of AI doom amongst the wealthy idlers of Silicon Valley.

Like Kurzweil, he used to preach The Singularity, a quasi-religious prophecy of the moment when machine intelligence would surpass biological intelligence, leading to utopia.

Since then, his outlook on technology has soured — his current view is that AI machines will soon break out from data centres to exterminate us. Recently, in Time Magazine, he advocated the bombing of data centres to prevent this.

Yudkowsky had a strict religious upbringing. Probably, his religious training influenced his occultist convictions. For instance, Moloch, a satanic entity from the Hebrew Bible, has a leading role in his writing on AI, and his predictions about its potential.

Most revealingly, Yudkowsky is a science fiction fan. He often references Star Wars, Star Trek and Dune, weaving them into discussions and essays that are apparently serious. Comic book franchises, such as Batman, are also amongst his favoured references.

In a nutshell, Yudkowsky, like Kurzweil and Bostrom, is a quack. He has not learned to separate the fantastical worlds that he loves from the ordinary one that he lives in.

Star Trek makes far more sense if it’s secretly a culturally translated story about wizards with sufficiently advanced magic. It was just reworded as “technology” so we muggles could relate.

— Eliezer Yudkowsky

I could write something much longer about how senseless it is to make any predictions about the future, never mind ones drawn from fictional entertainments and religious paranoia. But there's no need. As I said, it is often better to question the person than the argument. By that standard, you can rest assured that forecasts of an AI apocalypse are bogus.

Eliezer Yudkowsky, Sam Altman (CEO of OpenAI) and Grimes (Elon Musk’s ex-partner) at a party.

Bogus risk II: mass unemployment

More grounded in reality is the fear of mass unemployment, or at the very least, chaotic, unpredictable changes in the jobs market.

Now — it is understandable for people to fear the automation of their skills. I fear this too. But these predictions are also unlikely to come true.

At the moment, in most developed nations, we are experiencing the opposite of mass unemployment. Central banks are hiking interest rates because there are too many jobs, which is another way of saying there are not enough workers. In other words, companies are trying to hire people, but can't find enough of them. Hence rising inflation, rising wages, and a full-employment economy.

In the last year, automation stocks have outperformed the Nasdaq. Find out more at Sharestep

For the foreseeable future, this is not going to change. Birth rates are falling and people are living longer in retirement, which drives up what economists call the dependency ratio (the number of people of all ages who don't work, divided by the number of people who do). Meanwhile, we still need human hands for essential tasks such as restocking supermarket shelves or picking farmed fruit.
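To make the arithmetic behind the dependency ratio concrete, here is the definition above written as a fraction, followed by a toy example. The numbers are purely illustrative assumptions (a hypothetical population of 150 people), not real demographic data.

$$
\text{dependency ratio} = \frac{\text{people not working}}{\text{people working}}
$$

$$
\frac{50}{100} = 0.5 \quad\longrightarrow\quad \frac{50 + 10}{100 - 10} = \frac{60}{90} \approx 0.67
$$

If ten workers retire and, because fewer children are being born, no new workers arrive to replace them, the numerator grows and the denominator shrinks at the same time, so the ratio rises on both counts.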

But what about the laptop professions? Aren’t they at risk? Not really. At the time of writing, we have not managed to teach computers to browse and interact with websites competently. There is no AI currently that can solve even trivial tasks, like discovering profiles on LinkedIn, or manipulating a graphical operating system. Whatever the online influencers have told you, ChatGPT cannot do any of this.

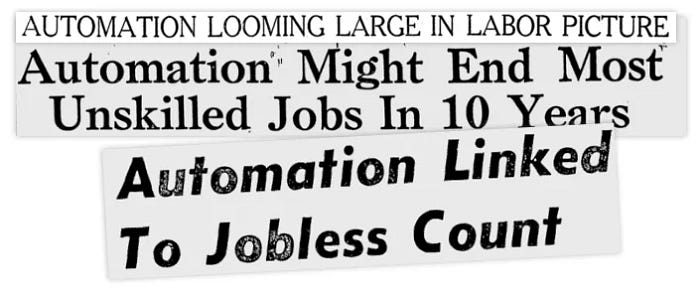

The business world has been predicting huge layoffs via automation for decades now. And although business leaders might not be consciously aware of it, there has always been an element of wishful thinking at play. Because really, which CEO, at some level, doesn't dream of a fleet of well-behaved, wageless machines to cut down his operating costs? But these hopes have never played out in practice.

Headlines from the 1960s about job automation. Courtesy of Louis Anslow

Bogus risk III: mind-control and propaganda

The most realistic concern surrounding AI is that large amounts of sophisticated, politically motivated messaging could make its way onto the internet. Such messaging could be driven by national politics or geopolitics, and could put social cohesion and stability at risk.

Now — if companies like Google, Microsoft, Twitter and Meta become the gatekeepers of artificial intelligence, such scenarios are plausible. After all, those companies already control the distribution channels — give them control of production too, at industrial scale, and they have everything they need to cause problems.

However, the devil is in the details, and in the end, this scenario is unlikely to unfold.

Distributors of online media have experienced extraordinary pushback and challenges in recent years. Lawmakers in the U.S. and the European Union are becoming bolder in demanding restrictions on data sharing, and are asking for more transparency in the activities of media companies.

Meanwhile, millions of people have become acutely suspicious of social media platforms and are questioning their value. Under these circumstances, with such levels of distrust, it is unlikely that any media company will be inclined to execute nefarious AI plans, at least in the near term.

It would certainly help to mitigate this risk if we denied these companies privileged status with AI. We should not grant them regulatory moats and privileges. But in any case, while the risk is there, on the balance of probabilities it is unlikely.

People have been questioning the value of social media. Courtesy of cartoonmovement.com

Where to next?

So if not these, what are the real risks of AI?

This article turned out much longer than I intended. I also wanted to look at less dramatic but more probable risks I see in AI that you have probably not read about elsewhere.

But I’ll have to write that up as a separate piece next week. If you’re interested in reading it, follow me on Medium so you get notified when it’s published.

If you enjoyed this article, pay us a visit at Sharestep. Sharestep lets you translate insights about the world into concrete investment opportunities.

For example, you can query the Sharestep platform with prompts like “existential risk” or “automation of jobs” and get instant investment analysis.

Best of all, it’s free to join!

As always, thanks for reading, and see you next time!