Navigating Murky Waters: How to Find Bad Actors and Moderate Reports Correctly and Fairly


As online platforms and digital communities continue to grow, the challenge of identifying and moderating bad actors has become increasingly complex.

These platforms, each created for its own purpose, are often used as breeding grounds for manipulation.

Whether it's combating spammers ("murky waters," as I call them) with multiple accounts, scam airdrops, copy and paste (copyright infringement), harassment, or other forms of abuse, platform owners and moderators must navigate a delicate balance between preserving free expression and maintaining a safe, inclusive environment.

Achieving this balance requires a multifaceted approach that combines technological solutions with nuanced human judgment.

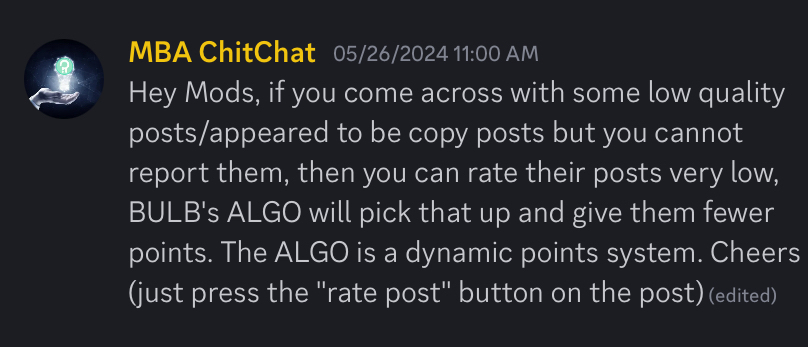

Inspired by @MBA ChitChat, I am writing this educational article on some key strategies for finding bad actors and moderating reports correctly and, most importantly, fairly:

1. Develop Clear Community Standards

The foundation for effective moderation begins with establishing clear, transparent community standards. These guidelines should outline the platform's values, acceptable and unacceptable behaviors, and the consequences for violations. By setting these expectations upfront, you provide a framework for users to understand what is and isn't permitted.

In BulbApp, the Acceptance of Terms section states that content must follow the law and that you are responsible for your own content.

Read the BulbApp Code of Conduct:

https://cdn.bulbapp.io/assets/documents/code_of_conduct.pdf

When crafting your community standards, be sure to solicit input from diverse stakeholders, including your user base, subject matter experts, and civil liberties organizations. This collaborative approach can help ensure your policies are grounded in principles of fairness and respect for human rights.

2. Leverage Automated Detection Tools

While human moderation will always be essential, technology can play a valuable role in surfacing potential issues at scale. Natural language processing, computer vision, and other AI-powered tools can be trained to detect patterns of abuse, hate speech, coordinated disinformation campaigns, and other problematic content.

BULB's algorithm picks up reports and low ratings on posts. This is one example of a detection tool; it works together with human moderators to make moderation as a whole more effective.

However, it's crucial to remember that these automated systems are not infallible. They can produce false positives, miss nuanced context, and perpetuate societal biases. As such, any content flagged by algorithms should be reviewed by trained human moderators, and vice versa, before action is taken.
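To make the "flag first, review second" idea concrete, here is a minimal sketch of how reports and low ratings could feed a human review queue. It is not BULB's actual algorithm: the `Post` fields, the thresholds, and the function names are all assumptions made up for illustration. The point is only that the automated step queues content for a moderator and never removes anything on its own.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REPORT_THRESHOLD = 3        # reports needed to trigger a review
LOW_RATING_THRESHOLD = 2.0  # average rating at or below this triggers a review
MIN_RATINGS = 5             # ignore averages based on very few ratings


@dataclass
class Post:
    post_id: str
    report_count: int
    ratings: list


def needs_human_review(post: Post) -> bool:
    """Return True if the post should be queued for a human moderator."""
    if post.report_count >= REPORT_THRESHOLD:
        return True
    if len(post.ratings) >= MIN_RATINGS:
        average = sum(post.ratings) / len(post.ratings)
        return average <= LOW_RATING_THRESHOLD
    return False


def build_review_queue(posts: list) -> list:
    """Flag posts for review; nothing is removed automatically."""
    flagged = [p for p in posts if needs_human_review(p)]
    # Most-reported posts are reviewed first.
    flagged.sort(key=lambda p: p.report_count, reverse=True)
    return [p.post_id for p in flagged]
```

Requiring a minimum number of ratings is one simple guard against false positives: a single low rating should not be enough to put a post under review.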

3. Empower User Reporting

In addition to proactive detection, user-generated reports can be a powerful source of information about bad actors and harmful content. By making it easy for users to flag issues, you can crowdsource moderation efforts and gain valuable insights into the community's experiences. When designing your reporting systems, consider the following best practices:

- Provide clear, intuitive reporting mechanisms that cover a range of potential violations.

- Offer multiple reporting options, such as in-app forms, email, and online submission portals.

- Ensure reports are reviewed and acted upon in a timely manner.

- Communicate with users about the status of their reports and the outcomes of investigations.

- Protect the privacy and safety of users who submit reports, especially in cases of sensitive or escalated issues.
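The practices above can be sketched as a small report record. This is only an illustration under stated assumptions: the `Report` fields, the `Status` values, and the 48-hour deadline are hypothetical and not taken from any real platform. It shows three of the points from the list: tracking a report's status, checking whether it is being handled in a timely manner, and hiding the reporter's identity from the view a moderator sees.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum
from typing import Optional


class Status(Enum):
    RECEIVED = "received"
    UNDER_REVIEW = "under_review"
    RESOLVED = "resolved"


# Hypothetical service target for handling a report.
REVIEW_DEADLINE = timedelta(hours=48)


@dataclass
class Report:
    report_id: str
    reporter_id: str          # private: never shown to the reported user
    target_post_id: str
    reason: str
    created_at: datetime
    status: Status = Status.RECEIVED
    outcome: Optional[str] = None


def is_overdue(report: Report, now: datetime) -> bool:
    """An unresolved report is overdue once the deadline has passed."""
    if report.status is Status.RESOLVED:
        return False
    return now - report.created_at > REVIEW_DEADLINE


def moderator_view(report: Report) -> dict:
    """What a moderator sees: the reporter's identity is left out."""
    return {
        "report_id": report.report_id,
        "target_post_id": report.target_post_id,
        "reason": report.reason,
        "status": report.status.value,
    }


def status_message(report: Report) -> str:
    """A short update that can be sent back to the reporter."""
    if report.status is Status.RESOLVED:
        return f"Your report {report.report_id} was resolved: {report.outcome}"
    return f"Your report {report.report_id} is {report.status.value}"
```

Leaving `reporter_id` out of the moderator view is a deliberate choice here: the decision should rest on the reported content, and the reporter stays protected.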

4. Leverage Human Moderators with Diverse Expertise

While technology can streamline the moderation process, human judgment remains essential for navigating the complex, ever-evolving landscape of online harms. Invest in building a team of skilled, diverse moderators who can bring varied perspectives and domain expertise to the table.

Some key considerations for assembling an effective moderation team:

- Seek out moderators with backgrounds in fields like digital ethics, human rights, psychology, and social justice.

- Ensure moderators receive comprehensive training on your community standards, decision-making frameworks, and trauma-informed practices. Using BULB as a reference again: to officially become a moderator, you must complete some tasks that test your commitment and accuracy in moderating.

- Implement systems for peer review, knowledge sharing, and continuous learning to foster consistent, well-reasoned decision-making.

- Provide adequate support and resources to help moderators manage the emotional toll of their work.

5. Establish Core Principles

Once you've identified problematic actors or content, it's crucial to have a clear, principled process for recruiting moderators. Integrity, Independence, and Respect are the principles of moderation in BulbApp, and these principles go a long way toward defining effective moderation.

CONCLUSION

Finally, remember that moderation is an ongoing process that requires constant evaluation and improvement. Monitor the effectiveness of your strategies, gather feedback from users and experts, and be willing to adapt your approaches as new challenges and best practices emerge.

This might involve conducting regular audits of your moderation system, analyzing data on the types of reports and enforcement actions, and soliciting input from diverse stakeholders. The goal is to continuously refine your processes to ensure they remain fair, equitable, and effective in the face of a rapidly evolving digital landscape.
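As a rough sketch of what such an audit could look like, the snippet below counts past decisions by report type and by enforcement action, and works out how often each type of report led to removal. The data shape (a list of `(report_type, action)` pairs) and the action label `"removed"` are assumptions for illustration only.

```python
from collections import Counter


def summarize_decisions(decisions: list) -> tuple:
    """Summarize a list of (report_type, action) pairs for an audit."""
    by_type = Counter(report_type for report_type, _ in decisions)
    by_action = Counter(action for _, action in decisions)

    # Share of reports of each type that ended in content removal.
    removal_rate = {}
    for report_type, total in by_type.items():
        removed = sum(
            1 for rt, action in decisions
            if rt == report_type and action == "removed"
        )
        removal_rate[report_type] = removed / total

    return by_type, by_action, removal_rate
```

A report type with a very low removal rate may point to unclear reporting options or to misuse of the report button, while a very high one may deserve a second look for over-enforcement. Either way, the numbers are a prompt for human review, not a verdict.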

Effective moderation is not a one-size-fits-all solution; it requires a nuanced, multifaceted approach that balances technological capabilities, human judgment, and community engagement. By staying committed to the principles of fairness, transparency, and user empowerment, platform owners and moderators can play a vital role in creating safer, more inclusive digital spaces for all.
