The role of computational science in digital twins

The National Academies of Sciences, Engineering, and Medicine (NASEM) recently published a report titled “Foundational Research Gaps and Future Directions for Digital Twins”¹. Driven by broad federal interest in digital twins, the authoring committee explored digital twin definitions, use cases, and the mathematical, statistical, and computational research needed to advance them. This Comment highlights the report’s main messages, focusing on the aspects relevant to the intersection of computational science and digital twins.

Digital twin definition and elements

The NASEM report¹ proposes the following definition of a digital twin, modified from a definition published by the American Institute of Aeronautics and Astronautics²:

“A digital twin is a set of virtual information constructs that mimics the structure, context, and behavior of a natural, engineered, or social system (or system-of-systems), is dynamically updated with data from its physical twin, has a predictive capability, and informs decisions that realize value. The bidirectional interaction between the virtual and the physical is central to the digital twin.”

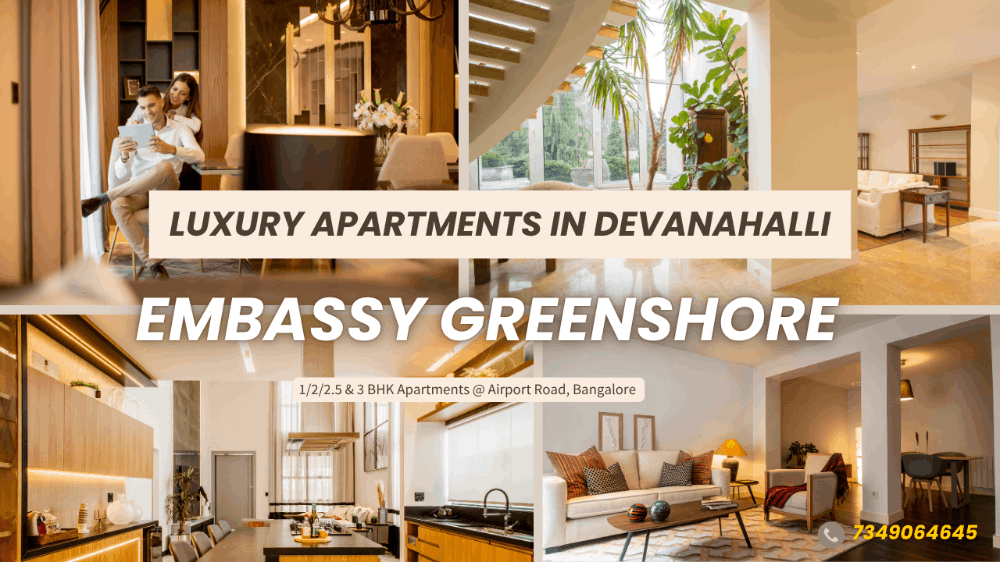

The refined definition refers to “a natural, engineered, or social system (or system-of-systems)” to describe digital twins of physical systems in the broadest possible sense, including the engineered world, natural phenomena, biological entities, and social systems. The definition introduces the phrase “predictive capability” to emphasize that a digital twin must be able to issue predictions beyond the available data to drive decisions that realize value. Finally, the definition highlights the bidirectional interaction, which comprises feedback flows of information from the physical system to the virtual representation to update the latter, and from the virtual representation back to the physical system to enable decision making, whether automated or with humans in the loop (Fig. 1). The notion of a digital twin thus goes beyond simulation to include tighter integration between models, data, and decisions.

Fig. 1: Elements of the digital twin ecosystem.

Information flows bidirectionally between the virtual representation and physical counterpart. These information flows may be through automated processes, human-driven processes, or a combination of the two. Adapted with permission from ref. 1, The National Academies Press.

The bidirectional interaction forms a feedback loop that comprises dynamic data-driven model updating (for instance, sensor fusion, inversion, data assimilation) and optimal decision making (for instance, control and sensor steering). The dynamic, bidirectional interaction tailors the digital twin to a particular physical counterpart and supports the evolution of the virtual representation as the physical counterpart changes or is better characterized. Data from the physical counterpart are used to update the virtual models, and the virtual models are used to drive changes in the physical system. This feedback loop may occur in real time, such as for dynamic control of an autonomous vehicle or a wind farm, or it may occur on slower time scales, such as post-imaging updating of a digital twin and subsequent treatment planning for a cancer patient. The digital twin may provide decision support to a human, or decision making may be shared jointly between the digital twin and a human as a human–agent team. Human–digital twin interactions may rely on a human to design, manage, and/or operate elements of the digital twin, such as selecting sensors and data sources, managing the models underlying the virtual representation, and implementing algorithms and analytics tools.
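To make the feedback loop concrete, the following minimal Python sketch (an illustration under stated assumptions, not a method from the report) tracks a single scalar state, such as a temperature. The physical-to-virtual flow is a one-dimensional Kalman filter update, and the virtual-to-physical flow is a proportional control law. The function names, the prior, the noise variances, and the gain `k_p` are all hypothetical, and the physical counterpart is stood in for by a fixed list of sensor readings rather than a system that actually responds to the control action.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Belief:
    """Gaussian belief held by the virtual representation about a scalar state."""
    mean: float
    var: float


def predict(belief: Belief, control: float, process_var: float) -> Belief:
    """Propagate the belief through an assumed model x[k+1] = x[k] + u[k] + noise."""
    return Belief(belief.mean + control, belief.var + process_var)


def assimilate(belief: Belief, observation: float, obs_var: float) -> Belief:
    """Physical-to-virtual flow: scalar Kalman update with one sensor reading."""
    gain = belief.var / (belief.var + obs_var)
    return Belief(belief.mean + gain * (observation - belief.mean),
                  (1.0 - gain) * belief.var)


def decide(belief: Belief, setpoint: float, k_p: float = 0.5) -> float:
    """Virtual-to-physical flow: proportional control action toward a setpoint."""
    return k_p * (setpoint - belief.mean)


def run_loop(observations: Sequence[float], setpoint: float,
             obs_var: float = 0.25,
             process_var: float = 0.01) -> List[Tuple[float, float, float]]:
    """Run predict -> assimilate -> decide once per incoming observation."""
    belief = Belief(mean=0.0, var=1.0)  # hypothetical prior
    control = 0.0
    history = []
    for y in observations:
        belief = predict(belief, control, process_var)  # virtual model step
        belief = assimilate(belief, y, obs_var)         # physical -> virtual
        control = decide(belief, setpoint)              # virtual -> physical
        history.append((belief.mean, belief.var, control))
    return history
```

Swapping the proportional rule for an optimizer, or the scalar filter for an ensemble method, would keep the same predict, assimilate, decide structure.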

An important theme that runs throughout the report is the notion that the digital twin be “fit for purpose,” meaning that model types, fidelity, resolution, parameterization, frequency of updates, and quantities of interest be chosen, and in many cases dynamically adapted, to fit the particular decision task and computational constraints at hand. Implicit in tailoring a digital twin to a task is the notion that an exact replica of a physical asset is not always necessary or desirable. Instead, digital twins should support complex tradeoffs of risk, performance, computation time, and cost in decision making. An additional consideration is the complementary role of models and data — a digital twin is distinguished from traditional modeling and simulation in the way that models and data work together to drive decision making. In cases in which an abundance of data exists and the decisions fall largely within the realm of conditions represented by the data, a data-centric view of a digital twin is appropriate. In cases that are data-poor and call upon the digital twin to issue predictions in extrapolatory regimes that go well beyond the available data, a model-centric view of a digital twin is appropriate — a mathematical model and its associated numerical model form the core of the digital twin, and data are assimilated through these models.
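One simple way to read “fit for purpose” computationally, assuming each candidate model comes with an a priori error estimate and a cost estimate, is to select the cheapest model that meets the task’s accuracy tolerance within its time budget, and to repeat the selection whenever the task or constraints change. The model catalog and all numbers below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelOption:
    name: str
    est_error: float   # assumed a priori error estimate for the quantity of interest
    runtime_s: float   # assumed wall-clock cost per evaluation, in seconds


def select_model(options: Sequence[ModelOption], tolerance: float,
                 time_budget_s: float) -> Optional[ModelOption]:
    """Return the cheapest option that is accurate enough and fast enough, or None."""
    feasible = [m for m in options
                if m.est_error <= tolerance and m.runtime_s <= time_budget_s]
    return min(feasible, key=lambda m: m.runtime_s, default=None)


# Hypothetical catalog spanning fidelity levels.
catalog = [
    ModelOption("high-fidelity PDE", est_error=0.01, runtime_s=3600.0),
    ModelOption("reduced-order model", est_error=0.05, runtime_s=2.0),
    ModelOption("ML surrogate", est_error=0.15, runtime_s=0.01),
]
```

With this catalog, a real-time control task with a loose tolerance (0.2, 0.1 s) would select the ML surrogate, whereas an offline planning task with a tight tolerance and a generous budget (0.02, 7200 s) would select the high-fidelity model; a `None` result signals that no available model is fit for that purpose.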

Computational science challenges and opportunities

Substantial foundational mathematical, statistical, and computational research is needed to bridge the gap between the current state of the art and aspirational digital twins.

Virtual representation

A fundamental challenge for digital twins is the vast range of spatial and temporal scales that the virtual representation may need to address. In many applications, the scale at which computations are feasible is too coarse to resolve key phenomena and does not achieve the fidelity needed to support decisions. Different applications of digital twins drive different requirements for modeling fidelity, data, precision, accuracy, visualization, and time-to-solution, yet many potential uses of digital twins are currently intractable with existing computational resources. Investments in both computing resources and mathematical and algorithmic advances are necessary to close the gap between what can be simulated and what is needed to achieve trustworthy digital twins.

Particular areas of importance include multiscale modeling, hybrid modeling, and surrogate modeling. Hybrid modeling combines empirical and mechanistic modeling approaches to leverage the strengths of both data-driven and model-driven formulations. Combining data-driven models with mechanistic models requires effective coupling techniques that facilitate the flow of information while accounting for the inherent constraints and assumptions of each model. More generally, models of different fidelity may be employed across various subsystems, their assumptions may need to be reconciled, and multimodal data from different sources must be synchronized. Overall, simulations for digital twins will likely require a federation of individual simulations, rather than a single, monolithic software system, that must be integrated into a full digital twin ecosystem. Aggregating risk measures and quantifying uncertainty across multiple, dynamic systems is nontrivial and requires scaling existing methods.
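The hybrid modeling idea can be illustrated with a small residual-correction sketch: a mechanistic model (here an assumed exponential-decay law with a fixed rate) makes the baseline prediction, and a data-driven component (here an ordinary least-squares line) is fitted only to the discrepancy between that prediction and the observations. This is one simple coupling strategy among many, chosen for illustration; the model and its parameters are hypothetical.

```python
import math
from typing import Callable, List, Tuple


def mechanistic(t: float, x0: float = 1.0, rate: float = 0.5) -> float:
    """Assumed physics-based model: exponential decay x(t) = x0 * exp(-rate * t)."""
    return x0 * math.exp(-rate * t)


def fit_linear(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Closed-form ordinary least-squares fit y ~ a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def build_hybrid(times: List[float], observed: List[float]) -> Callable[[float], float]:
    """Hybrid model = mechanistic prediction + data-driven fit to its residuals."""
    residuals = [y - mechanistic(t) for t, y in zip(times, observed)]
    a, b = fit_linear(times, residuals)
    return lambda t: mechanistic(t) + (a + b * t)
```

Because the correction is purely empirical, its predictions beyond the time range of the fitting data carry the same extrapolation risk that the report associates with data-centric models.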

Physical counterpart

Digital twins rely on the real-time (or near real-time) processing of accurate and reliable data that are often heterogeneous, large-scale, and multiresolution. While significant literature has been devoted to best practices for gathering and preparing data, several important opportunities merit further exploration. Handling outlier or anomalous data is critical to data quality assurance; robust methods are needed to identify and ignore spurious outliers while accurately representing salient rare events. At the same time, constraints on resources, time, and accessibility may prevent gathering data at the frequency or resolution needed to adequately capture system dynamics. Such under-sampling, particularly in complex systems with large spatiotemporal variability, could cause critical events or significant features to be missed, so innovative sampling approaches should be used to optimize data collection. Artificial intelligence (AI) and machine learning (ML) methods that focus on maximizing average-case performance may yield large errors on rare events, so new loss functions and performance metrics are needed. Improvements in sensor integrity, performance, and reliability, as well as the ability to detect and mitigate adversarial attacks, are crucial to advancing the trustworthiness of digital twins. To handle the vast amounts of data, such as large-scale streaming data, that digital twins require in certain applications, data assimilation methods that leverage optimized ML models, architectures, and computational frameworks must be developed.
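For a single scalar sensor stream, a common robust screening rule is the modified z-score built from the median and the median absolute deviation (MAD), which, unlike the mean and standard deviation, is not pulled toward the outliers it is meant to detect. The sketch below assumes the conventional scaling constant 0.6745 and cutoff 3.5. Flagged readings still need review, since a flagged value may be a salient rare event rather than a spurious one.

```python
import statistics
from typing import List


def flag_outliers(values: List[float], cutoff: float = 3.5) -> List[bool]:
    """Flag readings whose modified z-score exceeds `cutoff`.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a few extreme readings cannot inflate the spread estimate
    that is used to judge them.
    """
    med = statistics.median(values)
    mad = statistics.median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the readings equal the median; flag any deviation.
        return [v != med for v in values]
    return [abs(0.6745 * (v - med) / mad) > cutoff for v in values]
```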

Ethics, privacy, data governance, and security

Some digital twins may rely on identifiable (or re-identifiable) data, while others may contain proprietary or sensitive information. Protecting individual privacy requires proactive consideration within each element of the digital twin ecosystem. In sensitive or high-risk settings, digital twins require heightened levels of security, particularly for the transmission of information between the physical and virtual counterparts. In some cases, an automated controller may issue commands directly to the physical counterpart based on results from the virtual counterpart; securing these communications from interference is paramount.
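As one illustration of protecting controller commands from interference, the sketch below assumes a secret key already shared by the virtual counterpart and a hypothetical actuator, and uses Python’s standard `hmac` module to attach an HMAC-SHA256 tag and a sequence number to each command. The actuator rejects commands whose tag does not verify (tampering or forgery) and commands whose sequence number is not newer than the last accepted one (replay). Key management, confidentiality, and transport security are outside the scope of this sketch, and the command format is hypothetical.

```python
import hashlib
import hmac
import json


def sign_command(key: bytes, seq: int, command: dict) -> dict:
    """Attach a sequence number and an HMAC-SHA256 tag to a controller command."""
    payload = json.dumps({"seq": seq, "cmd": command}, sort_keys=True).encode()
    tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"payload": payload.decode(), "tag": tag}


class Actuator:
    """Hypothetical receiver on the physical side that verifies before acting."""

    def __init__(self, key: bytes):
        self.key = key
        self.last_seq = -1

    def accept(self, message: dict) -> bool:
        expected = hmac.new(self.key, message["payload"].encode(),
                            hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, message["tag"]):
            return False  # tampered with, or signed with a different key
        seq = json.loads(message["payload"])["seq"]
        if seq <= self.last_seq:
            return False  # replayed or out-of-order command
        self.last_seq = seq
        return True
```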

Physical-to-virtual feedback flow

Inverse problem methodologies and data assimilation are required to combine physical observations and virtual models. Digital twins require calibration and updating on actionable time scales, which highlights foundational gaps in inverse problem and data assimilation theory, methodology, and computational approaches. ML and AI could play large roles in addressing these challenges, for example through online learning techniques that continuously update models using streaming data. Additionally, in settings where data are limited, approaches such as active learning and reinforcement learning can help guide the collection of the additional data most salient to the digital twin’s objectives.
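Recursive least squares (RLS) with a forgetting factor is a classical online-learning technique that fits this description: each new observation updates the estimate in constant time, and older data are discounted so that the model can follow a physical counterpart that drifts. The sketch below assumes a hypothetical one-parameter model y = θx; the initial values and the forgetting factor λ are illustrative choices.

```python
class ScalarRLS:
    """Recursive least squares for y = theta * x with exponential forgetting."""

    def __init__(self, theta0: float = 0.0, p0: float = 1000.0, lam: float = 0.95):
        self.theta = theta0  # current parameter estimate
        self.p = p0          # scaled estimate variance; large value = weak prior
        self.lam = lam       # forgetting factor in (0, 1]; smaller forgets faster

    def update(self, x: float, y: float) -> float:
        """Assimilate one streaming observation (x, y) and return the new estimate."""
        gain = self.p * x / (self.lam + x * self.p * x)
        self.theta += gain * (y - x * self.theta)
        self.p = (self.p - gain * x * self.p) / self.lam
        return self.theta
```

Smaller values of λ track changes faster but make the estimate more sensitive to measurement noise, a small instance of the tradeoffs a digital twin must balance.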

Virtual-to-physical feedback flow

The digital twin may drive changes, either through an automatic controller or through a human, in the physical counterpart itself (for instance, through control) or in the observational systems associated with it (for instance, through sensor steering). Mathematically and statistically sophisticated formulations exist for optimal experimental design (OED), but few approaches scale to the high-dimensional problems anticipated for digital twins. In the context of digital twins, OED must be tightly integrated with data assimilation and with control or decision-support tasks to optimally design and steer data collection. Real-time digital twin computations may require edge computing under constraints on computational precision, power consumption, and communication. ML models that can be executed rapidly are well suited to these requirements, but their black-box nature is a barrier to establishing trust. Additional work is needed to develop trusted ML and surrogate models that perform well under the required computational and temporal conditions. The dynamic adaptation needs of digital twins may benefit from reinforcement learning approaches, but there is a gap between theoretical performance guarantees and methods that are effective in practical domains.
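A toy version of optimal experimental design for sensor placement is sketched below, assuming a linear model y = a + b·s with unit-variance measurement noise, where s is a hypothetical sensor location. Each step greedily adds the candidate location that most increases the determinant of the Fisher information matrix (a D-optimality criterion). Greedy selection is a simplification, and realistic digital twin problems are high dimensional and nonlinear, which is where the scalability gap noted above arises.

```python
from typing import List, Sequence, Tuple

# 2x2 matrix stored row-major as (m11, m12, m21, m22).
Matrix2 = Tuple[float, float, float, float]


def det2(m: Matrix2) -> float:
    """Determinant of a 2x2 matrix."""
    return m[0] * m[3] - m[1] * m[2]


def add_sensor(info: Matrix2, s: float) -> Matrix2:
    """Fisher information after one unit-noise reading of y = a + b*s at location s."""
    return (info[0] + 1.0, info[1] + s, info[2] + s, info[3] + s * s)


def greedy_d_optimal(candidates: Sequence[float], n_sensors: int,
                     prior_precision: float = 1e-3) -> List[float]:
    """Greedily pick n_sensors distinct locations that maximize det(information).

    Assumes n_sensors <= len(candidates). The small prior keeps the initial
    information matrix invertible so the first comparison is meaningful.
    """
    info: Matrix2 = (prior_precision, 0.0, 0.0, prior_precision)
    remaining = list(candidates)
    chosen: List[float] = []
    for _ in range(n_sensors):
        best = max(remaining, key=lambda s, m=info: det2(add_sensor(m, s)))
        info = add_sensor(info, best)
        chosen.append(best)
        remaining.remove(best)
    return chosen
```

For this straight-line model the criterion places the first two sensors at the ends of the candidate interval, consistent with the known behavior of D-optimal designs for linear regression.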

Verification, validation, and uncertainty quantification (VVUQ)

VVUQ must play a role in all elements of the digital twin ecosystem and is critical to the responsible development, use, and sustainability of digital twins. Evolution of the physical counterpart under real-world use conditions, changes in data collection, noisy data, shifts in the distribution of the data shared with the virtual representation, changes in the prediction and/or decision tasks posed to the digital twin, and updates to the digital twin’s virtual models all have consequences for VVUQ. Verification and validation help build trust in the virtual representation, while uncertainty quantification characterizes the quality of its predictions. Novel VVUQ challenges for digital twins arise from model discrepancies, unresolved scales, surrogate modeling, AI, hybrid modeling, and the need to issue predictions in extrapolatory regimes. Beyond the virtual representation, digital twin VVUQ must also address the uncertainties associated with the physical counterpart, including changes to sensors or data collection equipment and the evolution of the physical counterpart itself. Applications that require real-time updating also require continual VVUQ, which is not yet computationally feasible. VVUQ also plays a role in understanding the impact of the mechanisms used to pass information between the physical and virtual counterparts. These include challenges arising from parameter uncertainty and ill-posed or indeterminate inverse problems, as well as uncertainty introduced by including humans in the loop.
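The forward-propagation part of uncertainty quantification can be sketched with plain Monte Carlo sampling: draw an uncertain input from an assumed distribution, push each draw through the model, and summarize the spread of the predicted quantity of interest. The decay model, the Gaussian uncertainty on its rate, and the sample size are hypothetical; the challenges described above, such as continual VVUQ and model discrepancy, lie well beyond this sketch.

```python
import math
import random
import statistics
from typing import Tuple


def predict_qoi(rate: float, t: float = 2.0, x0: float = 1.0) -> float:
    """Assumed model: quantity of interest x(t) = x0 * exp(-rate * t)."""
    return x0 * math.exp(-rate * t)


def monte_carlo_interval(rate_mean: float, rate_std: float, n: int = 10_000,
                         seed: int = 0) -> Tuple[float, float, float]:
    """Propagate Gaussian uncertainty in `rate` to the QoI by Monte Carlo.

    Returns the sample mean and an empirical 95% interval (2.5th and 97.5th
    percentiles, taken by simple index lookup in the sorted samples).
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    samples = sorted(predict_qoi(rng.gauss(rate_mean, rate_std)) for _ in range(n))
    lower = samples[int(0.025 * (n - 1))]
    upper = samples[int(0.975 * (n - 1))]
    return statistics.mean(samples), lower, upper
```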

Conclusions

Digital twins are emerging as enablers of significant, sustainable progress across multiple domains of science, engineering, and medicine. However, realizing these benefits requires a sustained and holistic commitment to an integrated research agenda that addresses foundational challenges across mathematics, statistics, and computing. Within the virtual representation, advancing the models themselves is necessarily domain specific, but advancing hybrid modeling and surrogate modeling involves shared challenges that crosscut domains. Similarly, many of the physical counterpart challenges around sensor technologies and data are domain specific, but fusing multimodal data, ensuring data interoperability, and advancing data curation practices are shared challenges that crosscut domains. For the bidirectional flows, dedicated efforts are needed to advance data assimilation, inverse methods, control, and sensor-steering methodologies that are applicable across domains, while recognizing the domain-specific nature of decision making. Finally, there is substantial opportunity to develop innovative digital twin VVUQ methods that translate across domains. For more details on research directions for the computational sciences in digital twins, we refer the reader to the full 2023 NASEM report¹.