Let’s talk about AIGC and Web3

AIGC (AI-generated content) is powerful, but will it become the content productivity tool of the Web 3.0 era? Let’s first take a brief look at how the content production model has changed from Web 1.0 to Web 3.0.

The Web 1.0 era was mainly a "read-only" mode of one-way information delivery. The dominant media form was the portal website, such as Yahoo and AOL. Specific groups or companies published information to the Internet and fed it to users for browsing and reading. Users could only passively receive the same undifferentiated information that the website published; they could not upload their own feedback or communicate with other people online in real time.

With Web 2.0, people began to communicate with each other over the Internet, and interaction kept increasing. The content production model changed with it, from PGC (professionally generated content) alone to a combination of PGC and UGC (user-generated content). Today, UGC accounts for most of the market.

As we enter the Web 3.0 era, artificial intelligence, data, and the semantic web are forming new links between people and the Internet, and content consumption is growing rapidly. PGC and UGC alone will not be able to meet this rapidly expanding demand, and AIGC will become a productivity tool of the Web 3.0 era. AIGC draws on artificial intelligence and knowledge graphs either to assist humans in content creation or to generate content entirely on its own. It can improve the efficiency of content generation and also expand the diversity of content. In the Web 3.0 era, text generation, image rendering, and video content may all be handled by AIGC, and it may even be capable of entertainment-oriented music creation and game content generation.

AIGC technical principles

AIGC is poised to become the main content producer of Web 3.0, so what determines the quality of its output?

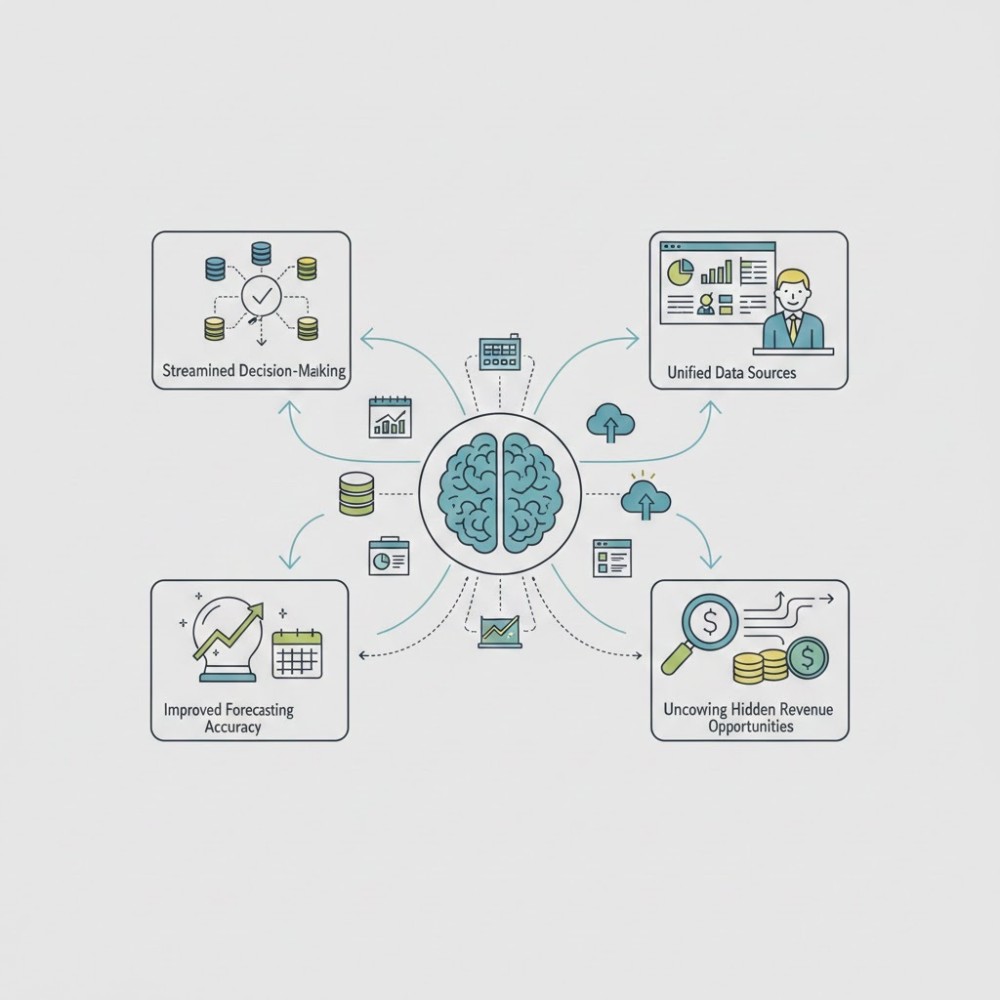

The output quality of AIGC depends mainly on three core elements: data, algorithms, and computing power.

- Data: Massive amounts of high-quality data from real application scenarios are the foundation for training accurate algorithms. This data includes voice, text, images, and so on.

- Algorithms: Neural networks and deep learning are effective methods for extracting intelligence from data. Unlike traditional machine learning algorithms, neural networks keep improving the learning ability of AI through iteration on both the learning paradigm and the network structure.

- Computing power: Computers, chips, and other hardware provide the basic computing power for AIGC. Computing power is infrastructure, and AI models have a huge demand for it.

Beyond the support of massive data and hardware computing power, AI's powerful creative ability is inseparable from the development of two core technologies: NLP (natural language processing) and algorithm models.

Natural Language Processing (NLP)

Natural language processing (NLP) is a branch of artificial intelligence and linguistics that studies how to process and use natural language. It covers many aspects and steps, including cognition, understanding, and generation.

Natural language cognition and understanding let the computer turn input language into meaningful symbols and relationships and then process them according to the purpose at hand. A natural language generation system converts computer data into natural language. Put simply, the goal is that when humans and machines interact, each side can “understand” the other.

Natural language processing has two core tasks, natural language understanding (NLU) and natural language generation (NLG).
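To make the two directions concrete, here is a minimal sketch in Python. It is only a toy: the sentence pattern, the `understand` and `generate` function names, and the pollen example data are all assumptions made for illustration, and a real NLU system has to cope with far more variety than a single regular expression can.

```python
import re
from typing import Optional

# Hypothetical pattern that covers exactly one sentence shape.
PATTERN = re.compile(
    r"the pollen level in the (?P<region>\w+) is (?P<level>\d+)",
    re.IGNORECASE,
)


def understand(sentence: str) -> Optional[dict]:
    """NLU direction: map a sentence to a machine-readable record."""
    match = PATTERN.search(sentence)
    if match is None:
        return None  # the sentence is outside what this toy parser covers
    return {"region": match["region"].lower(), "level": int(match["level"])}


def generate(record: dict) -> str:
    """NLG direction: map a machine-readable record back to a sentence."""
    return f"The pollen level in the {record['region']} is {record['level']}."


record = understand("The pollen level in the southeast is 7.")
print(record)            # {'region': 'southeast', 'level': 7}
print(generate(record))  # The pollen level in the southeast is 7.
```

Any sentence phrased differently, for example "Pollen is bad down south today", makes `understand` return `None`, which is a small-scale picture of why understanding is the harder of the two tasks.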

Natural language understanding (NLU)

Natural language understanding studies how to make computers understand human language, and it is the hardest of the natural language processing technologies. Its goal is for machines to understand language the way humans do.

Because natural language is diverse, ambiguous, and dependent on knowledge and context, computers face many difficulties in understanding it, so NLU still falls far short of human performance.

Natural language generation (NLG)

A natural language generation system can be thought of as a translator that converts data into natural language expressions. However, because natural language is so expressive, the way it produces the final text differs from the way a compiler produces its output.

Natural language generation can be regarded as the reverse of natural language understanding: an NLU system must work out the meaning of the input sentence to produce a machine representation, whereas an NLG system must decide how to turn concepts into language. The six typical steps of natural language generation are:

- Content determination: Decide what information to include in the text. Taking a pollen forecast program as an example, should the text explicitly mention that the pollen level in the southeast is 7?

- Document structuring: Decide the overall organization of the information to convey. For example, describe areas with high pollen counts first and then mention areas with low pollen counts.

- Aggregation: Combine similar sentences to make the text more readable and natural. For example, the two sentences "Friday's pollen level has risen from yesterday's moderate level to high" and "The pollen level in most parts of the country is between 6 and 7" can be merged into "Friday's pollen level has risen from yesterday's moderate level to high, with values between 6 and 7 in most parts of the country."

- Lexical choice: Choose the words that express each concept. For example, decide whether to use "moderate" or "intermediate."

- Referring expression generation: Generate expressions that identify objects or areas. For example, use "the northern islands and the northeastern corner of Scotland" to refer to a certain area in Scotland. This task also includes deciding on pronouns and other anaphors.

- Realization: Generate the actual text according to the rules of syntax, word formation, and orthography.
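The six steps can be sketched as a tiny rule-based pipeline. This is a simplified illustration under stated assumptions, not how a production NLG system is built: the region names, the readings, the 0 to 10 scale, the thresholds in `choose_word`, and every function name are hypothetical.

```python
from itertools import groupby

# Hypothetical readings on a 0-10 scale; regions and numbers are invented.
READINGS = {"southeast": 7, "north": 3, "southwest": 6, "northeast": 2, "midlands": 5}


def choose_word(level):
    """Lexical choice: pick a word for a numeric level (assumed thresholds)."""
    if level >= 6:
        return "high"
    if level >= 4:
        return "moderate"
    return "low"


def refer_to(regions):
    """Referring expressions: name one or more regions in a single phrase."""
    names = [f"the {region}" for region in regions]
    if len(names) == 1:
        return names[0]
    return ", ".join(names[:-1]) + " and " + names[-1]


def forecast(readings):
    # Content determination: report only the clearly high or low regions.
    selected = {r: v for r, v in readings.items() if choose_word(v) != "moderate"}
    # Document structuring: highest levels first.
    ordered = sorted(selected.items(), key=lambda item: item[1], reverse=True)
    sentences = []
    # Aggregation: regions that share the same wording go into one sentence.
    for word, group in groupby(ordered, key=lambda item: choose_word(item[1])):
        regions = [region for region, _ in group]
        # Realization: number agreement, capitalization, and punctuation.
        noun = "level" if len(regions) == 1 else "levels"
        verb = "is" if len(regions) == 1 else "are"
        sentences.append(f"Pollen {noun} in {refer_to(regions)} {verb} {word}.")
    return " ".join(sentences)


print(forecast(READINGS))
# Pollen levels in the southeast and the southwest are high. Pollen levels in the north and the northeast are low.
```

Modern AIGC systems usually learn these decisions end to end inside a neural network, but the same questions (what to say, in what order, and in which words) still have to be answered somewhere.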

Algorithm model

In recent years, the rapid development of AIGC has been driven mainly by accumulated progress in algorithms, including the Generative Adversarial Network (GAN), Variational Autoencoder (VAE), Normalizing Flows (NFs), Autoregressive Model (AR), Energy-Based Model (EBM), and Diffusion Model. Among these, the generative adversarial network and the diffusion model are two of the most commonly used.

The diffusion model is a newer type of generative model that can generate a wide variety of high-resolution images. Diffusion models can be applied to tasks such as image denoising, image restoration, super-resolution, and image generation.
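As background for why denoising comes so naturally to these models: a diffusion model is trained by gradually adding noise to real data and learning to undo that noise. The sketch below shows only the forward (noising) half, using the closed form from the common DDPM formulation, x_t = sqrt(a_t) * x_0 + sqrt(1 - a_t) * noise, where a_t is the cumulative product of (1 - beta). The linear beta schedule, the 1000 steps, the four "pixel" values, and the function names are assumptions chosen for illustration, and the learned reverse process is omitted entirely.

```python
import math
import random


def alpha_bar(t, betas):
    """Cumulative product of (1 - beta) over the first t steps."""
    product = 1.0
    for beta in betas[:t]:
        product *= 1.0 - beta
    return product


def add_noise(x0, t, betas, rng):
    """Sample x_t directly from x_0: sqrt(a) * x0 + sqrt(1 - a) * noise."""
    a = alpha_bar(t, betas)
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


# Assumed linear noise schedule; the endpoints are illustrative values.
STEPS = 1000
BETAS = [1e-4 + (0.02 - 1e-4) * i / (STEPS - 1) for i in range(STEPS)]

rng = random.Random(0)
pixels = [0.5, -0.25, 1.0, 0.0]  # stand-in for image pixel values
for t in (0, 100, 500, 1000):
    # The kept-signal fraction alpha_bar shrinks toward 0 as t grows.
    print(t, round(alpha_bar(t, BETAS), 4), add_noise(pixels, t, BETAS, rng))
```

At t = 0 the data is untouched, and by the last step almost nothing of the original signal remains. Generation runs this in reverse: it starts from pure noise and removes a little of it at each step with a trained network.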

AIGC application scenarios and development trends

As AIGC technology develops, its scope of application will gradually expand. AIGC is already widely used for text, images, audio, games, and code generation.

- Text creation: AIGC is mainly used in news writing, script writing, etc.

- Picture creation: There are already many AI drawing applications on the market. Users only need to enter a text description, and the computer will automatically generate a work.

- Video creation: Google launched Phenaki, an AI video generation model that can generate videos from text. Many text-to-video products are now on the market.

- Audio creation: "AI Stefanie Sun" has become popular. Although it has not yet demonstrated any original composition, it already shows how AIGC can be applied to audio creation.

- Game development: Some game companies already apply AI technologies to NPCs, scene modeling, concept art, and so on.

At present, AIGC mainly assists people in content production. I believe that as the technology develops, AIGC will take part in more content production and gradually account for a larger share of co-creation with humans. In the future, AIGC may even upend the existing content production model and complete content creation independently, bringing more content productivity to the Web 3.0 era.