Ethical Implications of Artificial Intelligence in Decision-Making Processes

INTRODUCTION:

Artificial Intelligence (AI) now shapes decision-making in many domains, including healthcare, finance, transportation, and criminal justice. AI offers clear benefits, such as efficiency, accuracy, and automation, but it also raises significant ethical concerns. This article explores the ethical implications of AI in decision-making processes, highlighting both the opportunities and the challenges it presents.

1. Bias and Fairness:

AI systems, particularly those built on machine learning, learn from historical data. If that data reflects racial, gender, or other discrimination, the system can reproduce the same patterns in its own decisions. In hiring or loan approval, for example, a model trained on biased past outcomes may unfairly disadvantage certain demographic groups. Addressing bias requires identifying and correcting skew in the training data, and then continuously monitoring and evaluating the deployed system for fairness throughout its lifecycle. It also helps to bring diverse perspectives and interdisciplinary expertise into AI development, so that bias is considered from the outset rather than patched afterward.
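To make "monitoring for fairness" concrete, the following is a minimal sketch of two common group-level checks: the gap in approval rates between groups (often called demographic parity) and the gap in approval rates among qualified applicants (often called equal opportunity). The function names, the groups "A" and "B", and the toy data are all hypothetical and chosen only for illustration; a real audit would use far more data and more than one metric.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of positive decisions (1 = approved) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(decisions, labels, groups):
    """Approval rate among truly qualified people (label 1), per group."""
    qualified = defaultdict(int)
    approved = defaultdict(int)
    for decision, label, group in zip(decisions, labels, groups):
        if label == 1:
            qualified[group] += 1
            approved[group] += decision
    return {g: approved[g] / qualified[g] for g in qualified}


def gap(rates):
    """Largest difference between any two groups; 0.0 means equal rates."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = approved / qualified, 0 = rejected / unqualified.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

parity_gap = gap(selection_rates(decisions, groups))
opportunity_gap = gap(true_positive_rates(decisions, labels, groups))
print(f"approval-rate gap: {parity_gap:.2f}")
print(f"qualified-approval gap: {opportunity_gap:.2f}")
```

A large gap on either measure does not prove discrimination by itself, but it is a signal that the system's decisions deserve closer review.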

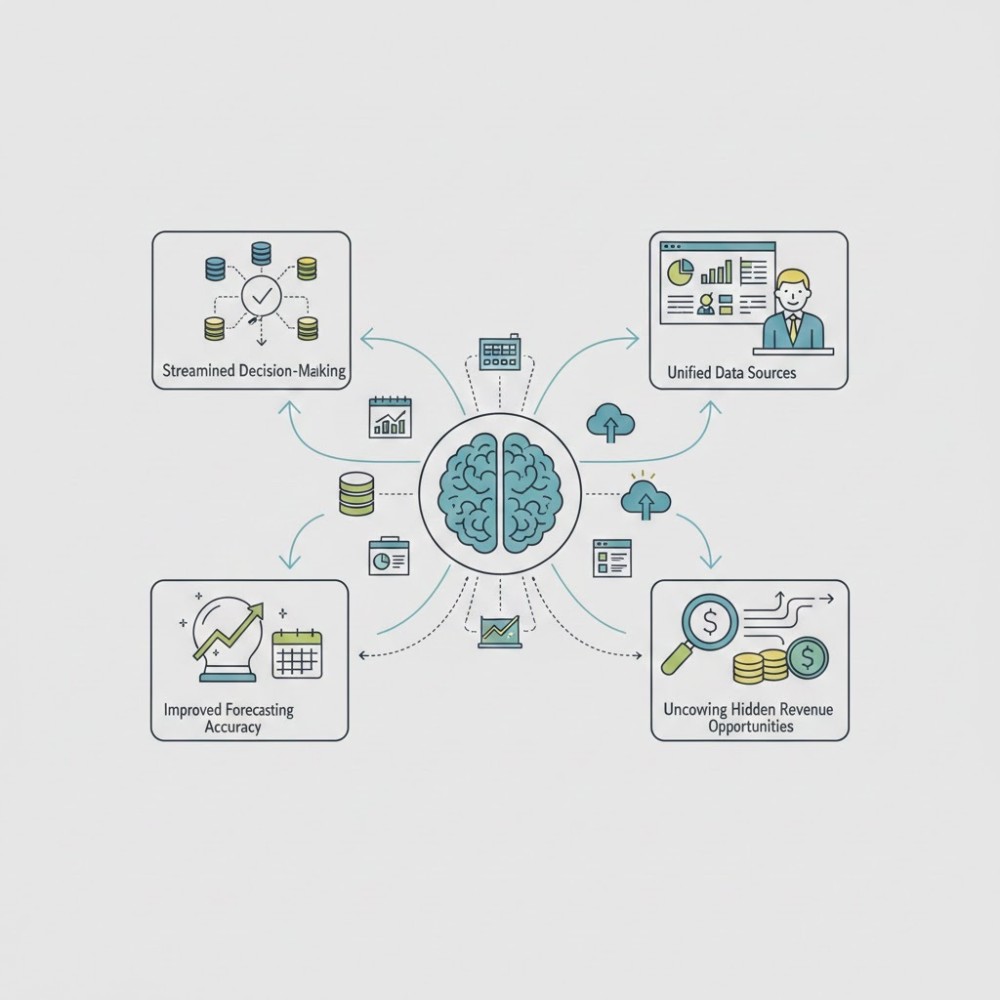

2. Accountability and Transparency:

When AI algorithms are opaque, it becomes difficult to trace how a decision was made or to hold anyone responsible for errors or unethical outcomes. This opacity also raises the concern that decisions are being shaped by undisclosed factors or biases. Enhancing accountability and transparency means making AI systems more explainable and interpretable, so that stakeholders can understand how decisions are reached. In practice, this may involve providing explanations or justifications for AI-generated decisions and implementing mechanisms for auditing and oversight.
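As one illustration of what an explanation for an AI-generated decision can look like, the sketch below assumes a simple linear scoring model, where each input's contribution to the score is just its weight times its value. The feature names, weights, bias, and threshold are hypothetical, and many deployed systems are not linear, so this shows the idea rather than a general method.

```python
def explain_decision(weights, bias, features, threshold=0.0):
    """Return the decision plus each feature's contribution to the score.

    Assumes a linear model: score = bias + sum(weight * value).
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    # Rank features by how strongly they pushed the score, in either direction.
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return {
        "approved": score >= threshold,
        "score": score,
        "contributions": ranked,
    }


# Hypothetical loan-scoring model and applicant.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
bias = -1.0
applicant = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 2.0}

explanation = explain_decision(weights, bias, applicant)
print("approved:", explanation["approved"])
for name, contribution in explanation["contributions"]:
    print(f"  {name}: {contribution:+.2f}")
```

Storing a record like this alongside each decision is one way to give auditors and affected individuals something specific to review.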

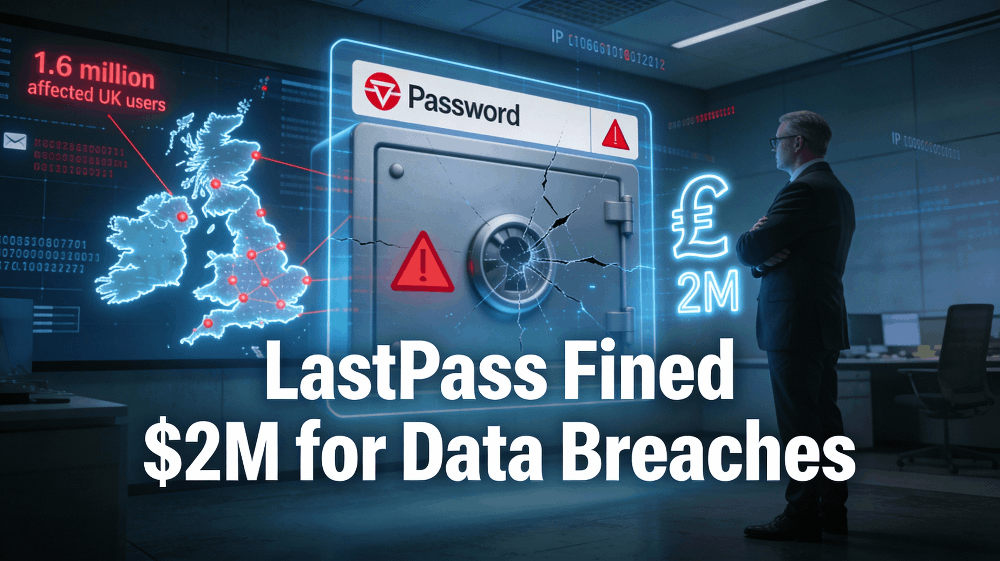

3. Privacy and Consent:

AI systems often rely on vast amounts of personal data to function effectively, which raises concerns about privacy and consent. Individuals may not know how their data is being used, or may never have given explicit consent for its use in AI-driven decision-making. Protecting privacy requires robust data protection measures, such as encryption and anonymization, as well as clear policies governing data collection, storage, and usage. Organizations must also ensure that individuals have meaningful control over their data and are told how AI systems use it.
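The sketch below shows, under simplifying assumptions, how a consent check and basic data minimization might be applied before a record reaches an AI system. The record fields (`email`, `age`, `income`, `consent_ai_processing`) and the function names are hypothetical. Note that replacing an identifier with a keyed hash is pseudonymization, not full anonymization: whoever holds the key can still link records to people, so the key itself must be protected.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def generalize_age(age: int, band: int = 10) -> str:
    """Coarsen an exact age into a range such as '30-39'."""
    low = (age // band) * band
    return f"{low}-{low + band - 1}"


def prepare_record(record: dict, key: bytes):
    """Return a reduced record, or None if the person has not consented."""
    if not record.get("consent_ai_processing", False):
        return None
    return {
        "subject": pseudonymize(record["email"], key),
        "age_band": generalize_age(record["age"]),
        "income": record["income"],
    }


# Hypothetical key and records; a real key would come from a secrets manager.
key = b"example-secret-key"
consenting = {
    "email": "alice@example.com",
    "age": 37,
    "income": 52000,
    "consent_ai_processing": True,
}
non_consenting = {"email": "bob@example.com", "age": 45, "income": 61000}

print(prepare_record(consenting, key))
print(prepare_record(non_consenting, key))
```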

4. Job Displacement and Economic Inequality:

The widespread adoption of AI and automation technologies has the potential to disrupt labor markets, displacing workers and exacerbating economic inequality. While AI can create new job opportunities, it may also render certain tasks or roles obsolete, particularly routine or repetitive ones. To address these challenges, policymakers, businesses, and educators must collaborate on strategies for reskilling and upskilling workers whose jobs are at risk of automation. Efforts to promote inclusive growth and an equitable distribution of AI's benefits are also essential to keep socioeconomic disparities from widening.

CONCLUSION:

In navigating the ethical terrain of AI in decision-making, it's imperative to strike a balance between harnessing the benefits of AI technology and mitigating its potential harms. This requires a multidisciplinary approach that considers not only technical aspects but also ethical, social, and legal implications. By prioritizing transparency, fairness, accountability, privacy, and equity, we can ensure that AI serves the common good and contributes to a more just and equitable society.
