Web3 X AI: How does the decentralized model work?

Key points

It's hard to ignore the growing enthusiasm for artificial intelligence (AI) and the excitement about combining it with Web3. However, the current state of this nascent combination shows that existing blockchain frameworks still fall short of AI's infrastructure needs.

In this series, we explore the relationship between AI and Web3, along with the challenges, opportunities, and vertical applications in the Web3 sector.

This first part examines the evolution of Web3 infrastructure for AI, the current challenges around computational requirements, and the opportunities ahead.

Artificial intelligence (AI) and blockchain are two of the most advanced technologies to capture the public imagination over the past decade. AI development in Web2 is clearly accelerating, as venture capital investment in the sector keeps growing this year: Inflection AI raised $1.3 billion in June 2023 with investment from Microsoft and Nvidia, and OpenAI competitor Anthropic raised $1.25 billion from Amazon in September 2023.

However, the role Web3 can play here is still viewed with skepticism. Will Web3 contribute to the development of AI? If so, why does AI need blockchain, and how would the two work together? One narrative we are seeing is that Web3 has the potential to radically transform production relations, while AI has the potential to transform productivity itself. Bringing these technologies together is proving complex, however, revealing both challenges and opportunities in infrastructure requirements.

AI Infrastructure and the GPU Crisis

The main bottleneck we currently see in AI is the GPU shortage. Large language models (LLMs) such as OpenAI's GPT-3.5 powered the first breakout application of this wave, ChatGPT. It became the fastest app to reach 100 million monthly active users (MAU), doing so in roughly two months, whereas apps like YouTube and Facebook took years. Its success sparked countless new applications and models built on LLMs, such as Stability AI's StableLM and Google's PaLM 2, which powers Bard, the PaLM API, MakerSuite, and Workspace.

Deep learning is a lengthy process that requires large amounts of computing power at scale, and the more parameters an LLM has, the more GPU memory it needs. Each parameter is stored in GPU memory, and the model must load these parameters into memory during inference; if the model is larger than the available GPU memory, it cannot run on that hardware. Even leading companies like OpenAI are facing GPU shortages, which have made it difficult to roll out multimodal models and models with longer sequence lengths (32k rather than 8k tokens). With chip supply severely constrained, large-scale applications are running up against the limits of what current LLMs can serve, and AI startups are competing for GPU capacity to gain a first-mover advantage.
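The link between parameter count and GPU memory can be made concrete with a rough estimate. This is a minimal sketch, assuming weight memory is simply the number of parameters times the bytes per parameter; it ignores activations, the KV cache, and framework overhead, so real requirements are higher. The model sizes are hypothetical examples, and 80 GB corresponds to a single Nvidia H100 80GB card.

```python
# Rough estimate of the GPU memory needed just to hold an LLM's weights.
# Assumption: memory ~= number of parameters x bytes per parameter. Activations,
# the KV cache, and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Weight footprint in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, gpu_memory_gb: float, dtype: str = "fp16") -> bool:
    """True if the weights alone fit in the given amount of GPU memory."""
    return weight_memory_gb(num_params, dtype) <= gpu_memory_gb


if __name__ == "__main__":
    # Hypothetical model sizes checked against one 80 GB GPU.
    for label, params in [("7B", 7e9), ("70B", 70e9), ("175B", 175e9)]:
        need = weight_memory_gb(params)
        print(f"{label}: ~{need:.0f} GB in fp16, fits on one 80 GB GPU: {fits_on_gpu(params, 80)}")
```

Under these assumptions a 7B-parameter model needs about 14 GB for its fp16 weights, while a 175B-parameter model needs about 350 GB, more than four 80 GB GPUs can hold, which is why very large models have to be split across several devices.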

Centralized and decentralized approaches

Looking ahead, centralized solutions are expected to ease GPU limitations. Nvidia released TensorRT-LLM in August 2023 to deliver faster inference and higher performance, and the Nvidia H200 is expected to launch in Q2 2024. In addition, companies such as CoreWeave, which began as a cryptocurrency miner, and Lambda Labs aim to provide GPU-centric cloud computing, renting out Nvidia H100s for about 2 to 2.25 USD per hour. Cryptocurrency miners themselves largely use ASICs, whose hardware architecture is dedicated to a specific hashing algorithm; this gives them a significant efficiency advantage over general-purpose computers and GPUs for mining.
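The hourly H100 rates quoted above translate into job costs with simple arithmetic. This is a minimal sketch, assuming flat per-GPU hourly pricing in the 2 to 2.25 USD range with no discounts, storage, or egress fees; the 8-GPU, one-week job is a hypothetical example.

```python
# Back-of-the-envelope H100 rental cost using the quoted 2 to 2.25 USD/hour range.
# Assumption: flat hourly pricing per GPU, no discounts, storage, or egress fees.

LOW_RATE_USD = 2.00
HIGH_RATE_USD = 2.25


def rental_cost_range(num_gpus: int, hours: float) -> tuple[float, float]:
    """Return (low, high) total cost in USD for renting num_gpus for the given hours."""
    gpu_hours = num_gpus * hours
    return gpu_hours * LOW_RATE_USD, gpu_hours * HIGH_RATE_USD


if __name__ == "__main__":
    # Hypothetical job: 8 GPUs for one week (168 hours).
    low, high = rental_cost_range(8, 168)
    print(f"8 x H100 for a week: {low:,.0f} to {high:,.0f} USD")  # 2,688 to 3,024 USD
```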

On the Web3 side, an Airbnb-like GPU marketplace is already a popular idea, and several projects are currently trying to build one. Blockchain incentives are well suited to bootstrapping a network from its participants' own resources, and they are an effective mechanism for attracting individuals or organizations with idle GPUs in a decentralized way. This matters because accessing GPUs typically requires signing long-term contracts with cloud service providers, even though applications may not use the GPUs for the full duration of the contract.
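The matching step at the heart of an Airbnb-like GPU marketplace can be sketched in a few lines. This is a toy illustration, assuming a simple order book where providers list idle GPUs and each job is matched to the cheapest listing that meets its memory and price limits; `Listing`, `Job`, `match`, and the example providers and prices are all hypothetical. Payments, incentives, and verification of the work done, which a real decentralized network would need, are not modeled.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Listing:
    """A provider's offer of one idle GPU (all fields are hypothetical)."""
    provider: str
    gpu_model: str
    memory_gb: int
    price_per_hour: float  # asking price in USD
    available: bool = True


@dataclass
class Job:
    """A renter's request: minimum GPU memory and the highest hourly price accepted."""
    name: str
    min_memory_gb: int
    max_price_per_hour: float


def match(job: Job, listings: List[Listing]) -> Optional[Listing]:
    """Reserve and return the cheapest available listing that meets the job's limits."""
    candidates = [
        offer
        for offer in listings
        if offer.available
        and offer.memory_gb >= job.min_memory_gb
        and offer.price_per_hour <= job.max_price_per_hour
    ]
    if not candidates:
        return None
    best = min(candidates, key=lambda offer: offer.price_per_hour)
    best.available = False  # reserved, so it cannot be matched to a second job
    return best


if __name__ == "__main__":
    listings = [
        Listing("alice", "RTX 4090", 24, 0.60),
        Listing("bob", "A100", 80, 1.50),
        Listing("carol", "H100", 80, 2.10),
    ]
    first = match(Job("fine-tune", 40, 2.00), listings)
    second = match(Job("fine-tune-2", 40, 2.00), listings)
    print(first.provider if first else None)    # bob
    print(second.provider if second else None)  # None: carol is over budget
```

Because renters pay per match rather than per contract term, GPUs are only booked while a job actually needs them, which is the contrast with long-term cloud contracts drawn above.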

Another approach, Petals, splits an LLM into blocks of layers hosted on different servers, similar to the concept of sharding. Petals was developed as part of the BigScience collaboration by engineers and researchers from Hugging Face, the University of Washington, and Yandex, among others. Any user can join the network in a decentralized way as a client and apply the model to their own data.
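The layer-splitting idea can be illustrated with a toy pipeline. This is not the Petals API; it is a minimal sketch, assuming each layer is a plain Python function and each server is a local object, whereas in a real network the intermediate activations would travel between machines. `partition_layers`, `Server`, and `client_forward` are hypothetical names.

```python
from typing import Callable, List

# A "layer" here is any function from a number to a number (a stand-in for a transformer block).
Layer = Callable[[float], float]


def partition_layers(layers: List[Layer], num_servers: int) -> List[List[Layer]]:
    """Split layers into contiguous blocks, one per server, with sizes differing by at most one."""
    size, extra = divmod(len(layers), num_servers)
    blocks, start = [], 0
    for i in range(num_servers):
        end = start + size + (1 if i < extra else 0)
        blocks.append(layers[start:end])
        start = end
    return blocks


class Server:
    """Hypothetical server that hosts one contiguous block of layers."""

    def __init__(self, block: List[Layer]):
        self.block = block

    def forward(self, x: float) -> float:
        for layer in self.block:
            x = layer(x)
        return x


def client_forward(servers: List[Server], x: float) -> float:
    """The client pushes its input through each server's block in order."""
    for server in servers:
        x = server.forward(x)  # in a real network, this call would be a remote request
    return x


if __name__ == "__main__":
    # Six toy layers: layer k computes 2 * x + k, so the order of layers matters.
    layers = [lambda x, k=k: 2 * x + k for k in range(6)]
    servers = [Server(block) for block in partition_layers(layers, 3)]
    local = 1.0
    for layer in layers:
        local = layer(local)
    assert client_forward(servers, 1.0) == local  # same result as running every layer locally
    print(local)  # 121.0
```

Keeping each block contiguous means every server only needs memory for its own share of the layers, at the cost of one network hop per block on every forward pass.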

AI X Web3 infrastructure applications

Decentralized AI compute networks

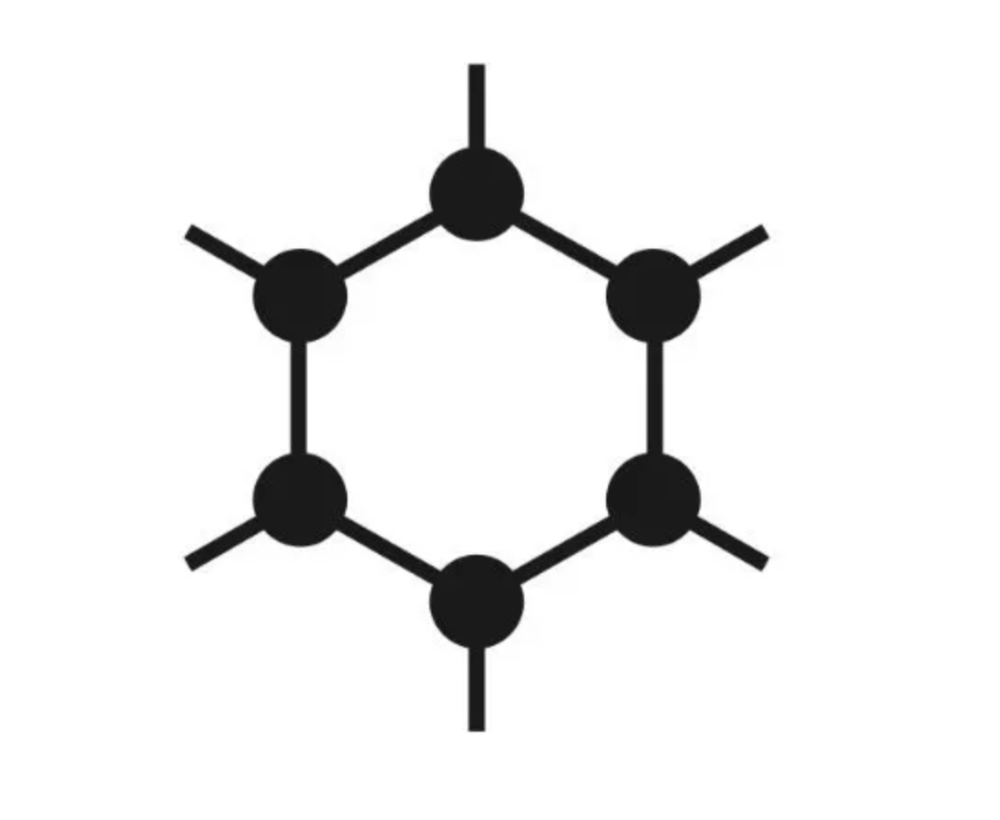

Decentralized compute networks connect people who need computing resources with systems that have unused computing power. Because individuals and organizations can contribute their idle resources to the network without incurring additional costs, the network can offer lower prices than centralized providers. Blockchain-based peer-to-peer networks that support decentralized GPU rendering also open up many possibilities for scaling AI-powered 3D content creation in Web3 gaming. However, a significant drawback of decentralized compute networks is that machine learning training can slow down because of communication overhead between the different computing devices.
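The communication overhead can be estimated with a back-of-the-envelope calculation. This sketch assumes data-parallel training with ring all-reduce, in which each worker sends roughly 2(N - 1)/N times the gradient size per step, and it ignores latency and any overlap of computation with communication. The 1B-parameter model, the 8 workers, and both bandwidth figures are hypothetical.

```python
# Per-step gradient synchronization time for data-parallel training with ring all-reduce.
# Assumptions: every worker holds a full gradient copy, link bandwidth is the only cost,
# and latency and compute/communication overlap are ignored.


def ring_allreduce_bytes_per_worker(grad_bytes: float, num_workers: int) -> float:
    """Each worker sends about 2 * (N - 1) / N times the gradient size per all-reduce."""
    return 2 * (num_workers - 1) / num_workers * grad_bytes


def sync_seconds(grad_bytes: float, num_workers: int, bandwidth_bytes_per_s: float) -> float:
    """Time to push one all-reduce worth of data through a single link."""
    return ring_allreduce_bytes_per_worker(grad_bytes, num_workers) / bandwidth_bytes_per_s


if __name__ == "__main__":
    grad_bytes = 1e9 * 2      # hypothetical 1B-parameter model with fp16 gradients
    workers = 8
    datacenter = 100e9 / 8    # 100 Gbit/s link, converted to bytes per second
    home = 100e6 / 8          # 100 Mbit/s consumer connection, in bytes per second
    print(f"datacenter: {sync_seconds(grad_bytes, workers, datacenter):.2f} s per step")
    print(f"home internet: {sync_seconds(grad_bytes, workers, home):.0f} s per step")
```

With these numbers, the same gradient sync takes about 0.28 s per step over a 100 Gbit/s datacenter link but about 280 s over a 100 Mbit/s home connection, which illustrates why training across loosely connected devices can slow down sharply.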

Decentralized AI data

Training data is the initial dataset used to teach a machine learning application to recognize patterns or meet a specific criterion. Testing or validation data, by contrast, is used to evaluate the model's accuracy, and it must be a separate dataset because the model is already familiar with the training data. There are currently many efforts to build markets for AI data sources and AI data labeling, with blockchain serving as an incentive layer that helps large companies and organizations work more efficiently. However, these verticals are still at an early stage of development and face obstacles such as the need for human review and concerns around blockchain-enabled data.

Meanwhile, on the compute side, there are special-purpose (SP) computing networks designed specifically for training ML models. SP computing networks are tailored to specific use cases and often adopt an architecture that pools computing resources into a single unified cluster, much like a supercomputer. These networks determine costs through a gas mechanism or community-controlled parameters.
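To make the distinction between training and validation data concrete, here is a minimal sketch of a random hold-out split. It assumes the examples can be shuffled independently (no time ordering or duplicates to handle); `train_validation_split`, the 20% default, and the seed are illustrative choices rather than a standard.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def train_validation_split(
    examples: Sequence[T], val_fraction: float = 0.2, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of the data and hold out val_fraction of it for validation.

    The held-out examples never appear in the training set, so they measure how
    well the model handles data it has not seen during training.
    """
    if not 0.0 < val_fraction < 1.0:
        raise ValueError("val_fraction must be between 0 and 1")
    items = list(examples)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    n_val = max(1, round(len(items) * val_fraction))
    return items[n_val:], items[:n_val]


if __name__ == "__main__":
    data = list(range(100))
    train, val = train_validation_split(data)
    print(len(train), len(val))  # 80 20
```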