What is data cleaning in machine learning and how to perform it?

What is data cleaning in machine learning?

Data cleaning in machine learning (ML) is an indispensable process that significantly influences the accuracy and reliability of predictive models. It involves various techniques and methodologies aimed at improving data quality by identifying and rectifying corrupt or inaccurate records in a data set. It is often considered the first step in machine learning data preprocessing.

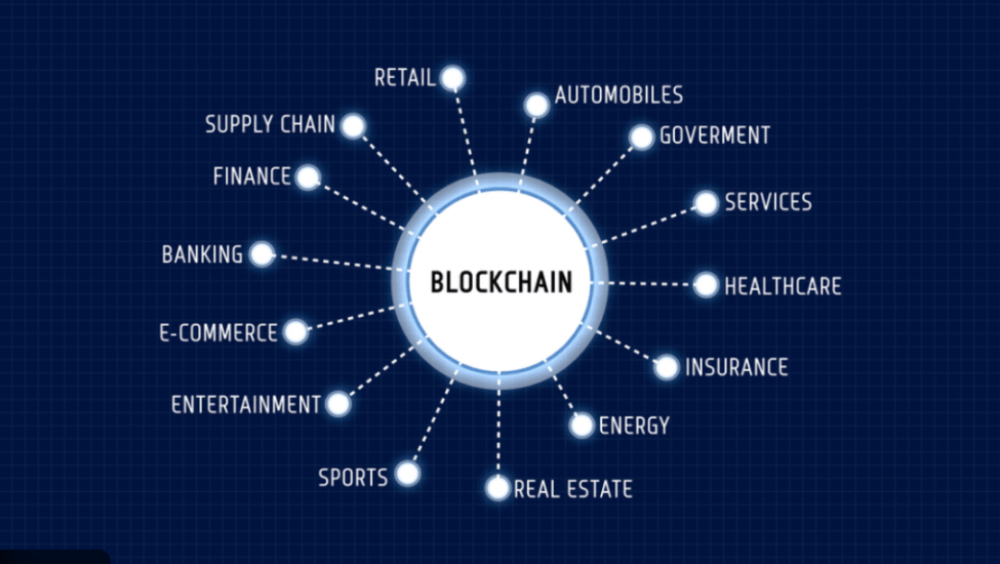

Inaccurate or poor-quality data can lead to misleading results with significant implications, especially in critical applications like healthcare, finance, autonomous vehicles and cryptocurrency markets. Ensuring high data quality through meticulous data cleaning is paramount for the success of AI applications, including those in the rapidly evolving and data-intensive fields of blockchain and digital currencies.

The importance of data quality in Web3

In the Web3 space, where decentralized technologies meet advanced analytics, the importance of data quality cannot be overstated. It hinges on factors such as accuracy, completeness, consistency and reliability, which are essential for ensuring that the data effectively represents the real-world scenarios it’s meant to model. This is particularly crucial in machine learning applications that are becoming increasingly integral to the Web3 ecosystem, from predictive algorithms in decentralized finance (DeFi) to enriching nonfungible token (NFT) marketplaces with in-depth data analysis.

For these applications, data must be meticulously curated to minimize missing values, ensure uniformity across various sources, and maintain a high level of reliability for critical decision-making. The process of data cleaning plays a vital role in this context, aiming to refine data quality and ensure that inputs into machine learning models are capable of yielding valid and trustworthy results. This approach aligns with the foundational principles of the Web3 paradigm, which emphasizes transparency, trust and verifiability in digital transactions and interactions.

Techniques for data cleaning

The following sections explain essential techniques for refining data sets and ensuring the data accuracy and consistency needed for effective analysis and decision-making.

Handling missing data in machine learning

Below are various techniques in ML for handling missing data:

Imputation

- Mean or median imputation: Substituting missing values with the mean or median of the available data, suitable for numerical data.

- Mode imputation: Using the most frequent value to fill in missing data, typically applied to categorical data.

- K-nearest neighbors (KNN) imputation: Estimating the missing values based on the K-nearest neighbors (a simple, non-parametric algorithm used for classification and regression tasks) found in the multidimensional space of the other variables.

- Regression imputation: Predicting missing values using linear regression or another predictive model based on the relationship between the missing data’s variable and other variables.
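The simpler imputation strategies above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library; the `impute` helper and the sample `fees` and `tokens` columns are hypothetical and not taken from any particular data set. Missing entries are assumed to be represented as `None`.

```python
from statistics import mean, median, mode


def impute(values, strategy="mean"):
    """Return a copy of `values` with None entries replaced by a summary statistic."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = mean(observed)
    elif strategy == "median":
        fill = median(observed)
    elif strategy == "mode":
        fill = mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return [fill if v is None else v for v in values]


# Hypothetical columns: a numeric fee column and a categorical token column.
fees = [2.0, None, 4.0, 100.0, None]
tokens = ["ETH", "BTC", None, "ETH"]

print(impute(fees, "mean"))    # the mean is pulled upward by the 100.0 entry
print(impute(fees, "median"))  # the median is less sensitive to that entry
print(impute(tokens, "mode"))  # the most frequent category fills the gap
```

In this toy column the mean and the median give very different fill values, which is one reason the median is often preferred when a numerical column contains extreme values.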

Deletion

- Listwise deletion: Removing entire records that contain any missing values — simple but may result in significant data loss.

- Pairwise deletion: Utilizing all available data by analyzing pairs of variables without discarding entire records. This method is useful in correlation or covariance calculations but can lead to inconsistencies.
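The difference between the two deletion strategies can be illustrated with a small sketch. The records and field names below are hypothetical, missing values are assumed to be `None`, and the Pearson correlation is computed by hand to keep the example self-contained.

```python
from statistics import mean

# Hypothetical records; None marks a missing value.
rows = [
    {"price": 1.0, "volume": 10.0, "fee": 0.1},
    {"price": 2.0, "volume": None, "fee": 0.2},
    {"price": 3.0, "volume": 30.0, "fee": None},
    {"price": 4.0, "volume": 40.0, "fee": 0.4},
]


def listwise_delete(rows):
    """Keep only records in which every field is present."""
    return [r for r in rows if all(v is not None for v in r.values())]


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def pairwise_correlation(rows, a, b):
    """Correlate fields a and b using every record where both are present."""
    pairs = [(r[a], r[b]) for r in rows if r[a] is not None and r[b] is not None]
    xs, ys = zip(*pairs)
    return pearson(xs, ys), len(pairs)


print(len(listwise_delete(rows)))                    # records left after listwise deletion
print(pairwise_correlation(rows, "price", "volume"))  # correlation and number of pairs used
print(pairwise_correlation(rows, "price", "fee"))
```

Listwise deletion keeps two of the four records here, while each pairwise correlation uses three. Because different pairs of variables are computed from different subsets of records, the resulting statistics are not guaranteed to be mutually consistent.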

Substitution

- Hot deck imputation: Replacing missing values with observed responses from similar cases (donors) within the same data set.

- Cold deck imputation: Substituting missing data with values from external, similar data sets, typically used when a hot deck is not an option.

Data augmentation

- Multiple imputation: Creating multiple complete data sets by imputing missing values several times, followed by analysis of each data set and combining the results to account for the uncertainty of the missing data.

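- Expectation-maximization algorithm: An iterative process that alternates between estimating the missing values from the current model parameters and updating those parameters to maximize the likelihood function. It requires an assumed statistical model for the data, often a multivariate normal distribution.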

Removing outliers in data sets

Outliers are data points that deviate significantly from the rest of the data, potentially skewing the results and leading to inaccurate models. Techniques to remove outliers include:

Z-score method

This method identifies outliers by measuring the number of standard deviations a data point is from the mean. Data points that fall beyond a certain threshold, typically set at 3 standard deviations, are considered outliers.
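As a rough sketch, the Z-score rule can be written as follows. The `zscore_outliers` helper and the `transfers` sample are hypothetical, and the population standard deviation is used as an assumption; a sample standard deviation would work equally well for large data sets.

```python
from statistics import mean, pstdev


def zscore_outliers(values, threshold=3.0):
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values are identical, so nothing can be flagged
    return [v for v in values if abs(v - mu) / sigma > threshold]


# Hypothetical transfer amounts with one extreme entry.
transfers = [10, 11, 9, 10, 12, 10, 9, 11, 10, 10,
             11, 9, 10, 12, 10, 9, 11, 10, 10, 500]

print(zscore_outliers(transfers))
```

One caveat: in very small samples an extreme value inflates the standard deviation so much that its own Z-score can never exceed 3, so this rule is better suited to data sets with more than a handful of points.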

Interquartile range (IQR) method

This technique uses the IQR, which is the difference between the 75th and 25th percentiles of the data. Data points that fall more than 1.5 times the IQR above the 75th percentile or below the 25th percentile are considered outliers.
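A minimal sketch of the IQR rule, using the standard library's `statistics.quantiles`, might look like this. The `iqr_outliers` helper and the sample data are hypothetical, and the choice of the "inclusive" quantile method is an assumption; other quantile definitions give slightly different fences.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical sample with one value far above the rest.
data = [1, 2, 3, 4, 5, 6, 7, 8, 9, 100]

print(iqr_outliers(data))
```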

Modified Z-score method

This method is similar to the Z-score method, but it uses the median and the median absolute deviation (MAD) in place of the mean and standard deviation, making it more robust to very extreme values.
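The modified Z-score can be sketched as below. The constant 0.6745 and the cutoff of 3.5 follow a commonly cited convention and are assumptions rather than values given in this article; the helper name and sample data are hypothetical.

```python
from statistics import median


def modified_zscore_outliers(values, threshold=3.5):
    """Flag values whose modified Z-score, based on median and MAD, exceeds the threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # more than half the values equal the median; the score is undefined
    return [v for v in values if abs(0.6745 * (v - med) / mad) > threshold]


# Hypothetical sample: only ten points, one of them extreme.
amounts = [10, 11, 9, 10, 12, 10, 9, 11, 10, 500]

print(modified_zscore_outliers(amounts))
```

Because the median and MAD are barely affected by the extreme entry, this rule can flag it even in a sample of ten points, where the ordinary Z-score with a threshold of 3 cannot.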

Distance-based methods

In multidimensional data, methods like the Mahalanobis distance can identify outliers by considering the covariance among variables.
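For two variables, the Mahalanobis distance can be written out by hand, which makes the role of the covariance visible. The sketch below is limited to the two-dimensional case as a simplifying assumption; the function names and sample points are hypothetical, and a general implementation would use a linear algebra library to invert the covariance matrix.

```python
from math import sqrt
from statistics import mean


def covariance_2d(points):
    """Return the mean vector and sample covariance terms (sxx, sxy, syy) of 2D points."""
    xs, ys = zip(*points)
    mx, my = mean(xs), mean(ys)
    n = len(points)
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in points) / (n - 1)
    return (mx, my), (sxx, sxy, syy)


def mahalanobis_2d(point, points):
    """Mahalanobis distance of `point` from the distribution estimated from `points`."""
    (mx, my), (sxx, sxy, syy) = covariance_2d(points)
    det = sxx * syy - sxy ** 2
    if det == 0:
        raise ValueError("covariance matrix is singular")
    dx, dy = point[0] - mx, point[1] - my
    # d^2 = [dx dy] * inverse(covariance) * [dx dy]^T, expanded for the 2x2 case
    d2 = (syy * dx * dx - 2 * sxy * dx * dy + sxx * dy * dy) / det
    return sqrt(max(d2, 0.0))


# Hypothetical (x, y) pairs that rise together, plus one pair that breaks the pattern.
observations = [(1, 2.0), (2, 4.1), (3, 5.9), (4, 8.2), (5, 9.8), (6, 12.1), (3, 12.0)]

for p in observations:
    print(p, round(mahalanobis_2d(p, observations), 2))
```

A point can sit within the normal range of each variable taken separately and still receive a large distance if it contradicts the relationship between the variables, which is what per-variable rules such as the Z-score miss.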

Density-based methods

Techniques such as the local outlier factor (LOF) assess the local density deviation of a given data point with respect to its neighbors, identifying as outliers the points whose local density is substantially lower than that of their neighbors.

Data normalization and cleaning and their relevance in Web3

Data normalization, a key step in data preprocessing, involves adjusting the scales of various variables to allow them to contribute equally to analytical models. This is crucial in environments where data from diverse sources and formats converge, as is often the case in decentralized applications (DApps) and smart contracts.

The Min-Max scaling technique is instrumental because it rescales data to a uniform range — commonly 0 to 1 — by adjusting based on the minimum and maximum values. This ensures that no single variable disproportionately influences the model due to scale differences. Similarly, Z-score normalization, or standardization, which recalibrates data to have a mean of 0 and a standard deviation of 1, is vital. This method is particularly advantageous for algorithms that rely on gradient descent, facilitating faster and more reliable convergence by harmonizing the scale across all variables.
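Both scaling methods reduce to one-line formulas, sketched here with the standard library. The function names and sample values are hypothetical, and returning zeros for a constant column is a design assumption made to avoid division by zero.

```python
from statistics import mean, pstdev


def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: no spread to rescale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Shift and scale values to have mean 0 and standard deviation 1."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical feature measured on a large scale.
gas_used = [21000, 50000, 120000, 300000]

print(min_max_scale(gas_used))
print(standardize(gas_used))
```

Min-Max scaling is sensitive to extreme values because they define the range, so it is usually applied after outliers have been handled.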

These normalization techniques are not just mathematical conveniences; in the Web3 space, they underpin the reliability and efficiency of decentralized models, ensuring that data-driven insights and decisions are both accurate and equitable across the board.

Automated data cleaning tools

Automated data cleaning tools are indispensable in streamlining the data cleaning process, particularly for large data sets. These tools utilize algorithms to detect and correct errors, fill in missing values, and eliminate duplicates, drastically reducing the manual effort and time required for data cleaning. Among the notable tools are OpenRefine, known for its robust data cleaning and transformation capabilities, and Trifacta Wrangler, which is tailored for quick and precise cleaning of diverse data sets.

These tools facilitate the preparation of clean, accurate data sets for machine learning model development and underscore the importance of thorough data cleaning for reliable and precise machine learning outcomes. Further advancements in data cleaning involve exploring sophisticated techniques, integrating feature engineering and emphasizing data validation, all of which enhance data quality and ensure the integrity of machine learning models.

Advanced data cleaning techniques

Data scrubbing in ML

Data scrubbing, also known as data cleansing, goes beyond basic cleaning to include error correction and the resolution of inconsistencies within a data set. This process involves using algorithms and manual inspection to ensure data accuracy and uniformity. For example, regular expressions can automate the correction of format discrepancies in crypto data entries, such as wallet addresses and transaction IDs, ensuring a uniform data set conducive to analysis.
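As an illustration of regex-based scrubbing, the sketch below normalizes Ethereum-style addresses, which consist of the prefix `0x` followed by 40 hexadecimal characters. The `normalize_address` helper is hypothetical, and converting everything to lowercase is an assumption that discards any mixed-case checksum information, so it only suits pipelines that do not rely on it.

```python
import re

# Optional "0x" prefix followed by exactly 40 hexadecimal characters.
ADDRESS_RE = re.compile(r"^(?:0x)?([0-9a-f]{40})$")


def normalize_address(raw):
    """Return the address as lowercase with a 0x prefix, or None if it is malformed."""
    candidate = raw.strip().lower()
    match = ADDRESS_RE.match(candidate)
    if match is None:
        return None
    return "0x" + match.group(1)


# Hypothetical raw entries with inconsistent formatting.
entries = [
    "  0X" + "AB" * 20 + " ",  # stray whitespace and uppercase
    "cd" * 20,                 # missing prefix
    "0x1234",                  # too short to be an address
]

for entry in entries:
    print(repr(entry), "->", normalize_address(entry))
```

Returning `None` for malformed entries, rather than guessing a correction, leaves the decision about how to handle them to a later cleaning step.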

Noise reduction in machine learning

Noise in data, which includes irrelevant details and random fluctuations, can mask important patterns, especially in Web3 and blockchain contexts. Reducing this noise is key to clarifying the data's signal. Techniques like rolling averages smooth out short-term fluctuations and emphasize longer-term trends, which is useful for time-ordered data such as blockchain transaction records.
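A rolling average can be sketched as a sliding window over a series. The `rolling_mean` helper and the sample series are hypothetical; the window size is a tuning choice that trades smoothness against responsiveness.

```python
def rolling_mean(values, window):
    """Return the mean of each consecutive run of `window` values."""
    if window < 1:
        raise ValueError("window must be at least 1")
    if window > len(values):
        return []
    running = sum(values[:window])
    result = [running / window]
    for i in range(window, len(values)):
        # Slide the window: add the newest value and drop the oldest one.
        running += values[i] - values[i - window]
        result.append(running / window)
    return result


# Hypothetical daily counts with a one-day spike.
daily_counts = [100, 102, 98, 250, 101, 99, 103]

print(rolling_mean(daily_counts, 3))
```

The output has `window - 1` fewer entries than the input, since the first full window only becomes available at the third observation in this example.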

Dimensionality reduction, such as Principal Component Analysis (PCA), also aids in noise reduction by narrowing down variables to those that capture most of the data’s variance, simplifying analysis in the complex Web3 space. PCA is a statistical technique used for dimensionality reduction and data visualization by transforming data into a new coordinate system to identify patterns and correlations.

Feature engineering and data cleaning

Feature engineering, pivotal in enhancing machine learning model performance, involves generating new features from existing data and is closely tied to data cleaning to ensure the integrity of transformations. This includes creating interaction features where combinations of variables may provide stronger predictive insights than individual variables, necessitating clean data for precise formulation.

Additionally, binning, or discretization, converts continuous data into categories. It can improve model efficacy, especially for algorithms adept with categorical inputs, requiring a thorough evaluation of data distribution to prevent bias.
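Binning with fixed edges can be sketched using the standard library's `bisect` module. The edges and labels below are hypothetical and chosen only for illustration; in practice they should come from an inspection of the data distribution.

```python
from bisect import bisect_right

# Hypothetical bin edges and labels: one more label than there are edges.
EDGES = [1.0, 100.0]
LABELS = ["small", "medium", "large"]


def bin_value(value, edges, labels):
    """Map a continuous value to the label of the interval it falls into.

    Intervals are closed on the left: value < edges[0] gets labels[0],
    edges[0] <= value < edges[1] gets labels[1], and so on.
    """
    if len(labels) != len(edges) + 1:
        raise ValueError("need exactly one more label than edges")
    return labels[bisect_right(edges, value)]


amounts = [0.5, 1.0, 42.0, 99.9, 100.0, 5000.0]
print([bin_value(a, EDGES, LABELS) for a in amounts])
```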

Data transformation in ML

Data transformation, a vital aspect of data cleaning, entails modifying data into a more analyzable format or scale, incorporating techniques like normalization, aggregation and feature scaling. Log transformation, for instance, is applied to diminish skewness, thereby stabilizing variance and normalizing data — a beneficial trait for numerous machine learning algorithms. Aggregation mitigates noise and streamlines models by consolidating data, either by summing values over time or categorizing them.
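The effect of a log transformation on skewness can be checked with a short sketch. The sample values are hypothetical, `log1p` (the logarithm of 1 + x) is used as an assumption so that zeros are handled, and the skewness measure is the simple population form.

```python
from math import log1p
from statistics import mean, pstdev


def log_transform(values):
    """Apply log(1 + x) to each non-negative value."""
    if any(v < 0 for v in values):
        raise ValueError("log1p transform expects non-negative values")
    return [log1p(v) for v in values]


def skewness(values):
    """Population skewness: the mean of the cubed standardized values."""
    mu, sigma = mean(values), pstdev(values)
    return mean(((v - mu) / sigma) ** 3 for v in values)


# Hypothetical right-skewed amounts that roughly double at each step.
raw = [1, 2, 4, 8, 16, 32, 64, 128, 256, 512]
logged = log_transform(raw)

print(round(skewness(raw), 2))     # strongly positive: a long right tail
print(round(skewness(logged), 2))  # much closer to zero after the transform
```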

The significance of data validation in machine learning within Web3

Data validation in machine learning plays a critical role in ensuring that data sets adhere to specific project criteria and affirming the effectiveness of prior cleaning and transformation efforts. It encompasses key practices such as schema validation, which involves comparing the data against a predetermined schema to verify the accuracy of its format, type and value range, thus identifying errors that basic cleaning processes might overlook.
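A schema check of the kind described above can be sketched with a plain dictionary. The field names, rules and records below are hypothetical, and real projects would more likely rely on a dedicated validation library; this is only meant to show the idea of checking type and value range against a predetermined schema.

```python
# Hypothetical schema: expected type and optional bounds for each field.
SCHEMA = {
    "tx_id": {"type": str},
    "amount": {"type": float, "min": 0.0},
    "block": {"type": int, "min": 0},
}


def validate(record, schema):
    """Return a list of human-readable problems found in `record`."""
    errors = []
    for field, rule in schema.items():
        if field not in record:
            errors.append(f"{field}: missing")
            continue
        value = record[field]
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if isinstance(value, bool) or not isinstance(value, rule["type"]):
            errors.append(f"{field}: expected {rule['type'].__name__}")
            continue
        if "min" in rule and value < rule["min"]:
            errors.append(f"{field}: below minimum {rule['min']}")
        if "max" in rule and value > rule["max"]:
            errors.append(f"{field}: above maximum {rule['max']}")
    return errors


good = {"tx_id": "0xabc", "amount": 1.5, "block": 100}
bad = {"tx_id": "0xdef", "amount": -2.0, "block": "100"}

print(validate(good, SCHEMA))
print(validate(bad, SCHEMA))
```

Collecting every problem in a list, instead of stopping at the first one, makes it easier to report all issues in a record at once.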

In the context of Web3 and blockchain, where data integrity and trust are paramount, such validation processes are even more crucial. The immutable nature of blockchain makes it essential that data entered into the ledger is accurate and conforms to expected schemas from the outset.

Effective data cleaning, particularly with large data sets, demands scalable techniques, such as distributed processing frameworks like Apache Hadoop, and automated, machine learning-powered cleaners that improve efficiency and reduce errors. Continuous data cleaning and feature selection are vital for predictive modeling: they maintain model accuracy and address data quality issues revealed by model performance analysis.

Best practices in this space include continuous data monitoring, collaborative approaches and thorough documentation to ensure transparency and reproducibility. As the Web3 ecosystem evolves, future data cleaning challenges will likely focus on integrating AI for more automated processes and addressing ethical concerns to ensure fairness in these automated cleaning processes, particularly within blockchain networks where data permanence is a given.