Variational Autoencoder • VAE

What is a variational autoencoder?

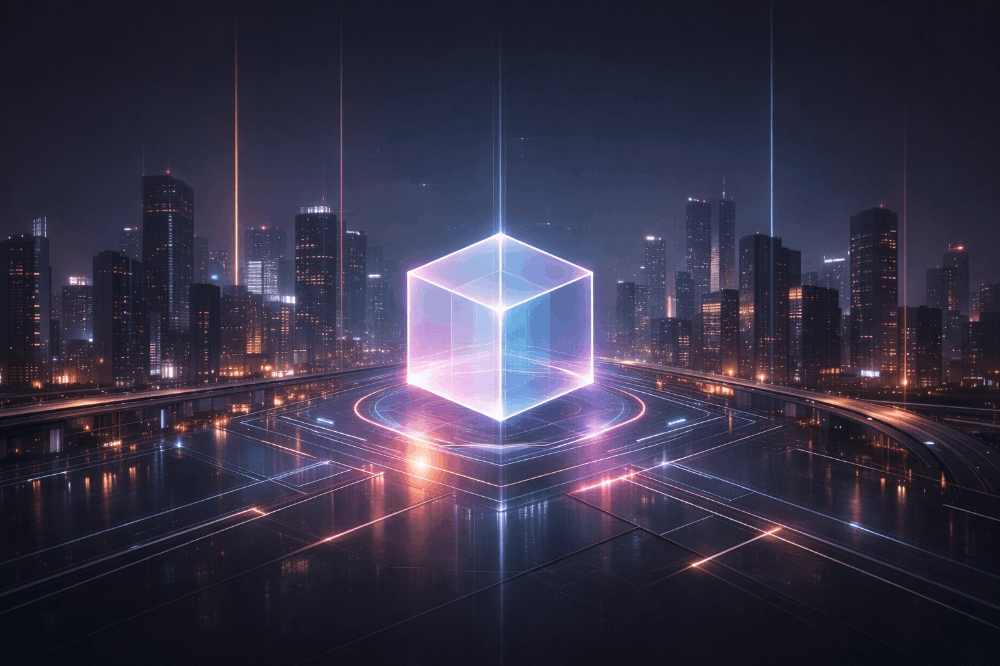

A Variational Autoencoder (VAE) is a generative model built from neural networks. Once trained, it can produce new data similar to the data it learned from. It belongs to the autoencoder family: neural networks that learn efficient codings (compressed representations) of input data.

A traditional autoencoder compresses each input into a single, fixed code and then decompresses that code back into the original data. A VAE makes this encoding probabilistic. For each input, the encoder does not output a single code. It outputs the parameters of a probability distribution over the latent space, usually a Gaussian described by a mean and a variance. Latent points sampled from this distribution are then decoded, and new data can be generated by decoding freshly sampled latent points.
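Generating new data from a trained VAE only requires the decoder. You draw a latent point from the standard normal prior and decode it. The following is a minimal sketch in which `decode`, `generate`, and the fixed `DECODER_WEIGHTS` are hypothetical stand-ins for a trained decoder network. A real decoder is a learned, usually nonlinear, neural network, not a fixed linear map.

```python
import random

# Hypothetical stand-in for a trained decoder: a fixed linear map from a
# 2-dimensional latent space to a 3-dimensional data space.
DECODER_WEIGHTS = [[0.4, 0.3], [-0.6, 0.9], [0.2, -0.1]]


def decode(z):
    """Map a latent point z back to the data space."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in DECODER_WEIGHTS]


def generate(num_samples, latent_dim=2, seed=0):
    """Draw latent points from the prior N(0, I) and decode each one."""
    rng = random.Random(seed)
    samples = []
    for _ in range(num_samples):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        samples.append(decode(z))
    return samples


for sample in generate(3):
    print(sample)
```

Each call with a different seed yields different latent points and therefore different generated samples. This sampling step is what lets a VAE produce new data rather than only reconstructing its inputs.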

Here's a simplified explanation of how a VAE works:

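- Encoder: First, an encoder network maps the input data to two sets of parameters in the latent space: a mean vector μ and a variance vector σ². In practice, the encoder often outputs the log-variance for numerical stability. Together, these parameters define a normal distribution.

- Random sampling: Next, a latent point z is sampled from that normal distribution. This is usually done with the reparameterization trick, z = μ + σ · ε with ε drawn from a standard normal distribution, so that the sampling step stays differentiable during training.

- Decoder: Finally, a decoder network maps the sampled point z back to the input space, producing a reconstruction x′ of the original input.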
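The three steps above can be sketched as a single forward pass. This is a minimal illustration, not a trainable model. The functions `encode`, `sample_latent`, and `decode` and the fixed weight matrices `W_MU`, `W_LOGVAR`, and `W_DEC` are hypothetical. A real VAE learns these mappings with neural networks. The sketch assumes a diagonal Gaussian whose log-variance is output by the encoder.

```python
import math
import random

# Hypothetical toy parameters: a 3-dimensional input, a 2-dimensional latent space.
# In a real VAE these would be learned network weights; here they are fixed for illustration.
W_MU = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
W_LOGVAR = [[-0.1, 0.2, 0.0], [0.05, -0.3, 0.1]]
W_DEC = [[0.4, 0.3], [-0.6, 0.9], [0.2, -0.1]]


def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def encode(x):
    """Encoder: map an input to the mean and log-variance of a diagonal Gaussian."""
    return matvec(W_MU, x), matvec(W_LOGVAR, x)


def sample_latent(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def decode(z):
    """Decoder: map a latent point back to the input space."""
    return matvec(W_DEC, z)


rng = random.Random(0)
x = [1.0, 0.5, -0.5]
mu, logvar = encode(x)
z = sample_latent(mu, logvar, rng)
x_reconstructed = decode(z)
print("mu:", mu)
print("z:", z)
print("x':", x_reconstructed)
```

The randomness enters only through `eps`, so `mu` and `logvar` remain ordinary differentiable functions of the input. That is why the reparameterization form is used in training.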

The VAE is trained by minimizing a loss with two terms. The first is a reconstruction loss that pushes the decoded outputs to match the original inputs. The second is a regularization term, the Kullback-Leibler (KL) divergence between the encoder's distribution and a standard normal prior. This term keeps the latent space smooth and well-structured and reduces overfitting to the training data. The result is a model that can generate new data resembling the data it was trained on.
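The two loss terms can be written out directly. Below is a minimal sketch under stated assumptions. Squared error is used as the reconstruction loss, which corresponds to a Gaussian decoder; binary cross-entropy is a common alternative for binary data. The KL term uses the standard closed form for a diagonal Gaussian measured against a standard normal prior. The function names are hypothetical.

```python
import math


def reconstruction_loss(x, x_reconstructed):
    """Sum of squared errors between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_reconstructed))


def kl_divergence(mu, logvar):
    """Closed-form KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m ** 2 - math.exp(lv) for m, lv in zip(mu, logvar))


def vae_loss(x, x_reconstructed, mu, logvar):
    """Total loss: reconstruction term plus KL regularization term."""
    return reconstruction_loss(x, x_reconstructed) + kl_divergence(mu, logvar)


print(vae_loss([1.0, 2.0], [1.0, 0.0], [1.0], [0.0]))  # → 4.5 (4.0 reconstruction + 0.5 KL)
```

The KL term is zero only when every latent dimension has mean 0 and variance 1. It grows as the encoder's distributions drift away from the prior, which is how it keeps the latent space well-formed.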

VAEs have been applied to tasks such as generating human faces, handwriting styles, and digital art.

The basic scheme of a variational autoencoder. The model receives x as input, and the encoder compresses it into the latent space. The decoder takes a point sampled from the latent space and produces x′, which should be as similar as possible to x.