The Ethical Implications of Artificial Intelligence: Balancing Innovation and Responsibility

The allure of AI is undeniable. It offers us the keys to unlock mysteries that have long eluded human comprehension, from unraveling the complexities of climate change to decoding the human genome. In healthcare, AI algorithms have already matched or outperformed human doctors on certain narrow diagnostic tasks. In transportation, self-driving cars promise to make our roads safer and more efficient. The possibilities seem endless, limited only by our imagination and the continued growth of computing power.

But as we rush headlong into this brave new world, we must pause to consider the ethical implications of our creations. For every problem AI solves, it seems to create a new ethical dilemma. As we delegate more decision-making to machines, we must ask ourselves: Who is responsible when AI makes a mistake? How do we ensure that AI systems are fair and unbiased? And perhaps most importantly, how do we prevent AI from being used in ways that harm individuals or society as a whole?

Consider the case of autonomous vehicles. On the surface, the idea of cars that can navigate our streets without human intervention seems like an unequivocal good. After all, human error is a contributing factor in the vast majority of traffic accidents. But what happens when an autonomous vehicle is faced with an unavoidable accident? Should it prioritize the safety of its passengers over pedestrians? Should it make decisions based on the age or social status of potential victims? These are not merely technical questions, but profound ethical dilemmas that force us to confront our own values and moral frameworks.

The issue of bias in AI systems is another ethical minefield. AI algorithms are only as good as the data they're trained on, and if that data reflects societal biases, the AI is likely to perpetuate and can even amplify those biases. We've already seen examples of this in facial recognition systems that struggle to accurately identify people of color, or in hiring algorithms that discriminate against women. As AI becomes more deeply integrated into our society, there's a real risk that it could exacerbate existing inequalities and create new forms of discrimination.
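To make the idea of measurable bias a little more concrete, here is a minimal sketch of one simple check: comparing how often a system makes a favorable decision for each group. Everything in it is an assumption for illustration. The function names, the group labels, and the decisions themselves are made up, and a real audit would look at far more than a single ratio.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of favorable decisions per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system made a favorable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


# Hypothetical, made-up decisions from a hiring model (not real data).
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(round(ratio, 2))
```

In this made-up example, one group is selected twice as often as the other, so the ratio comes out at one half. A common rule of thumb in US employment contexts treats a ratio below 0.8 as a signal that the outcome deserves closer review, though a single number like this can neither prove nor rule out discrimination on its own.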

Then there's the question of privacy and surveillance. AI-powered systems have an unprecedented ability to collect, analyze, and act upon vast amounts of personal data. This capability can be used for great good, such as improving public health outcomes or enhancing public safety. But it also opens the door to Orwellian scenarios of mass surveillance and control. How do we balance the potential benefits of AI-driven data analysis with the fundamental right to privacy? And who gets to make these decisions?

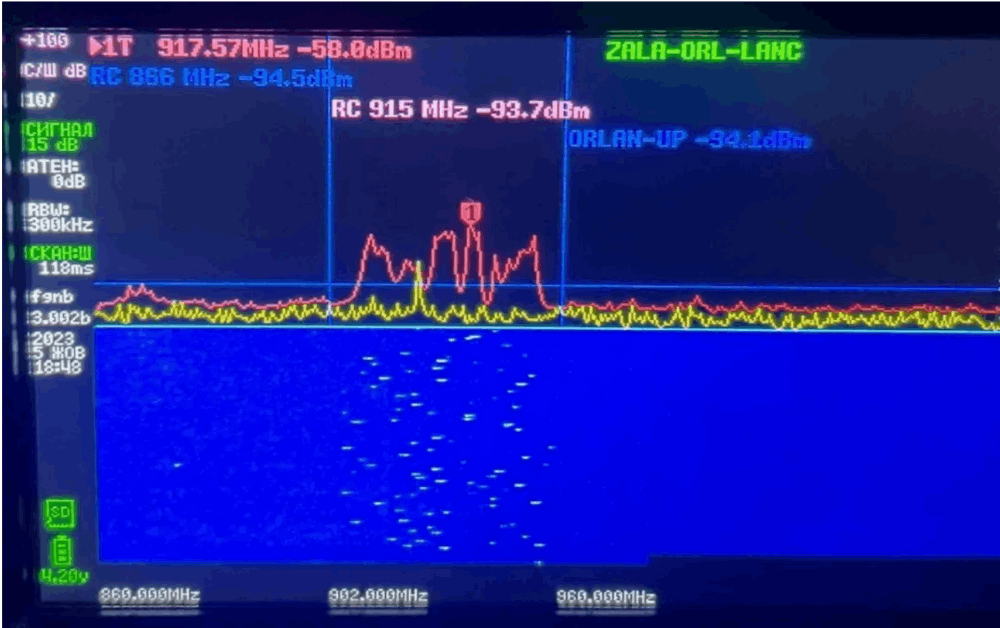

The use of AI in warfare and law enforcement presents its own set of ethical challenges. Autonomous weapons systems, capable of selecting and engaging targets without human intervention, are no longer the stuff of science fiction. While proponents argue that such systems could reduce military casualties and make warfare more "humane," critics warn of the dangers of removing human judgment from matters of life and death. Similarly, the use of predictive policing algorithms raises questions about due process and the presumption of innocence. If an AI system predicts that an individual is likely to commit a crime, how should law enforcement respond?

As AI systems become more sophisticated, we must also grapple with questions of consciousness and rights. At what point does an AI system become deserving of moral consideration? If we create artificial beings capable of experiencing suffering, do we have an ethical obligation to them? These may seem like abstract philosophical questions, but as AI continues to advance, they could become very real ethical and legal issues.

The challenge of AI ethics is compounded by the rapid pace of technological advancement. Our ethical frameworks and legal systems, developed over centuries, are struggling to keep up with the breakneck speed of AI innovation. By the time we've debated and legislated on one ethical issue, a dozen new ones have emerged. This creates a dangerous gap between our technological capabilities and our ethical readiness to wield them responsibly.

So, how do we bridge this gap? How do we ensure that our AI future is one that aligns with our values and serves the greater good of humanity?

First and foremost, we need to prioritize ethics in the development of AI from the very beginning. Too often, ethical considerations are treated as an afterthought, something to be addressed once the technology is already developed and deployed. Instead, we need to adopt an "ethics by design" approach, where ethical considerations are baked into the development process from the outset.

This means fostering a culture of ethical awareness and responsibility among AI researchers and developers. It means creating diverse and interdisciplinary teams that include ethicists, social scientists, and representatives from affected communities alongside technical experts. It means developing robust frameworks for assessing the ethical implications of AI systems before they're deployed.

We also need to prioritize transparency and accountability in AI systems. The "black box" nature of many AI algorithms, where even their creators can't fully explain how they arrive at their decisions, is incompatible with ethical use in many high-stakes domains. We need to develop AI systems that are explainable and interpretable, allowing for meaningful human oversight and intervention.

Education will play a crucial role in preparing society for an AI-driven future. We need to foster AI literacy not just among technical experts, but among policymakers, business leaders, and the general public. Only with a broad-based understanding of AI's capabilities and limitations can we have informed debates about its ethical use.

Regulation will inevitably play a role in ensuring the responsible development and deployment of AI. But given the global nature of AI development and the rapid pace of innovation, traditional regulatory approaches may not be sufficient. We need to explore new models of governance that can keep pace with technological change while still providing meaningful oversight.

Perhaps most importantly, we need to engage in an ongoing, global dialogue about the ethics of AI. This isn't a conversation that can be confined to academia or Silicon Valley boardrooms. It needs to involve diverse voices from around the world, representing different cultural perspectives and value systems. After all, the impacts of AI will be felt globally, and its ethical use must reflect global values.

As we navigate this ethical landscape, we must resist the temptation to view AI in simplistic terms of good or evil. AI is a tool, and like any tool, its impact depends on how we choose to use it. The same AI technologies that could be used to create autonomous weapons could also be used to solve global challenges like climate change or disease. The AI systems that could enable mass surveillance could also help us create more efficient and sustainable cities.

The key lies in our choices. Will we use AI to amplify our best qualities as a species: our creativity, our compassion, our drive to explore and understand? Or will we allow it to magnify our worst tendencies: our biases, our greed, our capacity for destruction?

The future of AI is not predetermined. It's a future we are actively creating with every decision we make today. By prioritizing ethics, fostering responsibility, and engaging in open dialogue, we can shape an AI future that enhances human flourishing and addresses the grand challenges facing our species and our planet.

The ethical implications of AI are not problems to be solved once and for all, but ongoing challenges that will require constant vigilance, adaptation, and reflection. As we continue to push the boundaries of what's possible with AI, we must never lose sight of the fundamental question: What kind of world do we want to create?

In the end, the greatest ethical challenge posed by AI may be this: Will we use this powerful technology to become better versions of ourselves, or will we allow it to diminish our humanity? The choice, as always, is ours to make.