The Ethical Implications of Artificial Intelligence: Navigating the Future

Artificial Intelligence (AI) has made significant strides over the past few decades, moving from theoretical concepts in computer science to practical applications that influence nearly every aspect of modern life. From autonomous vehicles and medical diagnostics to personalized recommendations and intelligent personal assistants, AI technologies are rapidly transforming industries and reshaping society. However, this progress also raises ethical questions that must be addressed to ensure that AI technologies are developed and deployed in line with societal values and human rights.

THE PROMISE OF AI

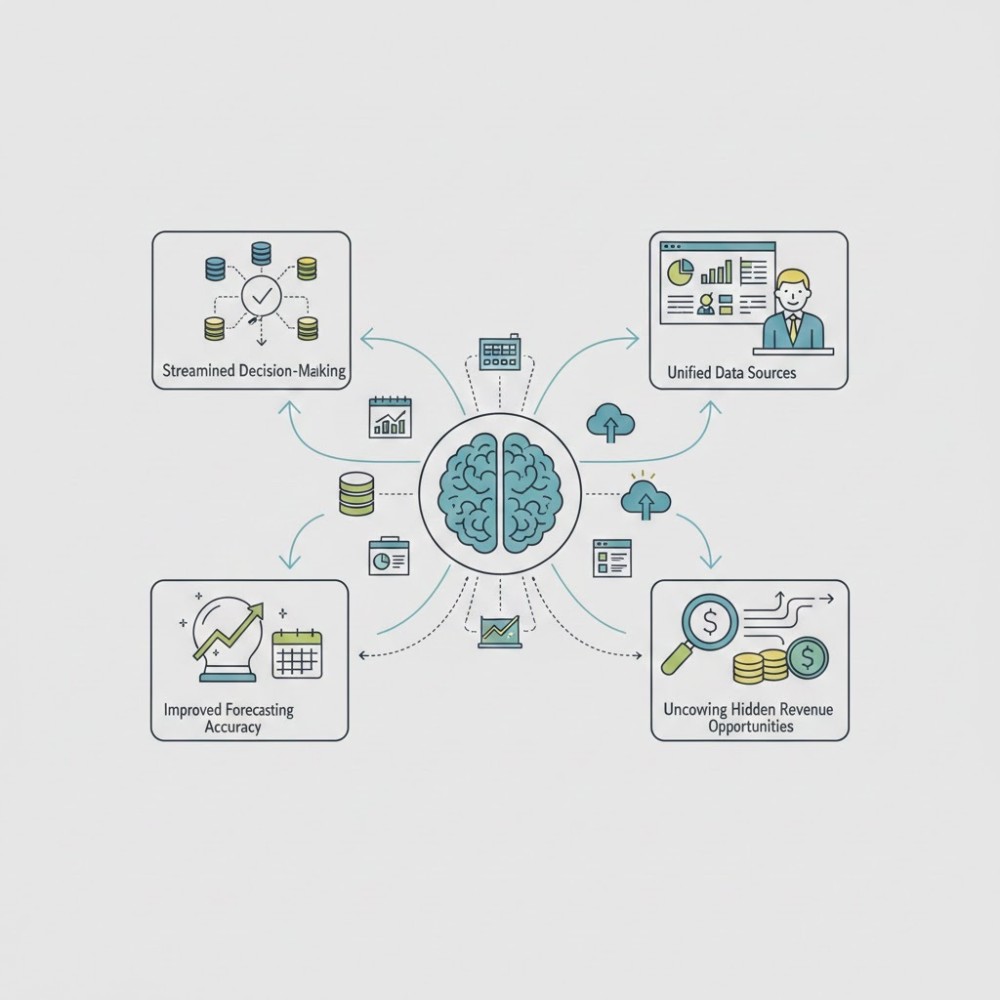

Before delving into the ethical challenges, it is essential to acknowledge the many benefits AI offers. AI systems can process and analyze vast amounts of data far more efficiently than humans, leading to innovations that can improve quality of life. In healthcare, AI can assist in early disease detection, optimize treatment plans, and even predict disease outbreaks. In transportation, autonomous vehicles promise to reduce accidents caused by human error, enhance mobility for those unable to drive, and decrease traffic congestion.

In the business sector, AI-driven analytics help companies make better-informed decisions, streamline operations, and improve customer experiences. Additionally, AI-powered tools can help address environmental issues by optimizing energy use, improving waste management, and supporting the development of sustainable practices.

ETHICAL CHALLENGES OF AI

Despite its potential, AI presents several ethical challenges that need to be addressed to ensure that its benefits are realized without compromising human values. These challenges include bias and fairness, privacy concerns, accountability, and the impact on employment.

BIAS AND FAIRNESS

One of the most significant ethical concerns in AI is the presence of bias in AI algorithms. AI systems learn from data, and if the training data is biased, the AI will likely produce biased outcomes. This can perpetuate and even exacerbate existing societal inequalities. For example, facial recognition technology has been shown to have higher error rates for people with darker skin tones, leading to concerns about its use in law enforcement and surveillance.

To address bias, it is crucial to ensure that training datasets are diverse and representative. Transparency in AI development and decision-making processes can also help identify and mitigate biases. Researchers and developers must prioritize fairness and actively work to eliminate discriminatory outcomes from AI systems.
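One concrete way to identify the kind of bias described above is to measure a system's error rate separately for each demographic group and compare the results. The following is a minimal sketch of such a check, assuming binary predictions and known group labels; the function names, group labels, and toy data are hypothetical and for illustration only. A real audit would use much larger samples and would typically look at false positives and false negatives separately.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return the fraction of misclassified examples for each group label."""
    errors = defaultdict(int)
    totals = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = "match", 0 = "no match"; "A" and "B" are placeholder groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)                 # {'A': 0.0, 'B': 0.75}
print(max_error_gap(rates))  # 0.75
```

A large gap between groups does not by itself explain why the system behaves differently, but it flags where closer scrutiny of the training data and model is needed.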

PRIVACY CONCERNS

AI's ability to process vast amounts of data raises significant privacy concerns. The collection and analysis of personal data can lead to intrusive surveillance and the erosion of individual privacy. For instance, AI-driven advertising platforms can track user behavior across different websites and devices, creating detailed profiles that can be used for targeted advertising. While this can enhance user experiences, it also raises questions about consent and the potential misuse of personal information.

Regulations such as the General Data Protection Regulation (GDPR) in the European Union aim to protect individuals' privacy and give them control over their data. However, there is a need for global standards and frameworks to address privacy concerns effectively. Companies developing AI technologies must adopt privacy-by-design principles, ensuring that privacy considerations are integrated into every stage of the development process.

ACCOUNTABILITY AND TRANSPARENCY

Another ethical issue is accountability. When AI systems make decisions that significantly affect individuals' lives, such as in healthcare or criminal justice, there must be mechanisms for holding the people and organizations that build and deploy these systems accountable for their outcomes. However, the complexity and opacity of many AI algorithms make it difficult to understand how decisions are reached, raising concerns about transparency and trust.

Developers and organizations must strive for explainable AI, where the decision-making processes of AI systems can be understood and scrutinized. This can involve creating models that are interpretable by design or developing tools that provide insights into how complex models reach their conclusions. Additionally, establishing clear guidelines and regulations for the use of AI in critical areas can help ensure accountability.
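To illustrate what "interpretable by design" can mean, the sketch below uses a simple linear scoring model, where the final score is just a bias plus a sum of weight-times-value terms, so each feature's contribution to a given decision can be read off directly. This is a minimal sketch under stated assumptions: the function name, feature names, weights, and input values are all hypothetical, and real systems usually involve far more features and careful validation.

```python
def score_with_explanation(weights, bias, features):
    """Score an input with a linear model and report each feature's contribution.

    Because the score is a plain sum of weight * value terms, every
    contribution is directly visible: the model is interpretable by design.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical weights and (pre-scaled) input features, for illustration only.
weights = {"income": 0.5, "existing_debt": -0.8, "years_employed": 0.3}
bias = -1.0
applicant = {"income": 4.0, "existing_debt": 2.0, "years_employed": 5.0}

score, contributions = score_with_explanation(weights, bias, applicant)

# List features by the size of their effect on this particular decision.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:+.2f}")
```

The trade-off is that such simple models may be less accurate than complex ones on some tasks, which is why explanation tools for more opaque models are also an active area of work.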

IMPACT ON EMPLOYMENT

The impact of AI on employment is another significant ethical concern. While AI has the potential to create new jobs and industries, it also poses a risk of displacing workers, particularly in roles that involve routine tasks. The automation of jobs in manufacturing, transportation, and even some service sectors could lead to widespread unemployment and economic disruption.

Addressing this challenge requires proactive measures, such as investing in education and training programs to equip workers with the skills needed for the jobs of the future. Governments, businesses, and educational institutions must collaborate to create a workforce that can adapt to the changing job landscape. Additionally, exploring policies such as universal basic income or job transition programs can help mitigate the impact on displaced workers.

NAVIGATING THE ETHICAL LANDSCAPE

To navigate the ethical landscape of AI, a multi-stakeholder approach is essential. Governments, industry leaders, researchers, and civil society organizations must work together to develop ethical guidelines and standards that govern the development and deployment of AI technologies. These guidelines should be grounded in human rights principles, ensuring that AI systems respect human dignity, autonomy, and privacy.

ETHICAL AI FRAMEWORKS

Several organizations and initiatives are already working towards creating ethical AI frameworks. For example, the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has developed a comprehensive set of principles to guide the ethical development of AI. Similarly, the Partnership on AI, a consortium of tech companies, academic institutions, and civil society organizations, aims to promote responsible AI practices.

These frameworks often emphasize principles such as transparency, fairness, accountability, and inclusivity. However, translating these principles into practice can be challenging, requiring ongoing dialogue and collaboration among stakeholders.

REGULATION AND GOVERNANCE

Effective regulation and governance are crucial for ensuring that AI technologies are developed and used ethically. Governments play a key role in creating legal frameworks that protect individuals' rights and promote responsible AI practices. However, regulation must strike a balance between encouraging innovation and safeguarding ethical standards.

International cooperation is also essential, as AI technologies often have global implications. Collaborative efforts to develop harmonized standards and policies can help ensure that AI is used for the common good and that its benefits are distributed equitably.

CONCLUSION

As AI continues to evolve and become more integrated into our daily lives, addressing its ethical implications is paramount. While AI holds immense promise for improving quality of life and solving complex problems, it also presents significant ethical challenges that must be carefully navigated. By prioritizing fairness, transparency, accountability, and privacy, and by fostering collaboration among stakeholders, we can develop AI technologies that align with our values and contribute to a just and equitable society. The ethical journey of AI is ongoing, requiring constant vigilance and adaptation to ensure that the technology serves humanity's best interests.