Local Llama Setup: A Python Developer's Guide

As using chatGPTis API is becoming more and more expensive and number of tokens are limited there comes a point in your life that have to look for alternatives. Thats where Llama comes in!

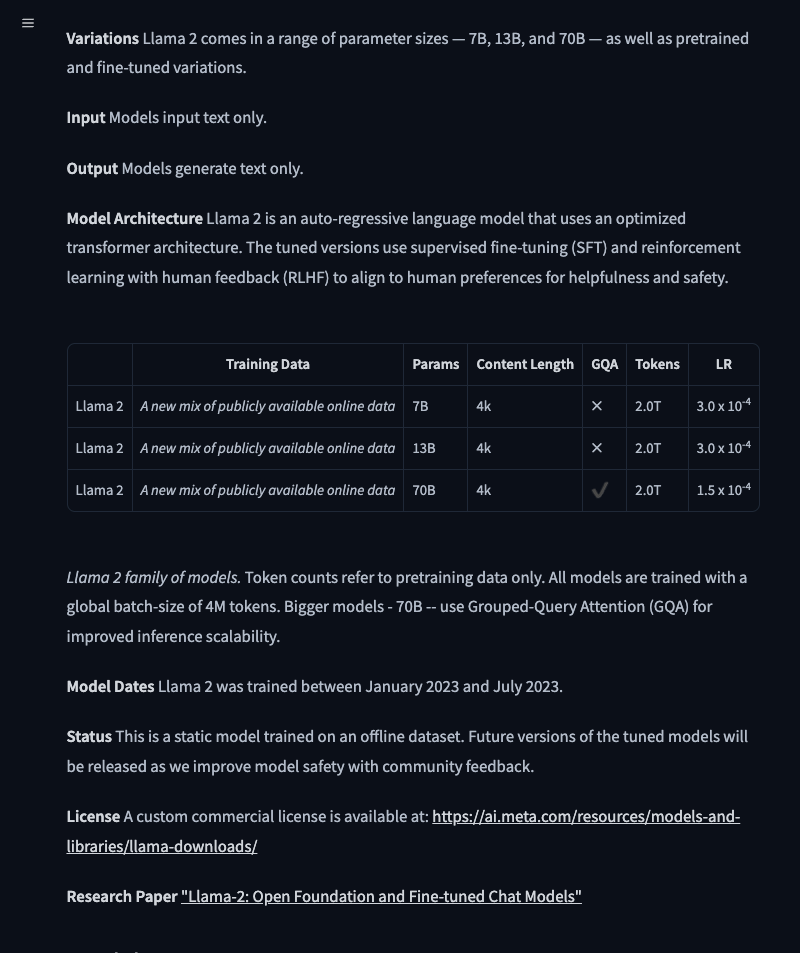

Alternatively you can use smaller models (3B parameters instead of 7B)

Use bitsandbytes for 8-bit quantization, which reduces memory usage significantly.

If you don’t have strong GPU can always outsource to cloud options that are out there like Google Colab, Hugging Face Inference API, RunPod

Accessing Llama Models

To start off Hugging Face is the primary platform used for accessing Llama models(e.g., meta-llama/Llama-2-7b-chat-hf).

Hugging Face - The AI community building the future.

We're on a journey to advance and democratize artificial intelligence through open source and open science.huggingface.co

Create your account in Hugging Face👆 to start using LLM models provided by Llama.

If your ambitious can as well create own model if not there are a bunch of models to choose from. 🤖

Most people including me just need text-to-text model so typical choose would be meta-llama/Llama-2–7b-chat-hf.

Once the model has been selected be sure to request access to the model by adding credentials.

huggingface-cli login

Then you will have to login in terminal to use the models. In your huggingface profile go to Settings > Access Tokens, generate your access token that you will paste in.

Using the Model

In your python app we should use conda instead of regular venv be sure to install it activating it is similar as venv.

Environments - Anaconda documentation

Environments in conda are self-contained, isolated spaces where you can install specific versions of software packages…docs.anaconda.com

//Required instalation for conda PyTorch conda install pytorch torchvision torchaudio cpuonly -c pytorch //Required python packages for huggingface etc pip install transformers accelerate sentencepiece huggingface_hub //To reduce memory usage you can as well install pip install bitsandbytes //Activate conda conda activate myenv

For this demonstration will just make it a simple as possible in main.py the power lies when implementing RAG (Retrieval-Augmented Generation)) or fine tuning the model.

import transformers

import torch

def main():

# Load Llama model using transformers pipeline

pipeline = transformers.pipeline(

"text-generation",

model="meta-llama/Llama-2-7b-chat-hf", # Replace with your model path if using a local model

model_kwargs={"torch_dtype": torch.bfloat16},

device_map="auto"

)

# Start the Llama pipeline

while True:

# Get user input

query = input("\nYou: ")

# Exit condition

if query.lower() in ["exit", "quit"]:

print("Goodbye!")

break

# Handle the query

try:

# Construct the prompt

messages = [

{"role": "user", "content": query},

]

# Generate a response using the Llama model

outputs = pipeline(

messages,

max_new_tokens=256, # Adjust as needed

)

# Extract and print the response

response = outputs[0]["generated_text"][-1]["content"]

print(f"Bot: {response}")

except Exception as e:

print(f"Error handling query: {e}")

if __name__ == "__main__":

main()Note: The model i used computation heavy(CPU, GPU) and due to enormous parameters this particular 7 Billion parameters so if it doesn't break and hangs it may be due to weak PC.

Conclusion

As AI doesn’t seem to fade and hype keeps on going good good to be more familiarized with it if not building own model might as well implement in own project with fine tuning or implementing it with RAG.

Of course IF your PC can handle it. 😉