The Dark Side of Facial Recognition: Beyond Convenience Lies a Web of Concerns

Development of facial recognition systems began in the 1960s, when they first appeared as computer applications. In recent years they have spread to smartphones and to other technologies such as robotics. Because computerized facial recognition measures a person's physiological characteristics, it is classed as a biometric technology. Although it is less accurate than iris recognition, fingerprint recognition, palm recognition, or voice recognition, it is widely adopted because it is contactless.[3] Facial recognition systems have been deployed in advanced human–computer interaction, video surveillance, law enforcement, passenger screening, employment and housing decisions, and automatic image indexing.[4][5]

Facial recognition technology (FRT) has become ubiquitous. From unlocking smartphones to securing borders, FRT promises convenience and security. However, beneath this shiny veneer lurks a dark side, raising serious concerns about privacy, bias, and even the potential for a dystopian future.

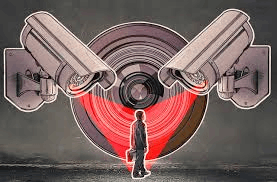

Privacy in Peril: A World Under Surveillance

FRT systems rely on vast databases of facial scans, often collected without explicit consent. This raises concerns about mass surveillance, in which governments or corporations can track our movements and activities in real time. The chilling prospect of constant monitoring erodes our sense of privacy and fosters a climate of fear and self-censorship.

Bias and Inaccuracy: When Algorithms Get It Wrong

FRT algorithms are not infallible. Studies have found that many systems are less accurate for some demographic groups, particularly people of color. Such errors take two forms: false positives, where an innocent person is wrongly matched to someone else, for example a suspect in a criminal database, and false negatives, where the system fails to recognize a person it should, for example a wanted suspect. These biases, which often stem from training data that underrepresents certain groups or reflects existing societal prejudices, can perpetuate discrimination and erode trust in law enforcement.
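
To make the difference between false positives and false negatives concrete, here is a minimal Python sketch of how an evaluator might measure both error rates separately for each demographic group. It assumes a system that outputs a similarity score for each pair of face images and declares a match when the score meets a threshold. The function name `error_rates_by_group`, the group labels, the scores, and the thresholds are all hypothetical, chosen only for illustration, and are not drawn from any real system or study.

```python
from collections import defaultdict


def error_rates_by_group(records, threshold):
    """Return {group: (false_positive_rate, false_negative_rate)}.

    Each record is (group, is_same_person, score): is_same_person is the
    ground truth for a pair of face images, and score is the system's
    similarity score for that pair. This sketch assumes the system declares
    a match when score >= threshold. A rate is None if the group has no
    pairs of that kind.
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, is_same_person, score in records:
        predicted_match = score >= threshold
        c = counts[group]
        if is_same_person:
            c["pos"] += 1
            if not predicted_match:
                c["fn"] += 1  # false negative: a genuine match was missed
        else:
            c["neg"] += 1
            if predicted_match:
                c["fp"] += 1  # false positive: two different people were matched

    rates = {}
    for group, c in counts.items():
        fpr = c["fp"] / c["neg"] if c["neg"] else None
        fnr = c["fn"] / c["pos"] if c["pos"] else None
        rates[group] = (fpr, fnr)
    return rates


# Hypothetical toy data: (group, is_same_person, similarity_score).
TOY_RECORDS = [
    ("group_a", False, 0.30),
    ("group_a", False, 0.45),
    ("group_a", False, 0.72),
    ("group_a", True, 0.91),
    ("group_b", False, 0.65),
    ("group_b", False, 0.78),
    ("group_b", False, 0.40),
    ("group_b", True, 0.60),
]

# Compare two hypothetical thresholds to show the trade-off between error types.
for threshold in (0.6, 0.75):
    for group, (fpr, fnr) in sorted(error_rates_by_group(TOY_RECORDS, threshold).items()):
        print(f"threshold={threshold} {group}: FPR={fpr:.2f} FNR={fnr:.2f}")
```

With this made-up data, raising the threshold from 0.6 to 0.75 removes group_a's false positive but causes group_b's genuine pair to be missed, while group_b keeps a higher false positive rate at both settings. Reporting both rates per group, rather than a single overall accuracy figure, is what exposes this kind of disparity: an aggregate number can look acceptable while one group bears most of the errors.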

A Dystopian Nightmare: Facial Recognition as a Tool for Control

The potential for misuse extends well beyond routine security applications. Authoritarian regimes can use FRT to suppress dissent and identify political opponents, and the ability to track individuals' movements and activities can be used to manipulate behavior and control populations. A dystopian future in which facial recognition serves as a tool for oppression is a frightening possibility.

Striking a Balance: Regulation and Oversight

FRT undeniably offers benefits, but these must be weighed against the potential risks. Clear regulations are needed to limit data collection, ensure transparency in algorithm development, and establish robust oversight mechanisms. Individuals must be empowered to control their facial data and opt out of FRT systems they find intrusive.

The Future of Facial Recognition: A Choice We Must Make

The future of facial recognition technology hinges on the choices we make today. Do we prioritize security at the expense of our privacy and freedom? Or can we harness the power of FRT responsibly, ensuring it serves society without creating a surveillance state? The answer lies in fostering open dialogue, enacting responsible regulations, and demanding transparency from developers and governments alike.

The path forward requires a delicate balance, ensuring that facial recognition technology remains a tool for good, not an instrument of control.

References

- [1] "Face Recognition based Smart Attendance System Using IoT" (PDF). International Research Journal of Engineering and Technology. 9 (3): 5. March 2022.

- [2] Thorat, S. B.; Nayak, S. K.; Dandale, Jyoti P. (2010). "Facial Recognition Technology: An analysis with scope in India". arXiv:1005.4263 [cs.MA].

- [3] Chen, S. K.; Chang, Y. H. (2014). 2014 International Conference on Artificial Intelligence and Software Engineering (AISE2014). DEStech Publications, Inc. p. 21. ISBN 9781605951508.

- [4] Bramer, Max (2006). Artificial Intelligence in Theory and Practice: IFIP 19th World Computer Congress, TC 12: IFIP AI 2006 Stream, August 21–24, 2006, Santiago, Chile. Berlin: Springer Science+Business Media. p. 395. ISBN 9780387346540.

- [5] SITNFlash (October 24, 2020). "Racial Discrimination in Face Recognition Technology". Science in the News. Retrieved July 1, 2023.

- [6] "Facial Recognition Technology: Federal Law Enforcement Agencies Should Have Better Awareness of Systems Used By Employees". www.gao.gov. Retrieved September 5, 2021.

- [7] Help Net Security (August 27, 2020). "Facing gender bias in facial recognition technology". Help Net Security. Retrieved July 1, 2023.