The Ultimate Guide for Making the Best Career Choices in Tech

“How hard can it be to find a new Java developer?”

Our engineering director asked me this question, frustrated with my lack of progress in finding a new software developer for our project.

By then, I had interviewed several candidates and concluded they would not be successful in the role.

Our team found a good fit a couple of weeks after the nudge, so I never had to explain the real reason why I did not recommend the previous candidates.

It was us, not them.

My reservation, which I helped the candidates understand, was that while they could meet the immediate requirements of the project (we would have been fortunate to have them aboard), working with our technology stack was not an optimal choice for them in the long run.

Now that enough time has passed and that entire project is no longer active, I can explain my assessment using a structured framework.

In this story, I explain that framework and share a couple of personal anecdotes illustrating why it is relevant whether you are just starting your professional career or are well into it.

The Framework: Hierarchy of Career Priorities

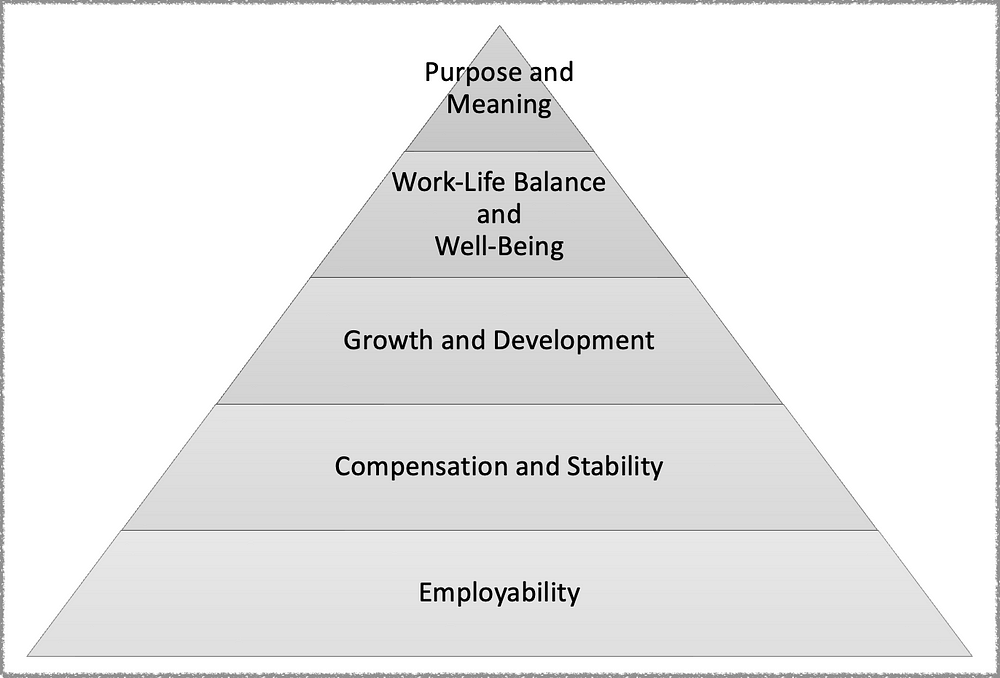

Hierarchy of Career Priorities: an adaptation of Maslow’s psychological theory of the “Hierarchy of Needs.”

Let’s start with an adaptation of Maslow’s Hierarchy of Needs, which I’ll call the “Hierarchy of Career Priorities.”

In Maslow’s theory, people work through layers of priorities before reaching a state of self-actualization, where one has the continuous desire to become the most one can be. My framework for career success works the same way, with analogous layers as follows:

- Employability. This foundational layer matches Maslow’s “Physiological Needs” layer. It represents the basic requirements for entering the workforce, including relevant education, training, degrees, and skills demanded by the job market.

- Compensation and Stability. This layer represents having a steady job, adequate compensation matching the required time and skills to do the job, and a supportive work environment.

- Growth and Development. This layer involves continuous learning, skill enhancement, and professional development. In this layer, a job allows and requires professionals to develop their skills to perform the role and retain their future employability. The layer also includes the viability and growth trends of the project’s business case.

- Work-Life Balance and Well-Being. This layer is about supporting the employee’s overall well-being: maintaining a healthy balance between work and personal life, managing stress, and prioritizing mental and physical health.

- Purpose and Meaning. The pinnacle of the “Hierarchy of Career Priorities,” where individuals seek purpose and meaning in their chosen careers. This layer involves aligning personal values with the professional career, making a positive impact, and finding fulfillment beyond material and external rewards.

Ideally, employment choices should favor a good alignment of these layers. It is impossible to align them perfectly at all times, but awareness of imbalances as they arise allows one to correct them sooner rather than later.

Here are a few common examples of such imbalances:

- A generous compensation package incentivizing people to sacrifice their well-being with long work weeks and stressful air travel routines.

- Overstaying in a role with a stagnant “growth and development” layer.

- Becoming overly skilled in an aging technology stack, trading growth in the current job for employability in the broader job marketplace.

I’ll reference this framework throughout the following sections, listing some of the most important lessons I learned about success and failure throughout my career.

Lesson #1: Technology Stacks Define Employability

This lesson is more of a blind spot for project managers and HR leaders than for software developers.

The advice of “Learn or Earn” works when you are starting out in your career, but it morphs into “Learn or Don’t Earn” as you progress.

Over the last 40 years, the software industry has gone from co-dependent, job-for-life relationships built around a few on-prem technology stacks to impossibly short tenures, leading employers to reconsider whether “job hopping” is really a negative trait.

As a result, successful employees used to lifelong tenures now have to anticipate technology trends and grapple with the reality of becoming obsolete while standing still.

Note that I am not passing personal judgment on any technology. I am simply reflecting on hiring practices in general, where employers increasingly use automation to match candidate resumes to opportunities.

HR folks may point to periodic formal training as a way to stay current with trends while working with aging technology. However, software developers and employers alike realize that skills not practiced on the job fade quickly, through a mix of forgetfulness and irrelevance.

As an example, when an employer needs someone with five years of experience in React, their vetting process will almost certainly discard a resume showcasing long experience with JavaServer Faces, even if it contains a bullet point about a React class taken a couple of years ago. Conversely, any company using JavaServer Faces as its primary UI framework today will most likely struggle to attract new talent.

For those reasons, and acknowledging that this may be a difficult realization for employers: from a continued-employability perspective, a product’s technology stack is the most crucial factor for employees.

Yes, a bad boss can make one quit, but even the best of bosses will struggle to recruit people to work with a technology stack with dwindling demand in the job market.

While I understand why product managers and organizations are not keen on rearchitecting or rewriting portions of the technology stack for employability reasons, they should consider holding periodic conversations with HR leaders to understand what effect their projects may have on the future employability of their staff.

Lesson #2: Three Years as the Period of Obsolescence

Technology stacks may stay relevant for a long time. Look no further than the enduring staying power of programming languages like COBOL or hardware technology like mainframes.

However, not all technology stacks stay relevant.

For everything else not powering the business core of the modern world, three years is a magic period where new technology can suddenly fall out of favor with the technical community.

If you are deciding on the next step of your career or the next hot technology to learn, skip the temptation of chasing what is popular today and look at what is trending towards popularity within the next three years.

Note that "three years" is primarily an empirical observation.

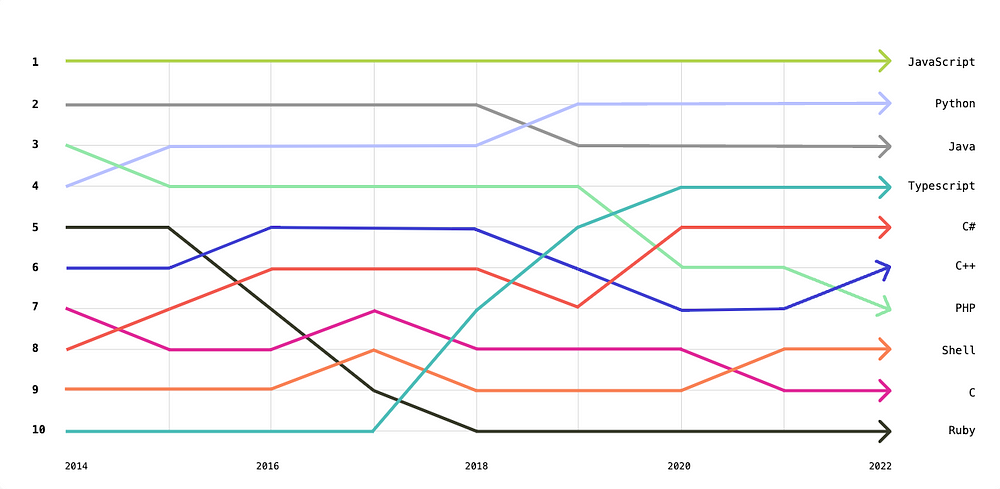

Credit to GitHub’s article “The state of the Octoverse 2023.”

To illustrate that observation, let's start with the progression in the adoption of programming languages over the past decade, measured indirectly through GitHub's analysis of its user activity.

Why use programming languages as a proxy for technology stacks? Because the adoption of programming languages correlates with shifts in the technology landscape, such as Ruby’s fortunes being tied to the popular Rails framework, COBOL adoption relying almost exclusively on the mainframe ecosystem, and C# being the programming language of choice for the .NET platform.

In three years, between 2015 and 2018, the Ruby programming language fell sharply through the rankings, from number 5 to number 10, fading behind the growing popularity of TypeScript, a superset of the reigning champion of programming-language popularity: JavaScript.

Over an equal span of three years, between 2017 and 2020, TypeScript skyrocketed from number 10 to number 4.

And look at what happened to PHP, a stalwart of employability that held out against the proliferation of web-development frameworks until 2019, when it started dropping quickly through the ranks, from #4 to #7 by 2022.

If you were starting your career today, mastering a programming language that is not mainstream within your field of interest would be a liability for your future employment prospects.

By “mainstream,” I mean choices such as JavaScript for front-end development, Python for machine learning, and Go for systems development. You may disagree with my specific examples (for example, choosing Rust over Go), but the principle remains.

If you prioritize employability, find the moving center of the mainstream and aim for it.

The following section will cover the risk of overshooting that target.

Lesson #3: Watch Out for That Bleeding Edge

Anyone who has regularly ridden a bus has learned to make a critical judgment call.

That call happens when you are late walking up to the stop and can see from a distance that the bus is already loading the passengers. In that moment, you must decide whether to sprint toward the stop or wait for the next bus.

You also learn that chasing an already moving bus is rarely worth it.

While looking at future trends, it is essential to keep an eye on employability over hype. I don’t mean people should ignore new trends, since jumping on them can be rewarding in itself, but I would not advise going all in on those trends without a hedge: advancing the “Growth and Development” layer in other ways, such as through high-visibility or leadership positions.

Be advised that life on that edge involves a lot of course-correcting and damage control while users try to build worlds atop a moving blade. In other words, work-life balance tends to suffer.

My cautionary tale here comes from first-hand experience during the cut-throat years between 2013 and 2015 (another three-year span), when Cloud Foundry and Docker initially defined and dominated the container orchestration world.

My employer teamed up with the creator of Cloud Foundry and launched its public offering in early 2014. Known as Bluemix, that offering quickly became the world’s largest Cloud Foundry deployment, with over one million users. By many accounts, it was an unmistakable success.

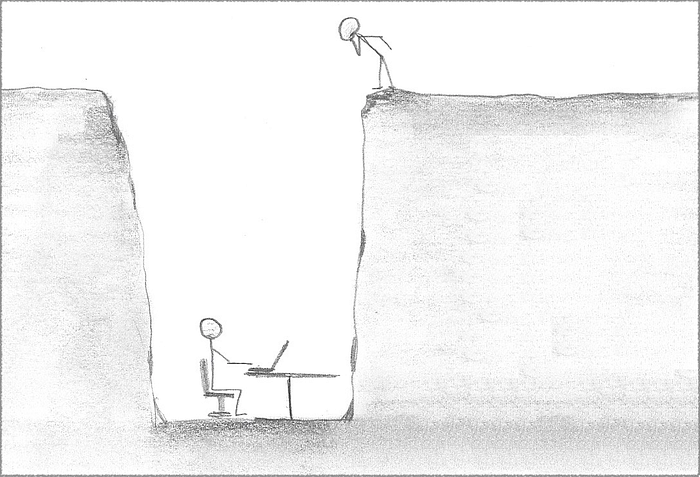

I joined that project from the outset as its production engineering lead, coming straight from a role as the technical leader for three software development teams. It was a wide lateral jump, so it was also a big gamble. There was one planet-scale obstacle in my jumping arc.


Later that year, our company also made a parallel investment in a new offering based on an open-source project with a funny Greek name: Kubernetes, which Google released in mid-2014 as an orchestrator of containerized cloud workloads. It carried the pedigree of Borg, the internal system underpinning the mind-bending scale of Google’s own workloads.

With a billion-dollar investment and an all-star line-up of talent and developer advocates behind Kubernetes, the competition was over before it even started.

In the relatively short span of three years, Cloud Foundry and Docker Enterprise had lost all momentum in new deployments. Existing customers spent years grappling with the costly reality of re-platforming their workloads, retooling their CI/CD pipelines, and retraining their employees into a new world of Kubernetes clusters.

For me, it was not a wrong career move in absolute terms. I met people from different backgrounds, and the grueling demands of the on-call schedule for something at that scale meant a considerable compensation increase. And even after all these years, I am convinced that every software developer should rotate through operations for a few years.

Still, by the end of that transition, my Cloud Foundry skills were worthless in the job market.

Lesson #4: Beware the Highly Specialized Domain Projects

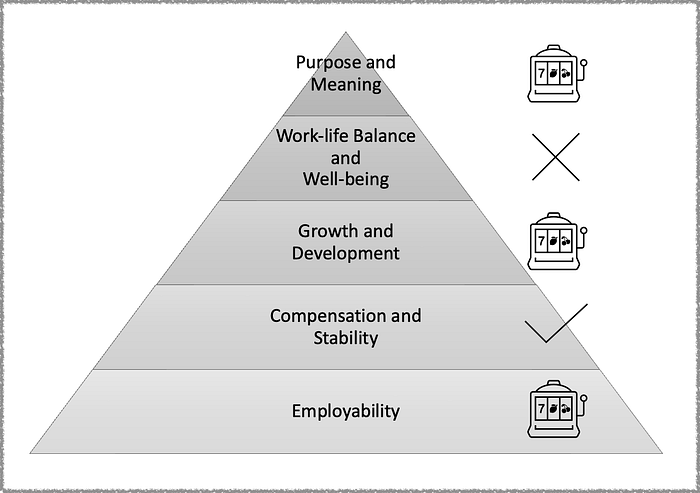

This lesson comes from the project I joined immediately after my Cloud Foundry stint. It is also the project mentioned earlier in this story, where we struggled to fill a software development position.

The product was a hosted offering for healthcare providers in the oncology field. From a technology perspective, there was a strong focus on bioinformatics and natural language processing.

The most significant appeal for me was the opportunity to work with lab scientists and actual medical doctors. Applying NLP to help oncologists find alternative, life-saving care for cancer patients fulfilled the “Purpose and Meaning” layer in ways that no other project in my career ever had.

However, the technology stack was entering the three-year period in which it would quickly fall behind the times, especially for a SaaS offering.

- Hosting? Everything ran on VMs. There was not a container in sight.

- Programming language? Java.

- Persistence layer? DB2.

- UI? AngularJS.

- Task management and source-code control? Jazz.

- CI? Ant and Jenkins.

- CD? Largely manual, with multi-hour planned maintenance windows.

With the unforgivable exception of using the aging Ant build tool over Maven in the CI layer, everything else would have been a solid choice a couple of years before the project was commissioned.

In short, the choices were perfectly sensible, well-executed, and cost-effective. Decisions to stick with stack components were sound from a business perspective, but from the perspective of an experienced software developer, this stack was a highway to skills obsolescence.

A small yet significant example is the use of Jazz for source-code control. The year I joined the project was also the year our company formed its strategic partnership with GitHub, paving the way to replace Jazz with GitHub repositories for internal projects. Our project never migrated from Jazz because it made no business sense to forklift all the code and build processes to a new source-code control system, let alone retrain all developers to use Git.

Still, from the perspective of software developers, the “employability” value of Jazz skills trended toward irrelevance in the ensuing years, almost in inverse proportion to the value of Git, GitHub, and GitLab skills.

Moving to a more relevant example, querying all the alterations in a genetic sample stored in a database did not require a software developer to understand cancer biology. It required good communication with the cancer biologists on the team: explaining the table structure representing the data and figuring out which table attributes represented those unique alterations.

At the same time, the database world was witnessing growing job-market demand for skills in MongoDB (the poster child of NoSQL databases), Cassandra, and Neo4j, alongside data-processing platforms such as Apache Spark and Hadoop.

And while Java was, and remains, a desirable skill in the job market, people working on the project backend watched Python slowly take over as the primary programming language for anything related to machine learning and natural language processing. As if that were not enough, Google and Facebook released TensorFlow and PyTorch in rapid succession, accelerating skills obsolescence for those working on machine learning problems in Java.

In a couple of years, our technology stack went from sensible to something no one would consider for a new project in the same domain. Ultimately, that is why we struggled to find new software developers while, at the same time, the sciences team managed to recruit increasingly more impressive talent.


Even without this story’s career framework clear in my head at the time, I was open with candidates about the trade-off between the unique opportunity of working in a specialized domain and the negative impact on their long-term growth and development.

Some candidates had a specific interest in the bioinformatics and NLP components of the project. Since we were not hiring for those areas, I was equally open that any work on those components would depend strictly on future changes to their job roles and was, therefore, not guaranteed.

I still cherish the experience, but here is the painful reality for software developers working with undifferentiated technology in a highly specialized domain: domain experts are essential; software developers are not.

That reality would be punctuated three years into the project, when it became clear that demand would not translate into meaningful financial results.

The team was considerably downsized to stretch its budget runway while hoping for a turnaround. Anyone not working directly on bioinformatics or machine learning, which meant most of its career software developers, was asked to find other opportunities within the company, myself included.

Lesson #5: There Is Always Time Until There Isn’t

Back to the bus-riding analogy, there is something worse than missing a bus: boarding the wrong one.

My cautionary tale in this story is that even though my objective of shifting to cloud-based software development had been clear for several years, between a failed start with Cloud Foundry and an attractive side-step into the world of life sciences, six years went by in what today feels like the blink of an eye.

For someone mid-career, six years is a dangerously long detour.

It took me a new job and another three years to catch the Kubernetes bus and take a seat, becoming skilled on the platform and acknowledged as someone who could advise other developers and system administrators.

Nine years had passed. For someone starting fresh from college today, six years traveling in the wrong direction is a career-defining interval.


Many will point out that the “wrong direction” is relative and that having a diverse background before starting a software development role later in life is possible, even desirable.

I do not disagree. In fact, one of my favorite software developers has a degree in Literature and spent over a decade playing various musical instruments at shows before starting a successful career in software development.

However, once one gets in the groove of a career path in tech, indulging in career detours and missing consequential technology shifts can often derail their progress for quite a while.

Yes, we always have time, but career steps are measured in years, and the margin for recovery decreases with each passing year.

Conclusion

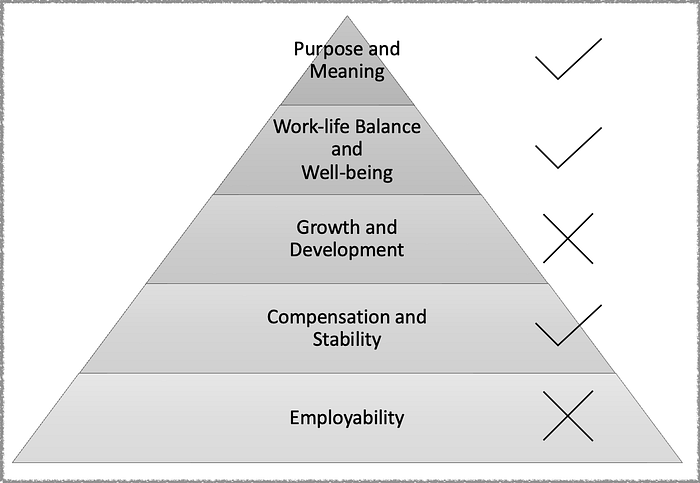

My best career advice to software developers (well, to any professional) is to continuously monitor their career progression against the layers of their “Hierarchy of Career Priorities.”

There are no wrong career paths, but there are certainly careless steps. Something as simple as placing an “up” or “down” arrow next to each layer in the pyramid can go a long way toward helping one make the best decision about staying in their current role or moving to a new one.

With awareness, one may optimize for one or more layers, such as choosing a higher compensation over a more interesting job or sacrificing their work-life balance for faster progress toward their life goals.

One may also skip hierarchy layers altogether, such as deciding to work for a dream non-profit with virtually no compensation or betting on a startup gig with compensation tied to the uncertain prospect of the company’s success.

However, the natural order of priorities in the pyramid tends to catch up with those imbalances. They manifest in different ways, such as depression induced by a lack of meaning on the job, burnout from long stretches of 80-hour work weeks, or deteriorating employability due to working with obsolete technology stacks.

And dream jobs may not be that satisfying when one is constantly awakened from the dream by unexpected bills or stressed out over personal finances between irregular paychecks.

No matter the chosen path, having a framework to evaluate your career is your best tool for making judgment calls, whether extending a bet on a risky choice or bailing out when a job becomes a potential liability to your career prospects.

That framework is also invaluable for companies gauging their attractiveness to prospective candidates, especially when technologies can shift faster than projects can respond to those changes.

And finally, keep aiming for purpose and meaning.

Be successful.
