Deep Learning (Part 1): Understanding Basic Neural Networks

Description:

This blog post aims to provide a basic understanding of neural networks, a fundamental concept in the field of artificial intelligence and machine learning. Starting from the basics, we will explore the structure, functioning, and applications of neural networks. Whether you are a beginner or a professional, this guide will equip you with the knowledge to grasp the core concepts and unleash the potential of neural networks.

Sections:

Definition of neural networks

Definition of Deep learning

History of Deep Learning

Basic Neural Networks

How does the Biological and Artificial Neuron work?

Section 1- Definition of neural networks

Def: Neural networks, also called artificial neural networks (ANNs) or simulated neural networks (SNNs), are a subset of machine learning and the backbone of deep learning algorithms. They are called “neural” because they mimic how neurons in the brain signal one another. Many engineering ideas come from nature; airplanes, for example, were inspired by birds [8]. In the same way, a neural network is a machine-learning algorithm inspired by the human brain: a network of interconnected nodes, or artificial neurons, that learn to recognize patterns in data.

Def: Artificial Neural Networks (ANNs) are computational models inspired by the structure and functioning of biological neural networks in the human brain. ANNs consist of interconnected nodes, or “neurons,” that process and transmit information.

Application: Complex machine-learning problems such as image classification, recommendation systems, and language-to-language translation have been solved with this technique [8].

Section 2- Definition of Deep learning

Def: The term, Deep Learning, refers to training Neural Networks, sometimes very large Neural Networks.

Def: Deep learning refers to building a system that learns in a way loosely modeled on how humans learn, using an artificial neural network.

Def: Deep learning is a subset of machine learning that uses artificial neural networks to learn from data. The “deep” in deep learning refers to the depth of layers in a neural network.

Def: A neural network of more than three layers, including the inputs and the output, can be considered a deep-learning algorithm.

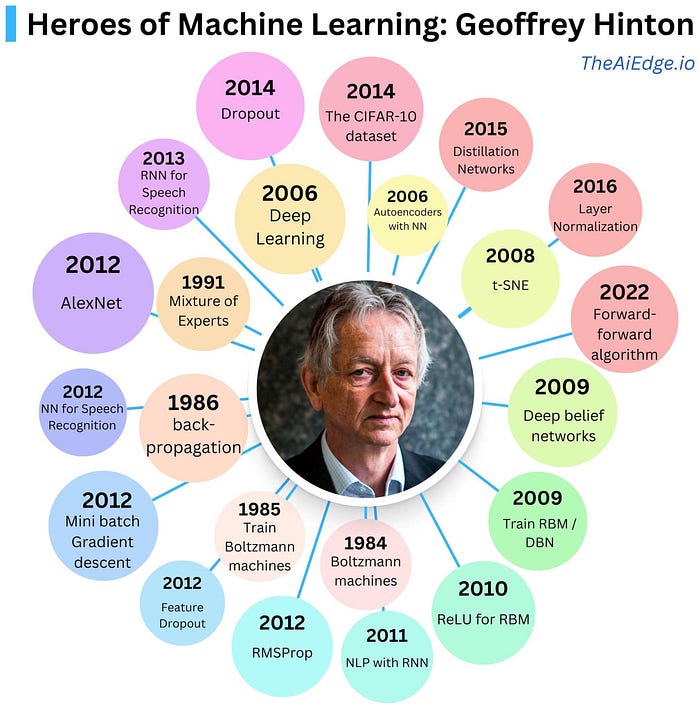

Section 3- History of Deep learning

Artificial neural networks were first proposed in the 1940s, but they have become very popular only in recent years [7]. The history of deep learning can be traced back to the early days of artificial intelligence (AI): in 1943, Warren McCulloch and Walter Pitts created a mathematical model of neurons in the brain, which was the first artificial neural network. The recent popularity is due to the increase in AI-oriented applications and the amount of data being generated by companies. While classical machine learning algorithms fell short of analyzing big data, artificial neural networks performed well on it [8].

- In the 1950s, Frank Rosenblatt developed the perceptron, a simple two-layer neural network that could be trained to recognize patterns. However, the perceptron had limitations, and it was not until the 1980s that neural networks began to be used more widely.

- In the 1980s, Geoffrey Hinton and others popularized a training method for neural networks called the backpropagation algorithm. Backpropagation allowed multi-layer neural networks to learn more complex patterns, and it led to a renewed interest in neural networks.

- In the 1990s, deep learning research continued, but it was still a relatively niche field. From the 2000s onward, a number of breakthroughs led to a resurgence of interest in deep learning.

- One of the most important developments was the convolutional neural network (CNN). CNNs are a type of neural network specifically designed for image processing. They have been used to achieve state-of-the-art results in a variety of image recognition tasks, such as face recognition and object detection.

- Another important development was the recurrent neural network (RNN). RNNs are a type of neural network specifically designed for processing sequential data. They have been used to achieve state-of-the-art results in a variety of natural language processing tasks, such as machine translation and speech recognition.

- In the past decade, deep learning has made significant progress in a wide variety of tasks, including image recognition, natural language processing, speech recognition, and machine translation. It is now one of the most active and promising areas of research in AI.

Here are some of the major milestones in the history of deep learning:

- 1943: Warren McCulloch and Walter Pitts create a mathematical model of neurons in the brain.

- 1958: Frank Rosenblatt develops the perceptron, a simple two-layer neural network that can be trained to recognize patterns.

- 1986: David Rumelhart, Geoffrey Hinton, and Ronald Williams popularize the backpropagation algorithm, which allows neural networks to learn more complex patterns.

- 1998: Yann LeCun et al. develop the LeNet-5 CNN, which achieves state-of-the-art results in handwritten digit recognition.

- 2006: Geoffrey Hinton et al. develop the Deep Belief Network, which is a type of neural network that can be pre-trained on a large amount of unlabeled data.

- 2012: Alex Krizhevsky et al. develop the AlexNet CNN, which achieves state-of-the-art results in image classification.

- 2014: Ilya Sutskever et al. introduce sequence-to-sequence learning with neural networks, applied to machine translation.

- 2016: Google Translate adopts a neural machine translation system based on deep learning.

- 2016: AlphaGo defeats a professional Go player using deep learning.

- 2017: Ashish Vaswani et al. introduce the Transformer, a new type of neural network originally designed for natural language processing tasks.

Section 4- Basic Neural network

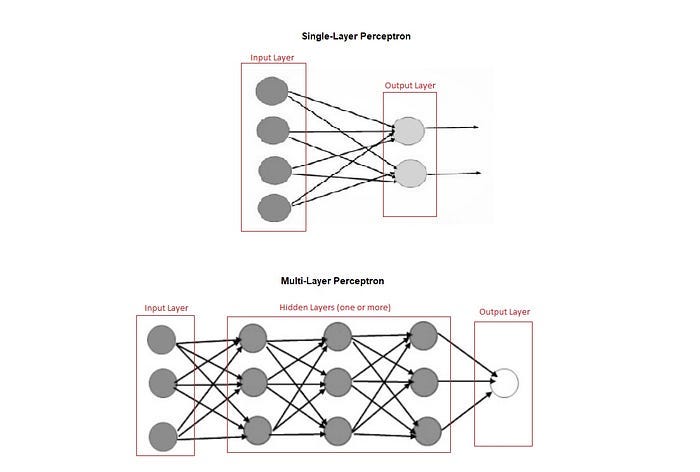

4.1- Single-layer Perceptron

A perceptron is an algorithm that lets neurons learn from the given information. It comes in two types: a single-layer perceptron contains no hidden layers, whereas a multi-layer perceptron contains one or more hidden layers. The single-layer perceptron is the simplest form of an artificial neural network (ANN): it has only one layer of neurons and can be used to solve simple problems. This architecture was developed by Frank Rosenblatt in 1957 [8].

Single layer neural network with Example


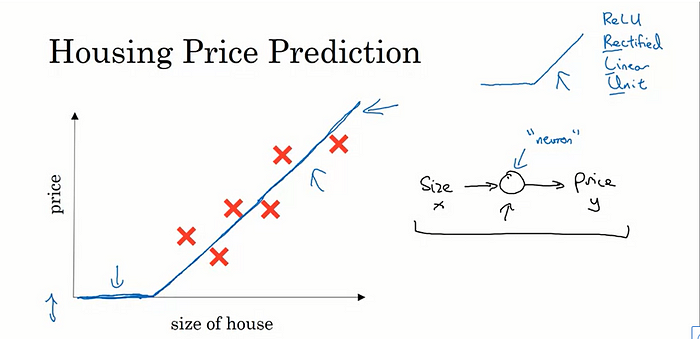

Let’s start with a housing price prediction example. Say you have a data set with six houses: you know the size of each house in square feet or square meters, you know its price, and you want to fit a function that predicts the price of a house from its size. If you are familiar with linear regression, you might fit a straight line to this data. To be a bit fancier, you might note that prices can never be negative. So instead of the straight-line fit, which eventually becomes negative, we bend the curve so that it ends up at zero. The thick blue line in the figure is then your function for predicting the price of a house from its size: it is zero on the left and a straight-line fit to the right.

You can think of this function that you have just fit to housing prices as a very simple neural network, almost the simplest possible one. The input to the neural network is the size of the house, which we call x. It goes into a node, drawn as a little circle, which outputs the price, which we call y. This little circle is a single neuron, and it implements the function described above: it takes the size as input, computes a linear function, takes the max of that and zero, and outputs the estimated price.

In the neural network literature you will see this function a lot. A function that is zero for a while and then takes off as a straight line is called a ReLU, which stands for rectified linear unit. “Rectify” just means taking the max with 0, which is why the function has this shape. You don’t need to worry about ReLU units for now, but you will see them again later.

If this single neuron is a tiny neural network, a larger neural network is formed by taking many of these single neurons and stacking them together. If you think of one neuron as a single Lego brick, you get a bigger neural network by stacking together many of these Lego bricks.
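As a rough illustration of the single neuron described above, here is a minimal Python sketch. The function names `relu` and `predict_price`, and the `weight` and `bias` values, are assumptions made up for this example; they are not fitted to any real housing data.

```python
def relu(z):
    """Rectified linear unit: returns z when it is positive, otherwise 0."""
    return max(0.0, z)


def predict_price(size, weight=0.2, bias=-50.0):
    """A single neuron: a linear function of the size, followed by ReLU.

    weight and bias are illustrative placeholders, not learned values.
    """
    return relu(weight * size + bias)


# Small houses give a price of zero; larger ones follow the straight line.
for size in (100, 1000, 2000):
    print(size, predict_price(size))
```

In a real model the weight and bias would be learned from the training examples rather than chosen by hand.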

4.2- Multi-layer neural network

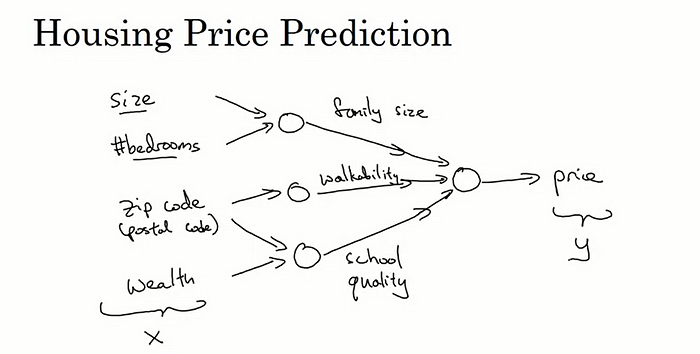

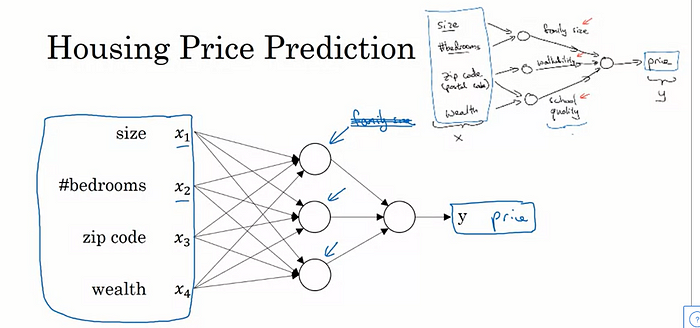

Let’s see an example. Say that instead of predicting the price of a house just from its size, you now have other features. You know other things about the house, such as the number of bedrooms, which we write as “#bedrooms”. You might think that one of the things that really affects the price of a house is family size: can this house fit a family of three, four, or five? It is the size in square feet or square meters, together with the number of bedrooms, that determines whether a house can fit your family.

Maybe you also know the zip code (called a postal code in some countries). The zip code may tell you about walkability: is the neighborhood highly walkable? Can you walk to the grocery store or to school, or do you need to drive? Some people prefer highly walkable neighborhoods. The zip code together with the wealth of the neighborhood may also tell you, certainly in the United States but in some other countries as well, how good the school quality is.

Each of the little circles in the figure can be one of those ReLUs (rectified linear units) or some other slightly nonlinear function. Based on the size and number of bedrooms, you can estimate the family size; based on the zip code, the walkability; and based on the zip code and wealth, the school quality. Finally, you might think that people decide how much they are willing to pay for a house by looking at the things that really matter to them, in this case family size, walkability, and school quality, and that this helps you predict the price.

In this example, x is all four of these inputs and y is the price you are trying to predict. By stacking together a few of the single neurons, the simple predictors from the previous example, we now have a slightly larger neural network. The way you use a neural network is that when you implement it, you give it just the input x and the output y for a number of examples in your training set, and it figures out all the things in the middle by itself. So what you actually implement is a neural network with four inputs. The input features might be the size, the number of bedrooms, the zip code or postal code, and the wealth of the neighborhood. Given these input features, the job of the neural network is to predict the price y. Notice also that each of the circles, which are called hidden units, takes all four input features as its input. For example, rather than saying that the first node represents family size and that family size depends only on the features x1 and x2, we say: neural network, you decide whatever you want this node to be, and we’ll give you all four input features to compute it. We say that the input layer and the layer in the middle of the neural network are densely connected, because every input feature is connected to every one of the circles in the middle. The remarkable thing about neural networks is that, given enough training examples with both x and y, they are remarkably good at figuring out functions that accurately map from x to y. So, that’s a basic neural network.

It turns out that as you build your own neural networks, you’ll probably find them most useful and most powerful in supervised learning settings, meaning that you are trying to take an input x and map it to some output y, as in the housing price prediction example.
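To make the idea of a densely connected layer more concrete, here is a small sketch in plain Python. It assumes four input features, three hidden units with ReLU, and one output unit with no activation; the helper names (`dense_layer`, `predict`), the random untrained weights, and the example feature values are all hypothetical and only show how data flows through the network, not how it is trained.

```python
import random


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense_layer(inputs, weights, biases, activation):
    """Dense layer: every unit receives every input feature."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


random.seed(0)
n_inputs, n_hidden = 4, 3

# Untrained random weights: placeholders for values that training would learn.
hidden_weights = [[random.uniform(-1, 1) for _ in range(n_inputs)]
                  for _ in range(n_hidden)]
hidden_biases = [0.0] * n_hidden
output_weights = [[random.uniform(-1, 1) for _ in range(n_hidden)]]
output_biases = [0.0]


def predict(x):
    """Forward pass: input features -> hidden units -> predicted price."""
    hidden = dense_layer(x, hidden_weights, hidden_biases, relu)
    return dense_layer(hidden, output_weights, output_biases, identity)[0]


# Hypothetical, already-scaled values for size, #bedrooms, zip code, wealth.
x = [1.2, 3.0, 0.5, 0.8]
print(predict(x))
```

Because the weights are random, the printed number is meaningless as a price; training on examples of x and y is what would turn the hidden units into useful intermediate features.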


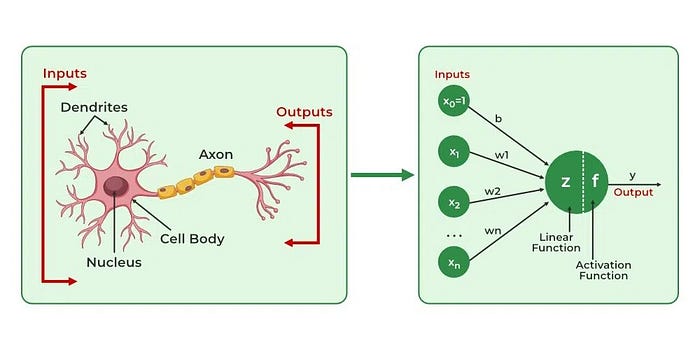

Section 5- How does the Biological and Artificial Neuron work?

The brain is the most complex part of the human body, and researchers are still uncovering how it works. To better understand the brain, let’s consider some simple examples.

Example 1

Imagine a ball coming towards us. What would our first reaction be? Most likely, we would move our hands to catch the ball. Behind the scenes, this can be described in terms of input and output: our eyes sense the incoming ball and send input signals to our brain. The brain receives these signals, analyzes them, and generates the output reaction of moving our hands to catch the ball.

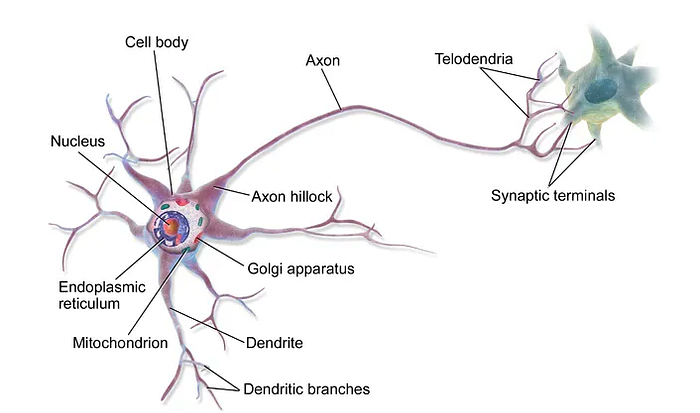

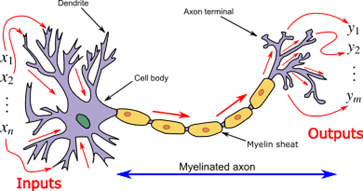

In biological terms, brain cells have receptors, or receivers, known as dendrites that receive electrical signals as input data from our sensory organs, such as the eyes, nose, and skin. The brain cells analyze these signals and respond by releasing reaction signals through axon terminals as output data.

Example 2

Consider what happens when you touch a hot object with your hand: you feel the pain and pull your hand away immediately. This action and reaction happen within a fraction of a second. Have you ever wondered how this happens? There are billions of neurons connected throughout the body. When you touch a hot object, an electrical impulse travels from the neurons in your hand to the neurons in your brain. A decision is then made, and an electrical impulse immediately travels back to the neurons in your hand, instructing it to pull away.

Explanation

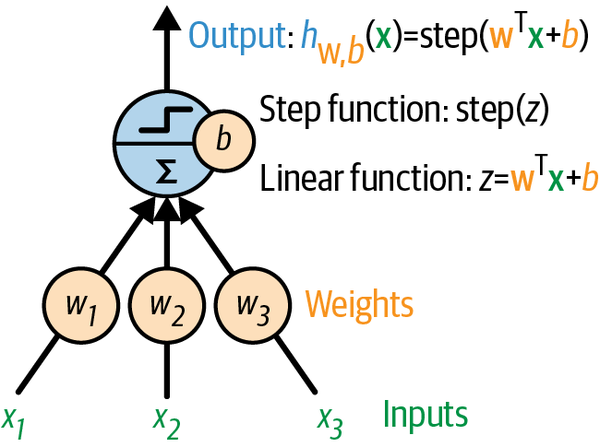

Inside a neuron, the dendrites act as receivers, analogous to the input layer, and the axon acts as the transmitter, analogous to the output layer. In the cell body, the incoming signal is in effect compared to a threshold. If the signal is greater than the threshold, the electrical impulse is transmitted to another neuron; if it is less than the threshold, the impulse is not transmitted.

A biological neuron operates through a series of simple electrochemical processes. It receives signals from other neurons through its dendrites. When these incoming signals add up to a certain level (a predetermined threshold), the neuron switches on and sends an electrochemical signal along its axon. This, in turn, affects the neurons connected to its axon terminals. The key thing to note here is that a neuron’s response is like a binary switch: it either fires (activates) or stays quiet, without any in-between states [9].
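The binary-switch behavior described above can be sketched in a few lines of Python. This is a deliberately simplified toy model, not a description of real neurons: the function name `fires`, the signal values, and the threshold of 1.0 are assumptions chosen for illustration.

```python
def fires(incoming_signals, threshold):
    """Return 1 if the summed incoming signals reach the threshold, else 0."""
    return 1 if sum(incoming_signals) >= threshold else 0


# Weak combined input: the neuron stays quiet.
print(fires([0.2, 0.3], threshold=1.0))

# Stronger combined input: the neuron fires.
print(fires([0.6, 0.5, 0.2], threshold=1.0))
```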

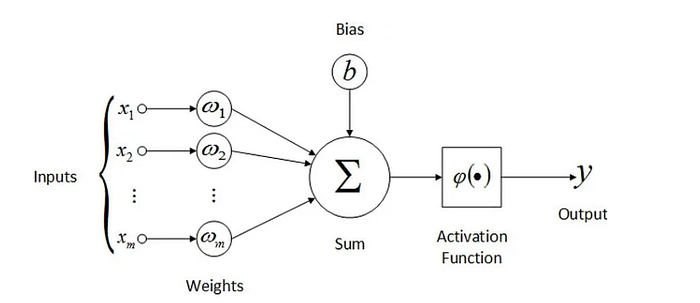

Similarly, artificial neurons receive information from the input layer and transmit it to other neurons through the output layer. The neurons are connected, and a weight is assigned to each connection. These weights represent the strength of the connection, and they play an important role in the activation of the neuron. The bias is like the intercept in a linear equation.


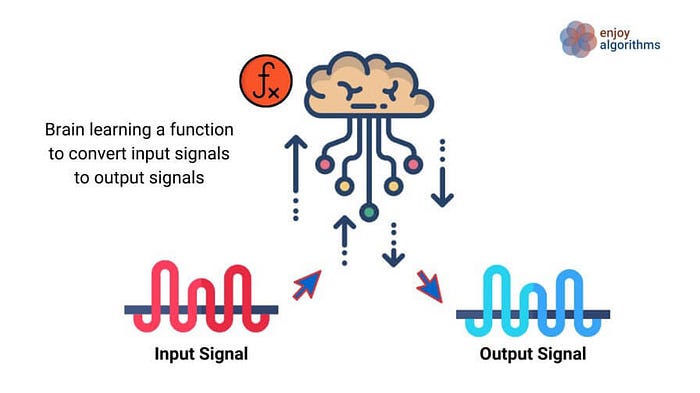

Here, the inputs (x1 to xn) are multiplied by their corresponding weights (w1 to wn) and summed together with the bias. The result is passed as input to the activation function, which is where the decision happens, and the output of the activation function is passed on to other neurons. There are different types of activation functions, such as linear, step, sigmoid, and ReLU.
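The computation of an artificial neuron, a weighted sum plus bias followed by an activation function, can be sketched as follows. The input values, weights, and bias are made-up numbers for illustration, and the function names are assumptions of this example rather than part of any particular library.

```python
import math


def linear(z):
    return z


def step(z):
    return 1.0 if z >= 0 else 0.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    return max(0.0, z)


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus the bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


x = [1.0, 2.0, 3.0]    # inputs x1..x3 (illustrative values)
w = [0.5, -1.0, 0.25]  # weights w1..w3 (illustrative values)
b = 0.5                # bias

# Here z = 0.5 - 2.0 + 0.75 + 0.5 = -0.25; each activation treats it differently.
for activation in (linear, step, sigmoid, relu):
    print(activation.__name__, neuron(x, w, b, activation))
```

The same weighted sum gives different outputs depending on the activation: the step function makes a hard yes/no decision, while sigmoid and ReLU give graded responses.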

In our childhood we may have struggled with catching balls. However, through practice our brain eventually learns how to do so. During this process the brain effectively learns the mapping function from inputs to outputs. In other words, the process of learning a mapping function through repeated experience is referred to as intelligence in living things. Now the critical question is: what if some nonliving things start doing the same?

Can we develop the same capability for our computers? That is where the concept of machine learning comes into play.


Do you want to get into data science and AI and need help figuring out how? I can offer you research supervision and long-term career mentoring.

Skype: themushtaq48, email: mushtaqmsit@gmail.com


Source

1- AI vs. Machine Learning vs. Deep Learning vs. Neural Networks: What’s the difference?

2- Google Bar

3- Neural Network and Deep Learning (Andrew)

4- A Short Journey To Deep Learning

5- What is a Neural Network (Video)?

6- How Neural Networks work (Video)

7- Introduction of Machine Learning

8- Artificial Neural Networks in Machine Learning

9- A Brief History of the Neural Networks